When to Use Apache Spark

Apache Spark has spread quickly around the world thanks to its simplicity and powerful processing engine, and there are numerous situations where it is helpful.

Sep 24, 2020

Introduction

This guide explains when Apache Spark is a good fit for projects or other professional initiatives, and when it is not.

To judge when Spark is appropriate, you first need to understand what it is. Check out this Pluralsight guide for more information on Apache Spark.

Ideal Projects for Spark


- Big data in the cloud: If you need to work with big data in the cloud and take advantage of each provider's technologies (Azure, AWS), managed platforms such as Databricks make it easy to set up Apache Spark on top of the provider's data lake storage, which decouples processing from storage.

- Multiple teams: When data engineers, data scientists, programmers, and BI analysts must work together, you need a unified development platform. Spark, used through notebooks, lets all of these roles collaborate on the same code and data.

- Reduced learning time: Because Apache Spark supports several languages (Scala, Python, SQL, Java, R), your team can start in a language it already knows, which shortens the learning curve when a project must start as soon as possible.

- Batch and streaming tasks: If your project, product, or service requires both batch and real-time processing, you can handle both with Apache Spark and its libraries (for example, Structured Streaming) instead of running a separate big data tool for each type of task.

Recommendations

Apache Spark is a powerful tool for many kinds of big data projects. Still, keep the following recommendations in mind if you want to get the most out of it:

- Koalas: If your engineers are used to processing data in Python with pandas, they don't have to relearn everything from scratch. They can start with Koalas, which implements the pandas API on top of Apache Spark so that the same pandas methods run in a distributed way.

- Delta: Delta Lake is an open-source storage layer that brings ACID transactions to data lakes on Spark, for both batch and streaming workloads, so you don't have to manage the underlying files by hand. This is ideal when your data is constantly being updated, inserted, or versioned.

- Containers (Docker/Kubernetes): If you are not going to use GPUs, it may be better to run your Apache Spark cluster in containers, since scaling out with containers is usually faster than adding more virtual machines.

When Not to Use Spark

Despite Spark's potential for many use cases, some specialized needs call for a different big data engine. In the following cases, another technology is recommended instead of Spark:

- Ingesting data in a publish-subscribe model: Here you have multiple sources and multiple destinations moving millions of messages in a short time. Spark is not recommended for this model; Apache Kafka is a better fit, and you can then use Spark to consume the data from Kafka.

- Low computing capacity: Apache Spark processes data in cluster memory by default. If your cluster or virtual machines have little computing capacity, consider alternatives such as Apache Hadoop MapReduce, which relies on disk rather than memory between processing steps.

Learn More

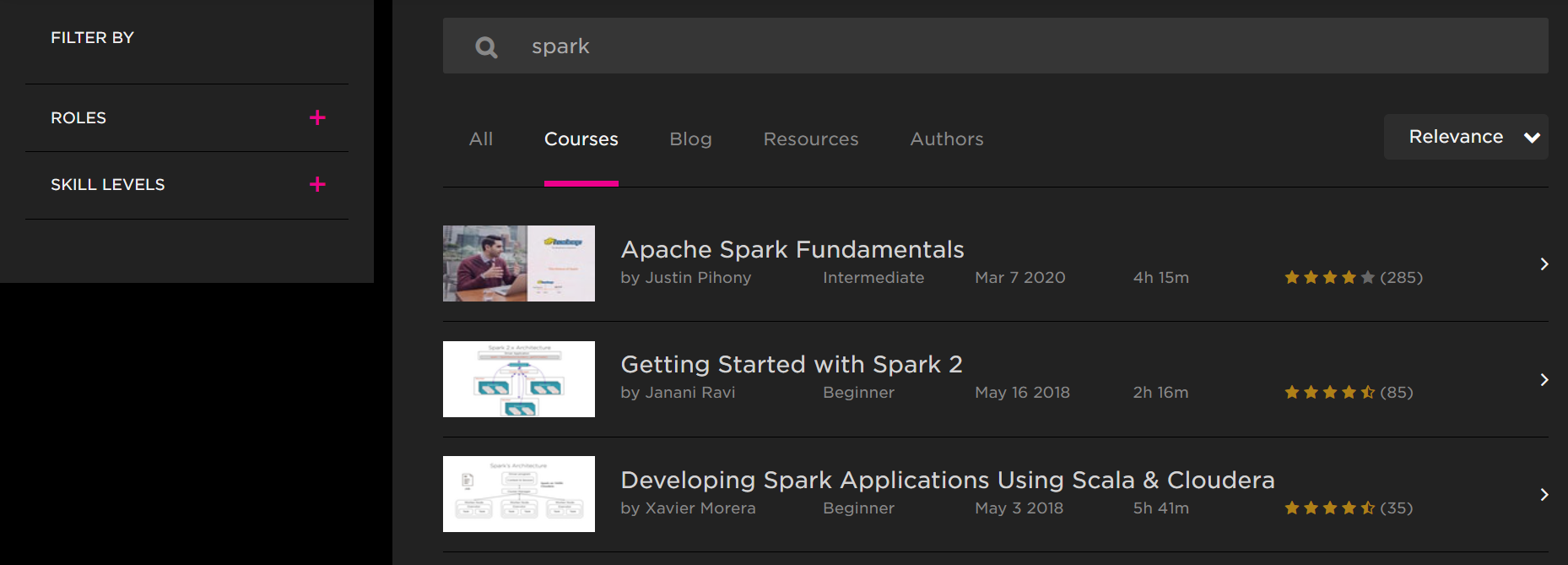

If you want to build your Apache Spark skills, Pluralsight offers a series of courses that can help you advance your career and take part in large big data initiatives:

Conclusion

Apache Spark is a unified big data and analytics platform that fits most types of projects, with the exceptions noted above. The key is knowing how to use it correctly, which you can learn from the courses listed above.

I wish you great success with your big data projects!
