Computer Vision with Microsoft Azure

Jul 8, 2020 • 10 Minute Read

Introduction

Computer vision is one of the most popular uses of artificial intelligence. It allows computers to analyze visual images and find features, in many cases more accurately and efficiently than a human would. It's also very difficult to implement correctly: computer vision models require tremendous resources in computing power, time, and expertise. As computer vision becomes more accepted, and even expected, in modern apps, how can developers at small companies without the resources of a software giant keep up?

That's where Microsoft Azure Cognitive Services come in. Using the Computer Vision service, developers of any app can add computer vision with little or no knowledge of machine learning. For example, the classic "Hot Dog or No Hot Dog" app could easily be implemented with the image analysis service, and adult content detection could help make sure that only age-appropriate images are uploaded to an app.

The Azure Cognitive Services are implemented as REST APIs. These APIs expose the power of models that Microsoft has spent the time and resources to train. You can send image data to these APIs, and Microsoft will perform some computer vision magic, charge you a little bit of money (though it's free to get started), and send the results back to you.
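To give a feel for what a raw REST call looks like before switching to the SDK, here is a minimal sketch using only Python's standard library. It assumes version v3.2 of the Computer Vision API and its Describe Image operation at `/vision/v3.2/describe`, authenticated with the `Ocp-Apim-Subscription-Key` header and given a JSON body containing the image URL; check the API reference for the version your resource supports. The helper names `build_describe_request` and `describe` are hypothetical, not part of any SDK.

```python
import json
import urllib.request

# Assumption: API version v3.2; other versions use a different path segment.
API_VERSION = "v3.2"


def build_describe_request(endpoint: str, key: str, image_url: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to the Describe Image operation."""
    url = f"{endpoint.rstrip('/')}/vision/{API_VERSION}/describe"
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        # The resource key authenticates the call, so treat it like a password.
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def describe(endpoint: str, key: str, image_url: str) -> dict:
    """Send the request and decode the JSON response body."""
    request = build_describe_request(endpoint, key, image_url)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `describe(ENDPOINT, KEY, IMAGE_URL)` with your own values should return the parsed JSON; assuming the documented response shape, the captions sit under `result['description']['captions']`. The SDK used in the rest of this guide wraps calls like this one in typed methods and models.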

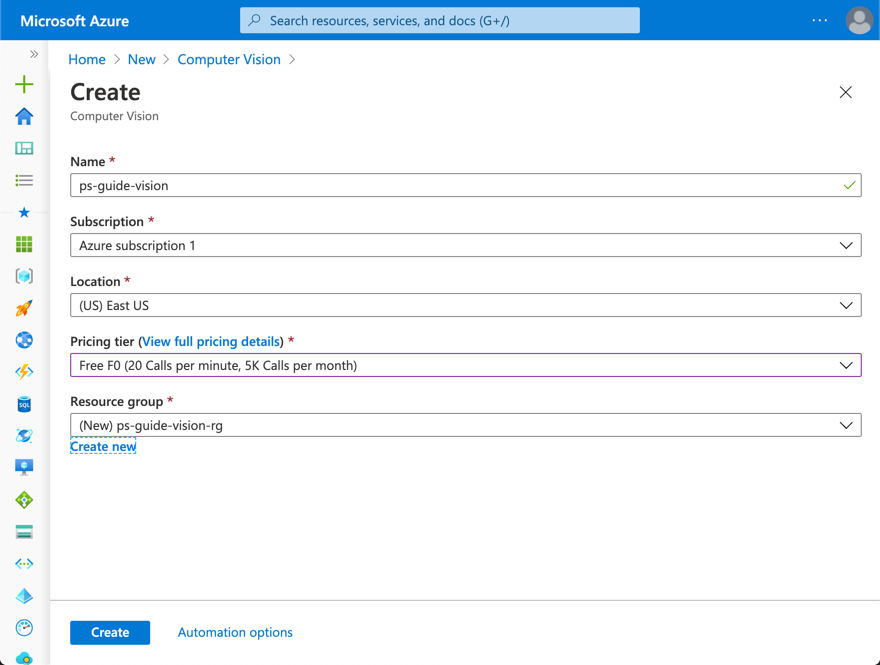

Setup

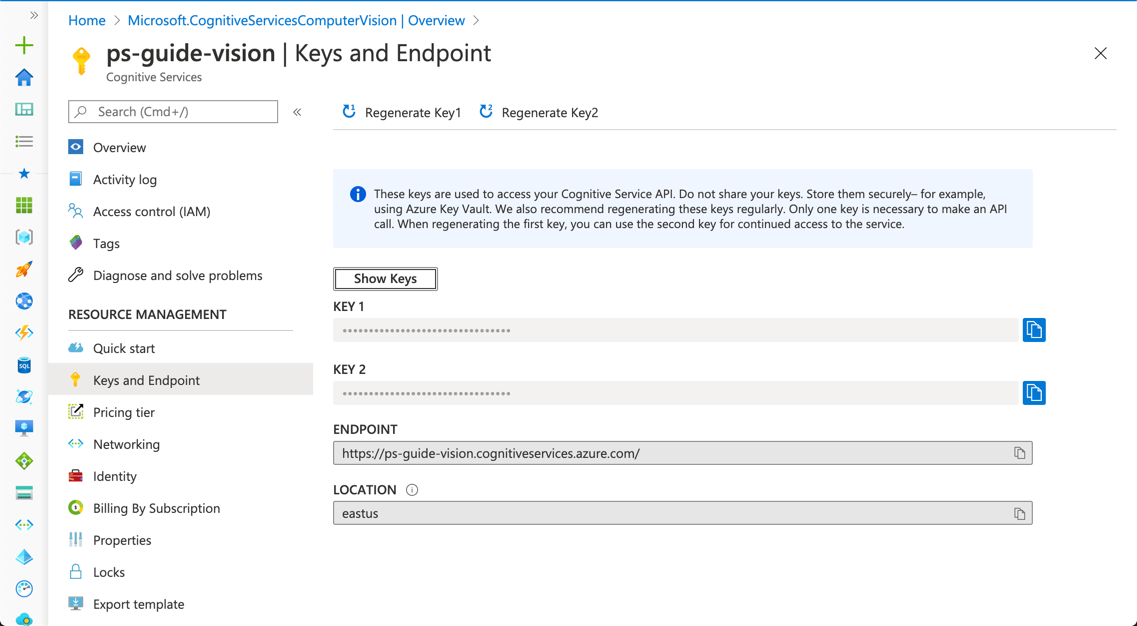

Create a Computer Vision resource in the Azure Portal. In the resource's overview, click the link to manage the keys. You'll see two keys and an endpoint. The keys authenticate you as a user of the service, so treat them like passwords.

Image Analysis

This guide uses Python to demonstrate the Computer Vision service. Software development kits (SDKs) are also available for other languages, including C#, Java, and JavaScript, and you can send data directly to the API endpoints using an HTTP library like requests. To use the Python SDK, you'll need to install it.

```shell
$ pip install azure-cognitiveservices-vision-computervision
```

Next, create a ComputerVisionClient using the key and endpoint from the Azure Portal.

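```python
from azure.cognitiveservices.vision.computervision import ComputerVisionClient
from msrest.authentication import CognitiveServicesCredentials

# Placeholders: use the endpoint and one of the keys from the Azure Portal.
ENDPOINT = 'https://<your-resource-name>.cognitiveservices.azure.com/'
KEY = '<your-key>'

client = ComputerVisionClient(ENDPOINT, CognitiveServicesCredentials(KEY))
```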
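Because the keys work like passwords, you may prefer not to hard-code them. Here is a minimal sketch that reads the endpoint and key from environment variables instead. The variable names `COMPUTER_VISION_ENDPOINT` and `COMPUTER_VISION_KEY` and the helpers `load_settings` and `make_client` are hypothetical; use whatever names your deployment defines.

```python
import os
from typing import Mapping, Tuple

# Hypothetical environment variable names.
ENDPOINT_VAR = "COMPUTER_VISION_ENDPOINT"
KEY_VAR = "COMPUTER_VISION_KEY"


def load_settings(environ: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (endpoint, key), failing loudly if either variable is unset."""
    missing = [name for name in (ENDPOINT_VAR, KEY_VAR) if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return environ[ENDPOINT_VAR], environ[KEY_VAR]


def make_client(environ: Mapping[str, str] = os.environ):
    """Create a ComputerVisionClient from the environment settings."""
    # Imported inside the function so load_settings works without the SDK.
    from azure.cognitiveservices.vision.computervision import ComputerVisionClient
    from msrest.authentication import CognitiveServicesCredentials

    endpoint, key = load_settings(environ)
    return ComputerVisionClient(endpoint, CognitiveServicesCredentials(key))
```

Passing the mapping as a parameter keeps `load_settings` easy to test with a plain dictionary.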

Use the client to describe an image by passing the URL of an image to the describe_image method.

```python
description = client.describe_image(IMAGE_URL)
```

If the Computer Vision service is able to describe the image, the result includes a list of captions.

```python
for caption in description.captions:
    print(caption.text)
```

Azure describes this picture as "a room filled with furniture and a large window."

And that's all there is to it. You can use the results in any app with no knowledge of computer vision!
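Each caption carries a confidence score alongside its text, and `describe_image` can return more than one caption if you ask for them, assuming its `max_candidates` parameter, for example `client.describe_image(IMAGE_URL, max_candidates=3)`. A small sketch (the helper name `best_caption` is hypothetical) that picks the most confident caption:

```python
from typing import Optional


def best_caption(description) -> Optional[str]:
    """Return the text of the highest-confidence caption, or None if none exist.

    `description` is the object returned by describe_image; each caption has
    `text` and `confidence` attributes.
    """
    if not description.captions:
        return None
    best = max(description.captions, key=lambda caption: caption.confidence)
    return best.text
```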

Face Detection

The same client can also detect faces in an image. Provide an image URL to the analyze_image method. The second parameter is a list of features to detect; to detect faces, pass a list containing the single string 'faces'.

```python
face_results = client.analyze_image(FACE_IMAGE_URL, ['faces'])
```

The results include a list named faces. Each detected face, if any, includes an age, a gender, and a face_rectangle giving its bounds.

```python
for face in face_results.faces:
    print('{} year old {}'.format(face.age, face.gender))
    print(face.face_rectangle.as_dict())
```

Azure identifies this picture as a 21-year-old female.
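The face_rectangle describes a face by its left and top coordinates plus its width and height, in pixels. Many drawing and cropping routines expect two opposite corners instead. A hypothetical helper, `face_corners`, for that conversion:

```python
from typing import Tuple


def face_corners(face_rectangle) -> Tuple[int, int, int, int]:
    """Convert a face_rectangle (left, top, width, height) to (x1, y1, x2, y2)."""
    left, top = face_rectangle.left, face_rectangle.top
    return left, top, left + face_rectangle.width, top + face_rectangle.height
```

For example, `face_corners(face.face_rectangle)` inside the loop above yields a box you could hand to an image library's crop or rectangle-drawing function.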

Keep in mind that Azure Cognitive Services also includes a dedicated Face service. It does everything the Computer Vision service does with faces and can also detect emotions, facial hair, and glasses. It can even identify people detected in images.

Other Image Analysis Features

In a perfect world, people would not upload inappropriate content to apps. In the real world, they do, and the Azure Computer Vision service can help by detecting and scoring adult, racy, and gory content in images. Use the adult feature with the analyze_image method.

```python
adult_results = client.analyze_image(ADULT_IMAGE_URL, ['adult'])
```

The results include a bool for each kind of content (adult, racy, and gory) indicating whether the image is considered to contain it. They also include a confidence score between 0.0 and 1.0 for how likely the content is to be adult, racy, or gory.

```python
adult = adult_results.adult
print('Image is{} adult with a confidence of {}'.format(
    '' if adult.is_adult_content else ' not', adult.adult_score))
```

I'm not going to demo this one. You'll just have to trust that it works.
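Even without a demo, the flags and scores lend themselves to a simple upload check. The sketch below is a hypothetical policy: the function name `is_acceptable` and the 0.5 threshold are illustrations, not recommendations, and the gory fields are read defensively in case your SDK version doesn't include them.

```python
def is_acceptable(adult_info, max_score: float = 0.5) -> bool:
    """Return True only if nothing is flagged and no score exceeds max_score.

    `adult_info` is the `adult` field of the analyze_image results.
    """
    flags = (
        adult_info.is_adult_content,
        adult_info.is_racy_content,
        # The gory fields may be missing (or None) depending on the SDK version.
        getattr(adult_info, "is_gory_content", None),
    )
    scores = (
        adult_info.adult_score,
        adult_info.racy_score,
        getattr(adult_info, "gore_score", None),
    )
    if any(flags):
        return False
    # Treat a missing score as 0.0 so it never blocks the image on its own.
    return all((score or 0.0) <= max_score for score in scores)
```

You would call it as `is_acceptable(adult_results.adult)` before accepting an upload.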

The categories feature returns categories for an image from a taxonomy of 86 categories. Examples include "building_stair" and "plant_tree". A complete list can be found at https://docs.microsoft.com/en-us/azure/cognitive-services/computer-vision/category-taxonomy.

```python
category_results = client.analyze_image(CATEGORY_IMAGE_URL, ['categories'])
```

Each entry in the categories list, if any, has the name of a category the image belongs to. As with the adult content detector, a score indicates the confidence that the category applies to the image.

```python
for category in category_results.categories:
    print('Image is in the {} category with a confidence of {}'
          .format(category.name, category.score))
```

Azure placed this picture of a panda bear in the "animal_panda" category with a confidence level of 0.99609375, which is almost certain.
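When an image matches several categories, you may only want the most confident one. A sketch with a hypothetical helper name, `top_category`, and an illustrative minimum score:

```python
from typing import Optional


def top_category(categories, min_score: float = 0.5) -> Optional[str]:
    """Return the name of the highest-scoring category at or above min_score."""
    candidates = [c for c in (categories or []) if c.score >= min_score]
    if not candidates:
        return None
    return max(candidates, key=lambda c: c.score).name
```

With the panda picture, `top_category(category_results.categories)` would return "animal_panda".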

The pattern continues for brands.

```python
brand_results = client.analyze_image(BRAND_IMAGE_URL, ['brands'])
```

Each brand in the brands list has a name and a confidence score. This time, the bounds of the brand are included in the rectangle field.

```python
for brand in brand_results.brands:
    print('The brand {} was found in the image with a confidence of {}'
          .format(brand.name, brand.confidence))
    print(brand.rectangle.as_dict())
```

Azure finds a brand in this picture.

The brand is "Coca-Cola" with a confidence of 85.7%.
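To act on brand detections, you might keep only the confident ones together with their bounding boxes. The rectangle exposes x, y, w, and h; the helper name `confident_brands` and the 0.7 cutoff are assumptions for illustration.

```python
from typing import List, Tuple


def confident_brands(brands, min_confidence: float = 0.7) -> List[Tuple[str, Tuple[int, int, int, int]]]:
    """Return (name, (x, y, w, h)) for each brand at or above min_confidence."""
    found = []
    for brand in brands or []:
        if brand.confidence >= min_confidence:
            r = brand.rectangle
            found.append((brand.name, (r.x, r.y, r.w, r.h)))
    return found
```

With this cutoff, a Coca-Cola detection at 85.7% would be kept when you call `confident_brands(brand_results.brands)`.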

The service can also detect dominant and accent colors in an image with the color feature.

```python
color_results = client.analyze_image(COLOR_IMAGE_URL, ['color'])
```

All color information is stored in the color field of the results. Its is_bw_img field is a bool indicating whether the image is black and white or full color. The dominant_colors and accent_color fields hold the dominant and accent colors, respectively. The dominant colors come from a set of twelve named colors, while the accent color is a hexadecimal value.

```python
print('The image is {}'
      .format('black and white' if color_results.color.is_bw_img else 'color'))
print('Dominant colors: {}'.format(color_results.color.dominant_colors))
print('Accent color: {}'.format(color_results.color.accent_color))
```

According to Azure, the dominant colors in this image are purple, black, and white.
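The accent color comes back as a hexadecimal string. Assuming the usual six-digit form, with or without a leading '#', a small helper (the name `hex_to_rgb` is hypothetical) converts it into red, green, and blue components, for example to theme a UI around an uploaded image:

```python
from typing import Tuple


def hex_to_rgb(hex_color: str) -> Tuple[int, int, int]:
    """Convert a hex color such as 'B9A36D' or '#B9A36D' to (R, G, B)."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"Expected six hex digits, got {hex_color!r}")
    return int(value[0:2], 16), int(value[2:4], 16), int(value[4:6], 16)
```

For instance, `hex_to_rgb('B9A36D')` returns `(185, 163, 109)`.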

Text Recognition

The Computer Vision service can also recognize text, both printed and handwritten. This feature works differently from the others because text recognition can take more time, so the read method does not return the final results. Instead, it starts an asynchronous operation on the service.

```python
read_operation = client.read(OCR_IMAGE_URL, raw=True)
```

The Operation-Location header of the response holds a URL that ends with the ID of the operation.

```python
id_ = read_operation.headers['Operation-Location'].split('/')[-1]
```

Pass the id_ to the get_read_result method to retrieve the status of the text recognition operation.

```python
read_results = client.get_read_result(id_)
print(read_results.status)
```

If the status indicates that the operation succeeded, you can go on to the next step. The analyze_result.read_results list holds one result per page; each result has a list of lines, and each line has its text.

```python
first_result = read_results.analyze_result.read_results[0]
for line in first_result.lines:
    print(line.text)
```

And from this image, Azure detected the text 'Azure Cognitive Services'.

The bounding box of each line is available in its bounding_box field. In a real app, you would poll the status of the read operation until it finishes, which can take some time depending on the amount of text to be read.
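A minimal polling sketch, assuming the SDK reports status values such as 'notStarted', 'running', 'failed', and 'succeeded' (compared case-insensitively to be safe). The helpers `operation_id`, `wait_for_read`, and `all_lines` are hypothetical names, and the interval and timeout defaults are arbitrary. `all_lines` also walks every page rather than only the first.

```python
import time
from typing import List

# Statuses after which polling stops. Assumption: the SDK reports values such
# as 'notStarted', 'running', 'failed', and 'succeeded'; the check below
# lowercases them, so other capitalizations also match.
TERMINAL_STATUSES = {"succeeded", "failed"}


def operation_id(operation_location: str) -> str:
    """Return the last path segment of the Operation-Location URL."""
    return operation_location.rstrip("/").split("/")[-1]


def wait_for_read(client, operation_location: str,
                  interval: float = 1.0, timeout: float = 30.0):
    """Poll get_read_result until the operation finishes or timeout expires."""
    id_ = operation_id(operation_location)
    deadline = time.monotonic() + timeout
    while True:
        result = client.get_read_result(id_)
        # Enum members expose .value; plain strings are used as-is.
        status = str(getattr(result.status, "value", result.status)).lower()
        if status in TERMINAL_STATUSES:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Read operation {id_} did not finish in {timeout} s")
        time.sleep(interval)


def all_lines(read_results) -> List[str]:
    """Collect the text of every line on every page of a finished result."""
    return [line.text
            for page in read_results.analyze_result.read_results
            for line in page.lines]
```

With the SDK, you would pass `read_operation.headers['Operation-Location']` to `wait_for_read`, check that the returned status is the succeeded one, and then call `all_lines` on the result.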

Conclusion

The Computer Vision service in Azure Cognitive Services adds computer vision to almost any app. It offers SDKs for several languages, or your app can call the API endpoints directly. Best of all, you need little if any knowledge of or experience with computer vision and machine learning to use it. If you can write code that calls a REST API, you're all set. It's also cost-efficient, often costing a fraction of a penny per transaction, with a free quota to experiment with. Thanks for reading!
