Computer Vision with Google Cloud Vision

Sep 3, 2020 • 10 Minute Read

Introduction

Information can be contained in many formats, and images are among the more difficult ones to interpret automatically. The field of computer vision studies how to do this, and amazing breakthroughs have been made. The downside is that training the models requires a lot of data (images) and a lot of computing power, and deploying and operationalizing them requires a lot of maintenance. Most small to medium size companies just don't have the resources to invest. At the same time, their customers are going to see computer vision apps from larger software firms. How can they compete?

The Google Cloud Vision API is the answer. Google has done the hard work of training computer vision models with lots of images using Google's vast compute resources. It has also exposed these models via REST APIs and even made SDKs available with client libraries in multiple languages so you don't have to know anything about REST, much less computer vision. You just send Google an image and request a task, such as object detection or face detection. Then Google will charge you a little money and send the results back. You can easily use the Cloud Vision API to integrate computer vision into your apps. This guide shows you how.

Setup

The Google Cloud Vision API supports several languages, including C#, Java, JavaScript, and Python. You can also call the API directly using REST or gRPC, and the Google Cloud command-line tool, gcloud, can access the Vision API as well. This guide uses the Python client library for code samples.

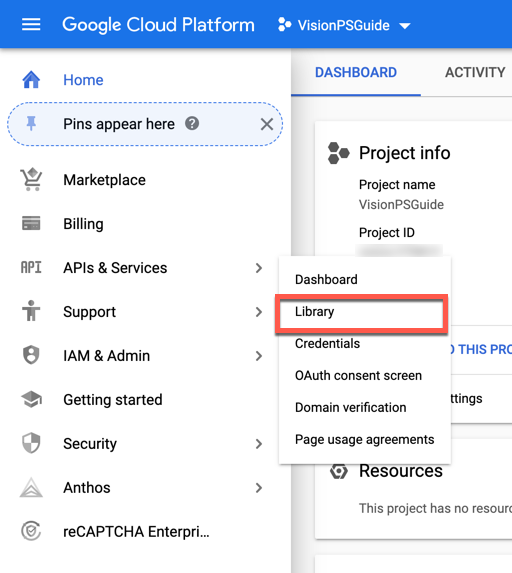

You must first enable the API for a project in the Google Cloud Console. In a browser, navigate to https://console.cloud.google.com. At the top of the page, select an existing project or create a new one. Expand the menu on the left and select APIs & Services -> Library.

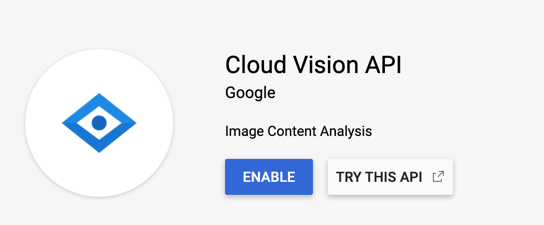

Search for Cloud Vision API. Click on the result for Cloud Vision API and click the Enable button.

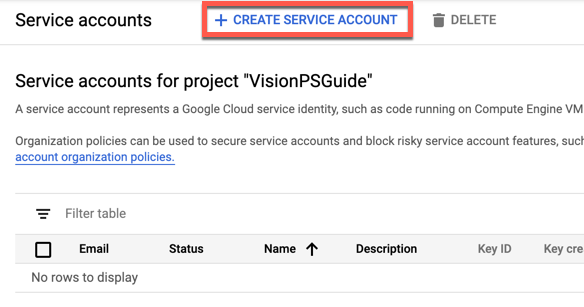

To authenticate your app with the Vision API, you'll need to create a service account. Return to the Console home page. In the menu on the left, under IAM & Admin, select Service Accounts. At the top of the page click the Create Service Account button.

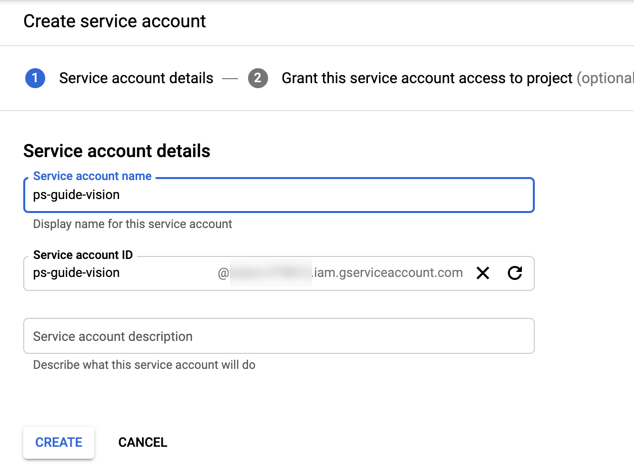

Give the service account a name and click the Create button.

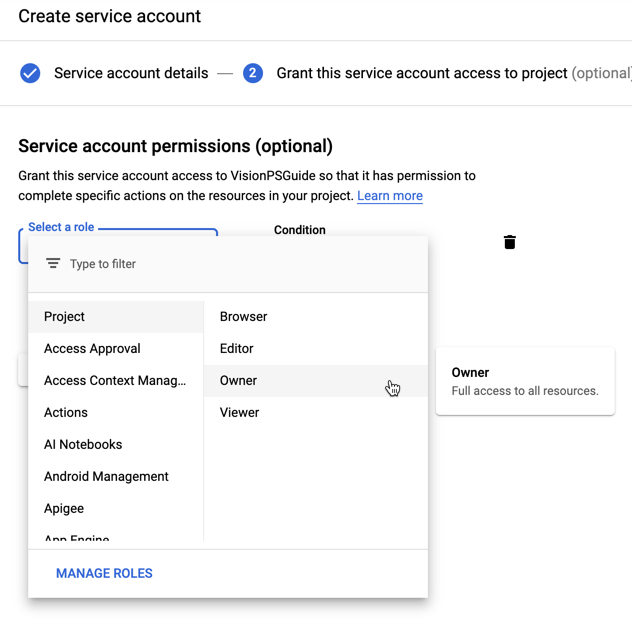

For permissions, select the Project > Owner role and click the Continue button.

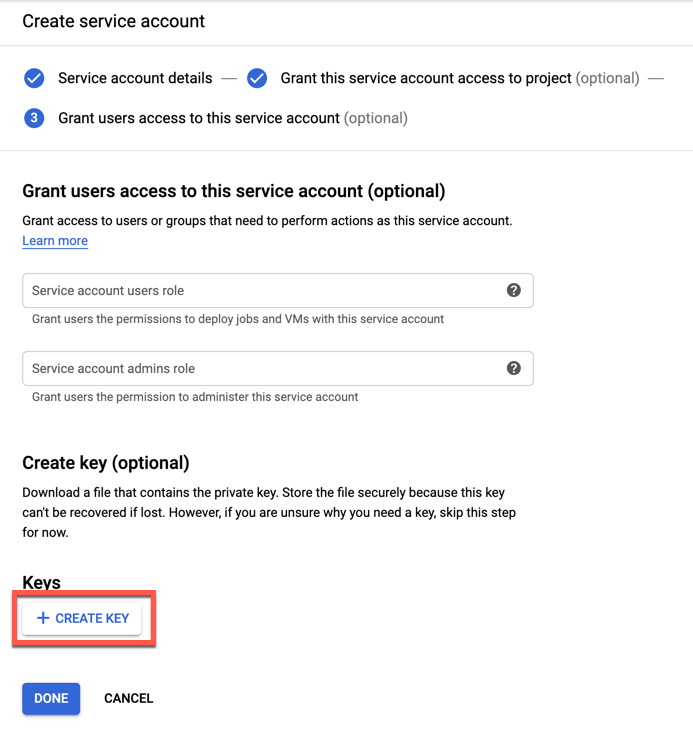

On the next screen click the Create Key button.

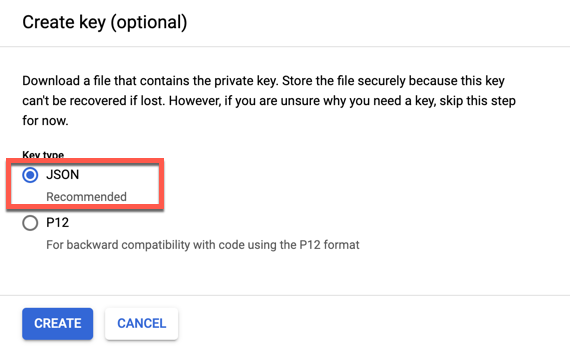

Select a key type of JSON. Click the Create button.

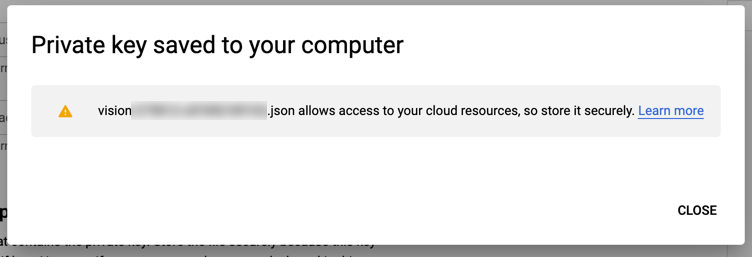

This downloads a JSON file to your computer containing a private key that your app can use to authenticate.

Store the file in a safe place. Google doesn't keep a copy of the private key, so you can't download this file again; if you lose it, you'll have to create a new key.

The client library expects an environment variable called GOOGLE_APPLICATION_CREDENTIALS containing the path to the JSON file that you downloaded. Finally, install the client library for Python using pip.

```shell
$ pip install google-cloud-vision
```
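If you'd rather set the variable from Python than from your shell, here is a minimal sketch. The helper name configure_credentials and the key path in the usage note are hypothetical; the helper simply checks that the file exists and sets GOOGLE_APPLICATION_CREDENTIALS, which needs to happen before the client is created.

```python
import os
from pathlib import Path


def configure_credentials(key_path: str) -> str:
    """Point GOOGLE_APPLICATION_CREDENTIALS at a service-account key file.

    Hypothetical helper: it only verifies that the file exists and sets the
    environment variable; the client library reads the variable later.
    """
    path = Path(key_path).expanduser().resolve()
    if not path.is_file():
        raise FileNotFoundError(f"Service-account key not found: {path}")
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = str(path)
    return str(path)
```

For example, you might call configure_credentials('~/keys/vision-demo.json') (a made-up path) at startup, before creating vision.ImageAnnotatorClient().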

Using the Cloud Vision API

The first example uses the client library to attach labels to an image based on its content. To use the API, import the vision module from the google.cloud package.

```python
from google.cloud import vision
```

The client that communicates with the API is of type ImageAnnotatorClient.

```python
client = vision.ImageAnnotatorClient()
```

Read the image file as bytes and pass the content to the Image type that the API expects.

```python
# 'path/to/image.jpg' is a placeholder for your own image file
with open('path/to/image.jpg', 'rb') as image_file:
    content = image_file.read()

image = vision.Image(content=content)
```

Now you can make a call to the API with the label_detection method.

```python
label_results = client.label_detection(image=image)
```

The labels themselves are in the label_annotations.

```python
for label in label_results.label_annotations:
    print(f'{label.description} - {label.score}')
```

The description contains the label, and the score is a confidence score between 0 and 1 indicating how sure the API is that the label applies to the image.
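In an app you'll often keep only the labels the API is reasonably sure about. Here is a minimal sketch: the function name confident_labels and the 0.8 cutoff are assumptions for illustration, not part of the library. It works on any sequence of objects with description and score attributes, such as label_results.label_annotations.

```python
from typing import Iterable, List, Tuple


def confident_labels(label_annotations: Iterable, min_score: float = 0.8) -> List[Tuple[str, float]]:
    """Return (description, score) pairs scoring at least min_score, best first.

    The 0.8 default is an arbitrary example threshold; tune it for your app.
    """
    pairs = [
        (label.description, label.score)
        for label in label_annotations
        if label.score >= min_score
    ]
    # Sort by score so the most confident label comes first
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)
```

Calling confident_labels(label_results.label_annotations) returns a plain list you can store or display without touching the API's response types again.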

Cloud Vision assigned these labels to this image.

Source: Unsplash


From these labels, the API is 95% sure there is a chair in the picture and 80% sure there is a floor.

Facial Detection

Using the same client and a different image, the Cloud Vision API can detect faces.

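```python
face_results = client.face_detection(image=image)

for face in face_results.face_annotations:
    # Print the corners of the face's bounding polygon and two likelihood values
    print([(vertex.x, vertex.y) for vertex in face.bounding_poly.vertices])
    print(face.anger_likelihood, face.headwear_likelihood)
```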

Each face has a bounding_poly that includes the coordinates of a polygon representing the bounds of the face. There are also landmarks that represent the coordinates of facial features such as eyes, ears, mouth, and nose. Finally, fields such as joy_likelihood, sorrow_likelihood, anger_likelihood, surprise_likelihood, and headwear_likelihood represent the likelihood that a face is expressing joy, sorrow, anger, or surprise, or is wearing headwear. These are integer values with five levels, from 1 for 'very unlikely' to 5 for 'very likely', plus 0 for 'unknown'.
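To turn those integers into readable names, a small lookup table is enough. This sketch hard-codes the levels listed above; the client library also exposes them as the vision.Likelihood enum, so vision.Likelihood(value).name should give the same names. The helper names likelihood_name and describe_face are hypothetical.

```python
# Index i holds the name of likelihood level i
LIKELIHOOD_NAMES = (
    "UNKNOWN",        # 0
    "VERY_UNLIKELY",  # 1
    "UNLIKELY",       # 2
    "POSSIBLE",       # 3
    "LIKELY",         # 4
    "VERY_LIKELY",    # 5
)


def likelihood_name(value: int) -> str:
    """Map a likelihood integer (0-5) to its name."""
    index = int(value)
    if not 0 <= index < len(LIKELIHOOD_NAMES):
        raise ValueError(f"Unexpected likelihood value: {value}")
    return LIKELIHOOD_NAMES[index]


def describe_face(face) -> dict:
    """Summarize the emotion and headwear likelihoods of one face by name."""
    fields = ("joy", "sorrow", "anger", "surprise", "headwear")
    return {field: likelihood_name(getattr(face, f"{field}_likelihood")) for field in fields}
```

You would call describe_face on each entry of face_results.face_annotations.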

The API is of the opinion that it is very unlikely that the face in this image is angry.

Source: Unsplash

On the other hand, the headwear likelihood is 4, or 'likely'. If the hat had not been cropped out of the picture, the likelihood might have been higher.

Explicit Content Detection

The API can also detect inappropriate content that malicious users and trolls may try to upload to your app. Google calls this SafeSearch Detection. Call the safe_search_detection method on the client.

```python
safe_search_results = client.safe_search_detection(image=image)
```

The results contain the likelihood that the image contains each of five content types:

- adult

- medical

- spoof

- violence

- racy

The values are the same levels used for facial detection.

I'm not going to demo this one. You'll have to trust that it works.
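Even without a demo, acting on the results usually means comparing each category against a threshold. Here is a minimal sketch, assuming a policy (made up for illustration) that flags any category rated LIKELY (4) or higher. The category names are the five listed above, read from the response's safe_search_annotation; the function name is hypothetical.

```python
from typing import List, Sequence

LIKELY = 4  # Same numeric level used for face likelihoods
SAFE_SEARCH_CATEGORIES = ("adult", "medical", "spoof", "violence", "racy")


def flagged_categories(annotation, threshold: int = LIKELY,
                       categories: Sequence[str] = SAFE_SEARCH_CATEGORIES) -> List[str]:
    """Return the SafeSearch categories whose likelihood meets the threshold.

    The default threshold is an example policy, not a Google recommendation.
    """
    return [name for name in categories if int(getattr(annotation, name)) >= threshold]
```

You might reject an upload when flagged_categories(safe_search_results.safe_search_annotation) is non-empty. Because UNKNOWN is 0, a category the API couldn't rate is never flagged, so you may want to handle that case separately.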

Logo Detection

The Cloud Vision API models have been trained to recognize popular brand logos. Detected logos are returned in logo_annotations, and each logo has a description with the predicted brand name and a score representing the confidence of that prediction.

```python
logo_results = client.logo_detection(image=image)

for logo in logo_results.logo_annotations:
    print(logo.description, logo.score)
```

What brand did the API find in this image?

Source: Unsplash

It correctly identified Coca-Cola. Notice that the bottle cap is angled and the reflection of the light obscures part of the logo, so less-than-perfect photography does not confuse the Vision API. It does, however, lower the confidence score.

Text Detection

Recognizing text, handwritten or printed, with the Google Cloud Vision API takes a little more work. Calling the API is still a single method, document_text_detection, but the results are nested several levels deep.

```python
ocr_results = client.document_text_detection(image=image)
```

To get to the detected text, drill into the response. The full_text_annotation field has a list of pages. Each page has a list of blocks, each block has a list of paragraphs, and each paragraph has a list of words. Each word has a list of symbols, and each symbol, which is essentially a single character, has a text value. Joining the text of the symbols assembles the word.

```python
for page in ocr_results.full_text_annotation.pages:
    for block in page.blocks:
        for paragraph in block.paragraphs:
            for word in paragraph.words:
                # Each symbol is one character; join them to rebuild the word
                print(''.join([symbol.text for symbol in word.symbols]))
```
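Printing one word per line loses the grouping into paragraphs. This sketch walks the same page, block, paragraph, word, and symbol hierarchy and returns one string per paragraph. The function name is hypothetical, and joining words with a single space is a simplification: the API also reports detected breaks between symbols, which this ignores.

```python
from typing import List


def paragraph_texts(full_text_annotation) -> List[str]:
    """Rebuild each paragraph's text from its words and symbols."""
    texts = []
    for page in full_text_annotation.pages:
        for block in page.blocks:
            for paragraph in block.paragraphs:
                # Each word is the concatenation of its symbols (characters)
                words = ["".join(symbol.text for symbol in word.symbols)
                         for word in paragraph.words]
                texts.append(" ".join(words))
    return texts
```

With a real response you would call paragraph_texts(ocr_results.full_text_annotation).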

This image shows text in different styles.

Source: Unsplash

The API correctly detected the text as 'DANGER HARD HAT PROTECTION REQUIRED'. As with many of the previous examples, each block, paragraph, word, and symbol also has a bounding_box field with the bounds of that piece of text and a confidence field with its confidence score.
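A bounding_box (or, for faces, a bounding_poly) holds a list of vertices with x and y coordinates. For drawing or cropping, it is often handier to reduce those to a rectangle. Here is a minimal sketch; the helper name and the (left, top, width, height) return convention are assumptions.

```python
from typing import Iterable, Tuple


def bounding_rect(vertices: Iterable) -> Tuple[int, int, int, int]:
    """Return (left, top, width, height) of the axis-aligned box around vertices."""
    points = [(vertex.x, vertex.y) for vertex in vertices]
    if not points:
        raise ValueError("bounding box has no vertices")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    left, top = min(xs), min(ys)
    return left, top, max(xs) - left, max(ys) - top
```

You could pass it word.bounding_box.vertices or face.bounding_poly.vertices. When the text is at an angle, the polygon is rotated, and the rectangle returned here encloses the whole rotated shape.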

Using Remote Images

Each of the examples above could use a remote image instead of a local one. This requires a slight change to the setup: create an instance of Image without any content, then set its image_uri to the location of the remote image.

```python
from google.cloud import vision

image = vision.Image()
image.source.image_uri = 'REMOTE_IMAGE_URL'
```

Now you can use the image the same as in the previous examples.
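If your app accepts both local files and URLs, a small helper can choose between the two setups. This is a sketch under a few assumptions: the helper names are hypothetical, http(s) and gs:// locations are treated as remote, and the returned dictionary is meant to be unpacked into vision.Image, which this assumes accepts a nested dict for source as it does for other message fields. It also checks the response's error field, because when the API can't process an image (for example, a remote URL it can't fetch), the problem is reported there rather than raised as an exception.

```python
from pathlib import Path
from urllib.parse import urlparse

# Locations with these URL schemes are sent to the API by reference
REMOTE_SCHEMES = ("http", "https", "gs")


def image_fields(location: str) -> dict:
    """Build keyword arguments for vision.Image from a local path or a URL."""
    if urlparse(location).scheme in REMOTE_SCHEMES:
        return {"source": {"image_uri": location}}
    # Anything else is treated as a local file and sent as raw bytes
    return {"content": Path(location).read_bytes()}


def raise_for_error(response):
    """Raise if the response carries an error message; otherwise return it."""
    if response.error.message:
        raise RuntimeError(f"Vision API error: {response.error.message}")
    return response
```

Usage might look like image = vision.Image(**image_fields('https://example.com/photo.jpg')) (a placeholder URL), followed by labels = raise_for_error(client.label_detection(image=image)).label_annotations.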

Conclusion

The Google Cloud Vision API lets you bring the power of computer vision to your apps, and you don't need to know anything about computer vision to use it. All you do is call the API, directly or through a client library, and consume the data that Google sends back. For most small and medium businesses, this is likely to be more cost-effective and accurate than any model they could train themselves. And since it's from Google, your app is piggybacking on Google's years of experience with machine learning. It's free to get started. Thanks for reading!
