- Course

Beginning Data Exploration and Analysis with Apache Spark

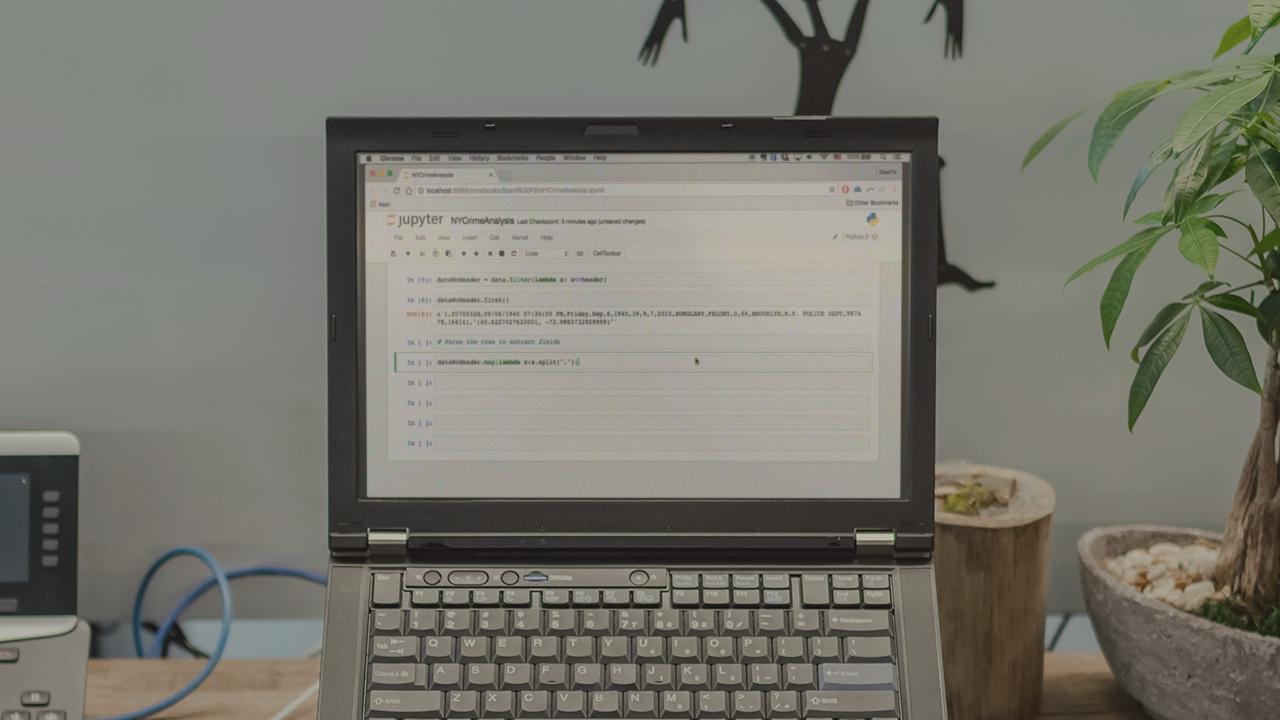

By a commonly cited estimate, about 80% of a data scientist's job is data preparation. This course is all about data preparation, i.e., cleaning, transforming, and summarizing data using Spark.

This course is included in the libraries shown below:

- Data

What you'll learn

Data preparation is a staple task for any data professional, whether you just want to explore data or develop sophisticated machine learning models. Spark is an engine that makes this intuitive, using functional constructs that abstract away much of the messiness of working with large datasets. In this course, Beginning Data Exploration and Analysis with Apache Spark, you'll work step by step through exploratory data analysis and data munging with Spark. First, you'll explore RDDs (Resilient Distributed Datasets) and the functional constructs that make processing in Spark intuitive. Next, you'll discover how to transform and clean unstructured data. Finally, you'll learn how to summarize data along dimensions and how to model relationships to build co-occurrence networks. By the end of this course, you'll be able to use Spark to transform data in whatever way your analysis requires.
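To make the functional style described above concrete, here is a minimal plain-Python sketch (not Spark code, and not taken from the course) of the kind of pipeline the outline mentions: clean unstructured lines, summarize along one dimension, and count co-occurring pairs as the edge weights of a co-occurrence network. The sample data and the `clean` and `reduce_by_key` helpers are hypothetical; `reduce_by_key` only imitates the behavior of Spark's `RDD.reduceByKey` so the example runs without a Spark installation.

```python
from itertools import combinations
from operator import add

# Hypothetical raw input: unstructured, inconsistently formatted lines
# (stand-ins for the text records one might load into an RDD).
raw_lines = [
    "Spark, Python,  SQL",
    "python;spark",
    "",
    "SQL , Scala, spark",
]


def clean(line):
    """Split on commas or semicolons, trim whitespace, lowercase, dedupe, drop empties.

    Tokens are sorted so every unordered pair later gets one canonical key.
    """
    tokens = line.replace(";", ",").split(",")
    return sorted({t.strip().lower() for t in tokens if t.strip()})


def reduce_by_key(pairs, func):
    """Plain-Python stand-in for RDD.reduceByKey: merge values per key with func."""
    out = {}
    for key, value in pairs:
        out[key] = func(out[key], value) if key in out else value
    return out


# Transform and clean: analogue of rdd.map(clean).filter(bool).
records = list(filter(None, map(clean, raw_lines)))

# Summarize along one dimension: how often each item appears.
item_counts = reduce_by_key(((item, 1) for rec in records for item in rec), add)

# Co-occurrence network: weight of each edge = number of records
# in which both items appear together.
edge_weights = reduce_by_key(
    ((pair, 1) for rec in records for pair in combinations(rec, 2)), add
)

print(item_counts)
print(edge_weights)
```

In PySpark, the same steps would map roughly onto `sc.textFile(path).map(clean).filter(bool)` followed by `flatMap(...)` and `reduceByKey(add)`, where `sc` is a `SparkContext` and `path` is a placeholder. Sorting tokens inside `clean` is a deliberate choice: it guarantees that `("python", "spark")` and `("spark", "python")` are counted as the same edge.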