- Course

Exploring the Apache Spark Structured Streaming API for Processing Streaming Data

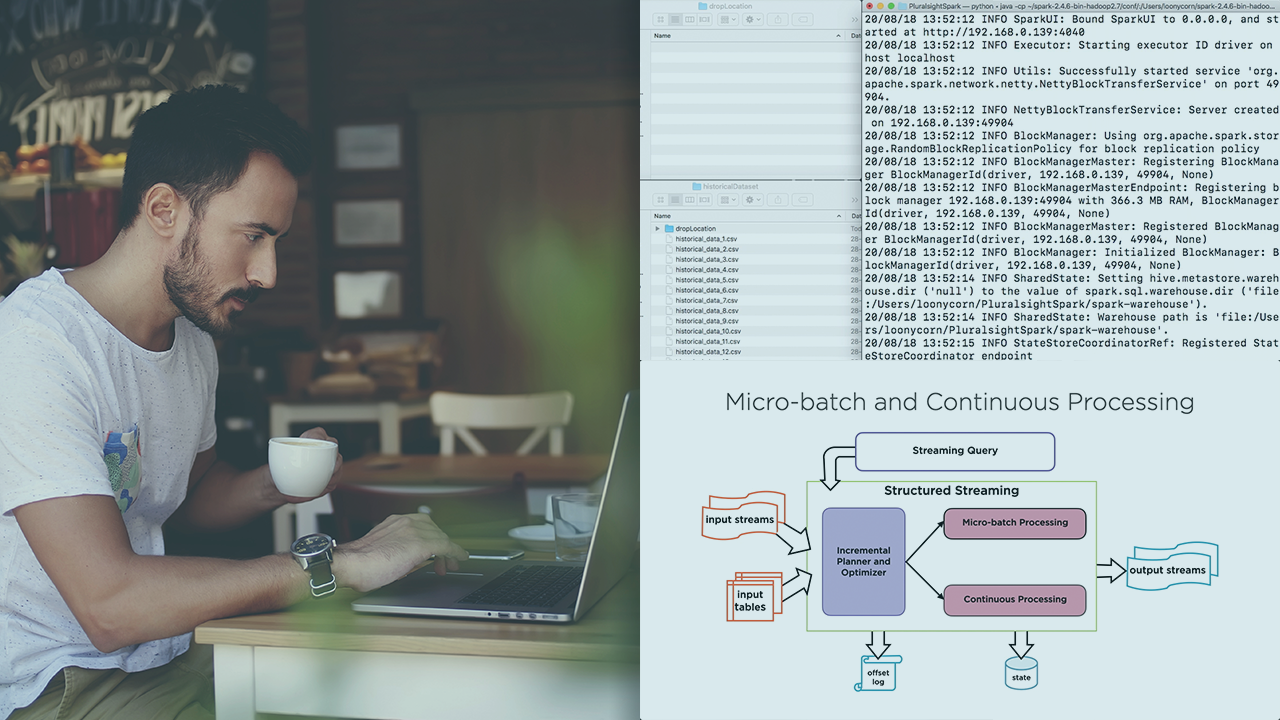

Structured Streaming is the scalable, fault-tolerant stream processing engine in Apache Spark 2.x. DataFrames in Spark 2.x support unbounded data, effectively unifying batch and streaming applications.

This course is included in the libraries shown below:

- Data

What you'll learn

Stream processing applications work with continuously updated data and react to changes in real time. In this course, Exploring the Apache Spark Structured Streaming API for Processing Streaming Data, you'll focus on using the tabular DataFrame API and Spark SQL to work with streaming, unbounded datasets through the same APIs that work with bounded batch data.

First, you’ll explore Spark’s support for different data sources and data sinks, learn the use case for each, and understand the fault-tolerance semantics they offer. You’ll write data out to the console and file sinks, and customize your write logic with the foreach and foreachBatch sinks.
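As a rough illustration of the foreachBatch sink mentioned above, here is a minimal PySpark sketch, not taken from the course. It assumes Spark 2.4 or later with `pyspark` installed. The names `batch_log`, `handle_batch`, and `run_demo` are hypothetical, and the built-in `rate` source stands in for a real input stream.

```python
# Hypothetical in-memory record of what each micro-batch contained.
batch_log = []


def handle_batch(batch_df, batch_id):
    """Custom write logic: called once per micro-batch by foreachBatch."""
    rows = batch_df.collect()  # fine for a demo; avoid on large batches
    batch_log.append((batch_id, len(rows)))


def run_demo(seconds=10):
    """Run a short local streaming query (assumes pyspark is installed)."""
    # Imported lazily so handle_batch can be exercised without Spark.
    from pyspark.sql import SparkSession

    spark = (SparkSession.builder
             .master("local[2]")
             .appName("foreach-batch-sketch")
             .getOrCreate())

    # The "rate" source generates rows with `timestamp` and `value` columns.
    stream = (spark.readStream
              .format("rate")
              .option("rowsPerSecond", 5)
              .load())

    # Each micro-batch is handed to handle_batch as a regular DataFrame.
    query = stream.writeStream.foreachBatch(handle_batch).start()
    query.awaitTermination(seconds)  # wait up to `seconds`, then shut down
    query.stop()
    spark.stop()
    return batch_log
```

Calling `run_demo()` on a machine with Spark available should return a list of `(batch_id, row_count)` pairs. Inside the handler, the micro-batch is an ordinary DataFrame, so any batch writer can be used in place of the in-memory list.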

Next, you'll see how to transform streaming data using operations such as selections, projections, grouping, and aggregations with both the DataFrame API and Spark SQL. You'll also learn how to perform windowing operations on streams using tumbling and sliding windows. You'll then explore relational join operations between streaming and batch sources and learn the limitations on streaming joins in Spark.
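To make the difference between tumbling and sliding windows concrete, the following plain-Python sketch shows which windows an event timestamp falls into. It is not Spark's API (in Spark this is done with the `window` function on a timestamp column). The helper names are hypothetical, and timestamps are assumed to be integer seconds with windows that are start-inclusive and end-exclusive.

```python
def tumbling_window(ts, size):
    """Return the single [start, end) window of length `size` containing ts."""
    start = ts - (ts % size)
    return (start, start + size)


def sliding_windows(ts, size, slide):
    """Return every [start, end) window of length `size`, with starts
    spaced `slide` apart, that contains ts."""
    windows = []
    start = ts - (ts % slide)   # latest window start at or before ts
    while start > ts - size:    # window still covers ts
        windows.append((start, start + size))
        start -= slide
    return sorted(windows)


# An event at t=125s with 60-second windows:
print(tumbling_window(125, 60))      # (120, 180)
print(sliding_windows(125, 60, 30))  # [(90, 150), (120, 180)]
```

A tumbling window assigns each event to exactly one window, while a sliding window with a slide shorter than its size assigns the same event to several overlapping windows, so the event is counted once in each.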

Finally, you'll explore Spark’s support for managing and monitoring streaming queries using the Spark Web UI and the Spark History Server.

When you're finished with this course, you'll have the skills and knowledge to work with different sources and sinks for your streaming data, apply a range of processing operations on input streams, and perform windowing and join operations on streams.

Exploring the Apache Spark Structured Streaming API for Processing Streaming Data

- Version Check | 15s
- Prerequisites and Course Outline | 2m 4s
- Streaming DataFrames in Spark 2.x | 5m 33s
- Sources and Sinks | 6m 43s
- Demo: Environment Setup | 1m 48s
- Demo: Console Sink | 7m 43s
- Demo: File Sink with CSV and JSON Files | 7m 44s
- Demo: Foreach Sink | 5m 31s
- Demo: Foreach Batch Sink | 6m 12s