- Course

Building Features from Numeric Data

This course exhaustively covers data preprocessing techniques and transforms available in scikit-learn, allowing the construction of highly optimized features that are scaled, normalized and transformed in mathematically sound ways to fully harness the power of machine learning techniques.

- Course

Building Features from Numeric Data

This course exhaustively covers data preprocessing techniques and transforms available in scikit-learn, allowing the construction of highly optimized features that are scaled, normalized and transformed in mathematically sound ways to fully harness the power of machine learning techniques.

Get started today

Access this course and other top-rated tech content with one of our business plans.

Try this course for free

Access this course and other top-rated tech content with one of our individual plans.

This course is included in the libraries shown below:

- AI

- Data

What you'll learn

The quality of preprocessing that numeric data is subjected to is an important determinant of the results of machine learning models built using that data. With smart, optimized data pre-processing, you can significantly speed up model training and validation, saving both time and money, as well as greatly improve model performance in prediction.

In this course, Building Features from Numeric Data, you will gain the ability to design and implement effective, mathematically sound data pre-processing pipelines.

First, you will learn the importance of normalization, standardization and scaling, and understand the intuition and mechanics of tweaking the central tendency as well as dispersion of a data feature.

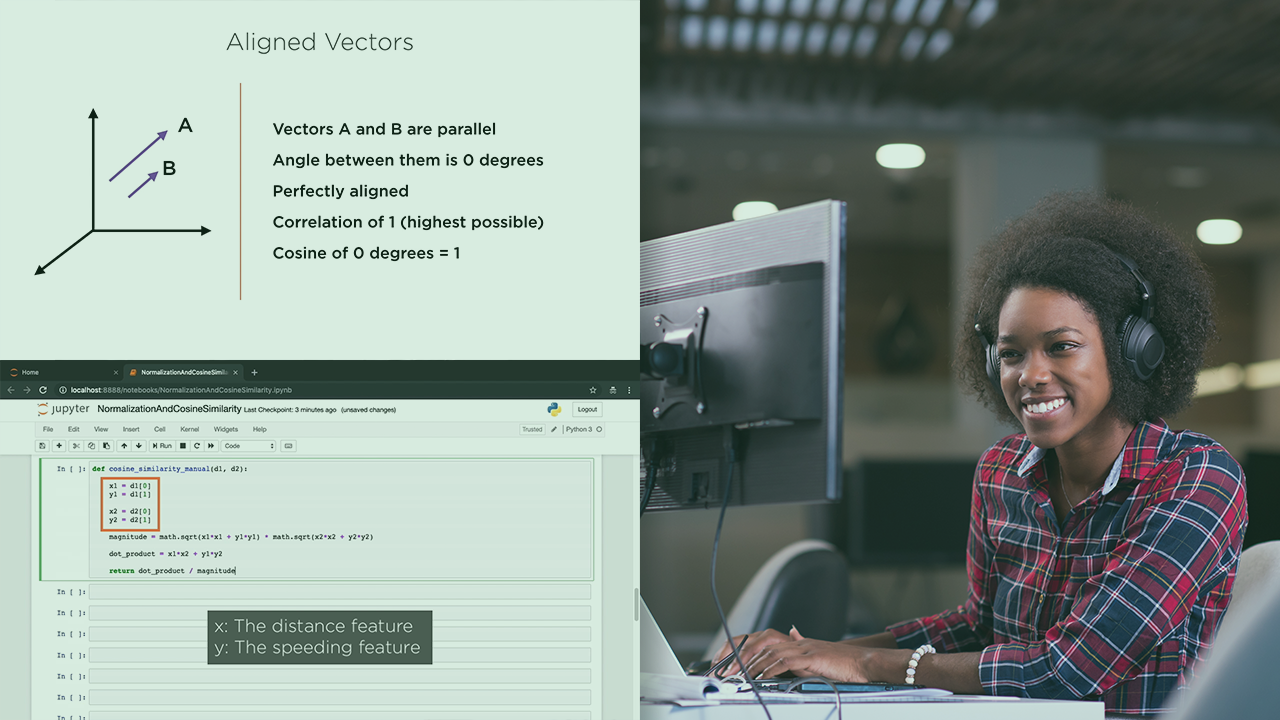

Next, you will discover how to identify and deal with outliers and possibly anomalous data. You will then learn important techniques for scaling and normalization. Such techniques, notably normalization using the L1-norm, L2-norm and Max norm, seek to transform feature vectors to have uniform magnitude. Such techniques find wide usage in ML model building - for instance in computing the cosine similarity of document vectors, and in transforming images before techniques such as convolutional neural networks are applied to them.

You will then move from normalization and standardization to scaling and transforming data. Such transformations include quantization as well as the construction of custom transformers for bespoke use cases. Finally, you will explore how to implement log and power transformations. You will round out the course by comparing the results of three important transformations - the Yeo-Johnson transform, the Box-Cox transform and the quantile transformation - in converting data with non-normal characteristics, such as chi-squared or lognormal data into the familiar bell curve shape that many models work best with.

When you’re finished with this course, you will have the skills and knowledge of data preprocessing and transformation needed to get the best out of your machine learning models.

Building Features from Numeric Data

-

Version Check | 16s

-

Module Overview | 55s

-

Prerequisites and Course Outline | 1m 44s

-

Scaling and Standardization | 4m 9s

-

Mean, Variance, and Standard Deviation | 3m 49s

-

Understanding Variance | 3m 44s

-

Demo: Calculating Mean, Variance, and Standard Deviation | 6m 57s

-

Demo: Box Plot Visualization and Data Standardization | 6m 33s

-

Standard Scaler | 4m 13s

-

Demo: Standardize Data Using the Scale Function | 5m 29s

-

Demo: Standardize Data Using the Standard Scalar Estimator and Apply Bessels Correction | 3m 53s

-

Robust Scaler | 3m 54s

-

Demo: Scaling Data Using the Robust Scaler | 7m 23s

-

Summary | 1m 19s