- Course

Conceptualizing the Processing Model for the GCP Dataflow Service

Dataflow represents a fundamentally different approach to Big Data processing than computing engines such as Spark. Dataflow is serverless and fully managed, and it runs pipelines written with the Apache Beam APIs.

This course is included in the libraries shown below:

- Cloud

- Data

What you'll learn

Dataflow allows developers to process and transform data using intuitive APIs. Dataflow runs pipelines written with the Apache Beam programming model, which unifies batch and stream processing of data. In this course, Conceptualizing the Processing Model for the GCP Dataflow Service, you will explore the capabilities of Cloud Dataflow and its programming model.
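To make "unifies batch and stream processing" concrete, here is a toy sketch in plain Python (not the Beam SDK). A single counting function handles a bounded collection by placing every element in one global window, and it handles an event stream by assigning each element to a fixed 60-second window, roughly the way fixed windowing assigns elements in Beam. The function names and sample events are hypothetical. The sketch also ignores watermarks, triggers, and late data, all of which a real streaming runner must handle.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

# An event is a (timestamp_in_seconds, key) pair; the sample data below is made up.
Event = Tuple[int, str]

def window_start(ts: int, size: Optional[int]) -> int:
    """Return the start of the fixed window containing ts, or 0 for the single global window."""
    if size is None:
        return 0  # bounded (batch) input: every element shares one global window
    return ts - (ts % size)  # e.g. with size=60, ts=61 falls in the window starting at 60

def count_per_window(events: Iterable[Event], size: Optional[int] = None) -> Dict[Tuple[int, str], int]:
    """Count events per (window, key); identical logic for batch (size=None) and streaming."""
    counts: Counter = Counter()
    for ts, key in events:
        counts[(window_start(ts, size), key)] += 1
    return dict(counts)

events = [(5, "click"), (42, "view"), (61, "click"), (119, "click"), (130, "view")]
print(count_per_window(events))           # {(0, 'click'): 3, (0, 'view'): 2}
print(count_per_window(events, size=60))  # {(0, 'click'): 1, (0, 'view'): 1, (60, 'click'): 2, (120, 'view'): 1}
```

The counting logic never changes. Only the window assignment does, which is the sense in which one programming model can serve both bounded and unbounded data.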

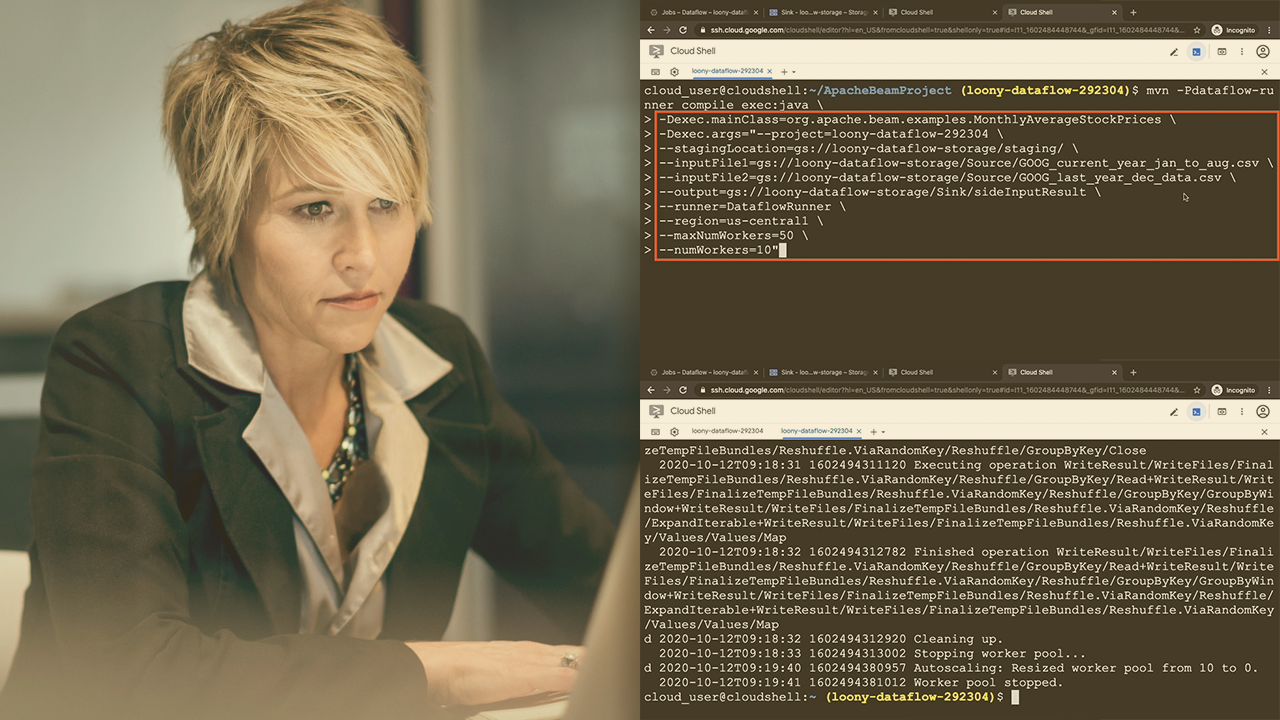

First, you will work with an example Apache Beam pipeline performing stream processing operations and see how it can be executed using the Cloud Dataflow runner.
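The same pipeline code can target different runners. Beam's Python word count example parses its own flags with `argparse.ArgumentParser.parse_known_args` and hands the leftover arguments to `PipelineOptions`. As a result, switching from local execution to Dataflow amounts to passing `--runner DataflowRunner` together with project, region, and staging flags. The minimal sketch below covers only that argument split, so it runs without the Beam SDK installed. The `--input`/`--output` flag names follow that example. The project ID and bucket names are placeholders.

```python
import argparse
from typing import List, Optional, Sequence, Tuple

def split_args(argv: Optional[Sequence[str]] = None) -> Tuple[argparse.Namespace, List[str]]:
    """Separate the pipeline's own flags from the flags meant for the runner."""
    parser = argparse.ArgumentParser()
    # Application-specific options (illustrative names, modeled on Beam's word count).
    parser.add_argument("--input", required=True, help="File pattern to read")
    parser.add_argument("--output", required=True, help="Output path prefix")
    # parse_known_args returns unrecognized flags in a list instead of raising an error.
    known, runner_args = parser.parse_known_args(argv)
    return known, runner_args

known, runner_args = split_args([
    "--input", "gs://dataflow-samples/shakespeare/kinglear.txt",
    "--output", "gs://my-bucket/results/counts",   # hypothetical bucket
    "--runner", "DataflowRunner",
    "--project", "my-project",                     # hypothetical project ID
    "--region", "us-central1",
    "--temp_location", "gs://my-bucket/tmp",       # hypothetical bucket
])
print(known.input, known.output)
print(runner_args)
```

In an actual Beam program, `runner_args` would then be passed to `apache_beam.options.pipeline_options.PipelineOptions(runner_args)` when constructing the pipeline. Dropping `--runner DataflowRunner` would fall back to the default local runner.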

Next, you will learn about the basic optimizations that Dataflow applies to your execution graph, such as fusion and combine optimizations.
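Fusion merges consecutive element-wise steps into a single stage so that intermediate collections need not be materialized. The combine optimization (often called combiner lifting) performs partial aggregation on each worker before the shuffle. The toy model below, in plain Python, imitates only these two ideas on a word count. `run_unfused`, `fuse`, and `combine_with_lifting` are hypothetical names and say nothing about how the Dataflow service actually implements its optimizer.

```python
from collections import Counter
from typing import Any, Callable, Dict, Iterable, List, Tuple

# A step maps one element to zero or more outputs, like a Beam ParDo/FlatMap.
Step = Callable[[Any], Iterable[Any]]

def run_unfused(steps: List[Step], data: List[Any]) -> List[Any]:
    """Run each step over the whole collection, materializing every intermediate result."""
    for step in steps:
        data = [out for elem in data for out in step(elem)]
    return data

def fuse(steps: List[Step]) -> Step:
    """Compose consecutive steps into one stage that pushes each element through all of them."""
    def fused(elem: Any) -> List[Any]:
        outputs = [elem]
        for step in steps:
            outputs = [out for e in outputs for out in step(e)]
        return outputs
    return fused

def combine_with_lifting(shards: List[List[Tuple[str, int]]]) -> Tuple[Dict[str, int], int]:
    """Sum (key, count) pairs per shard first, then merge the partial sums after the 'shuffle'."""
    partials = []
    for shard in shards:
        partial: Counter = Counter()
        for key, n in shard:
            partial[key] += n  # local (pre-shuffle) combine on each worker
        partials.append(partial)
    shuffled = sum(len(p) for p in partials)  # records that would cross the shuffle
    total = sum(partials, Counter())          # final merge of the partial results
    return dict(total), shuffled

steps: List[Step] = [
    lambda line: line.lower().split(),                   # split into words
    lambda w: [w.strip(".,")] if w.strip(".,") else [],  # drop punctuation
    lambda w: [(w, 1)],                                  # pair each word with 1
]
lines = ["The cat sat.", "The cat ran, the dog sat."]

unfused = run_unfused(steps, lines)
fused_stage = fuse(steps)
fused = [pair for line in lines for pair in fused_stage(line)]
assert fused == unfused  # fusion changes how work is executed, not the result

# Treat each line's output as one worker's shard.
shards = [fused_stage(line) for line in lines]
counts, shuffled = combine_with_lifting(shards)
print(counts)                        # {'the': 3, 'cat': 2, 'sat': 2, 'ran': 1, 'dog': 1}
print(shuffled, "vs", len(unfused))  # 8 vs 9
```

Even on this tiny input, pre-aggregation sends 8 records through the shuffle instead of 9. With real data, where keys repeat heavily within each worker's input, the savings are much larger.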

Finally, you will use built-in templates to explore Dataflow pipelines without writing any code at all. You will also see how to create a custom template to execute your own processing jobs.
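Launching a Google-provided template requires no pipeline code, only a command. The sketch below assembles the argument list for `gcloud dataflow jobs run` using the Word Count template at `gs://dataflow-templates/latest/Word_Count`, which takes `inputFile` and `output` parameters. The job name, region, and bucket paths are placeholders, and the helper `template_run_command` is hypothetical. The helper only builds and prints the command, so it runs without gcloud installed. It assumes that parameter values contain no commas, because they are joined into a single comma-separated `--parameters` value.

```python
import shlex
from typing import Dict, List

def template_run_command(job_name: str, template_path: str, region: str,
                         staging_location: str, parameters: Dict[str, str]) -> List[str]:
    """Build the argv for launching a Dataflow template with `gcloud dataflow jobs run`."""
    params = ",".join(f"{k}={v}" for k, v in parameters.items())
    return [
        "gcloud", "dataflow", "jobs", "run", job_name,
        "--gcs-location", template_path,  # Cloud Storage path of the template
        "--region", region,
        "--staging-location", staging_location,
        "--parameters", params,           # template-specific runtime parameters
    ]

cmd = template_run_command(
    job_name="wordcount-from-template",
    template_path="gs://dataflow-templates/latest/Word_Count",
    region="us-central1",
    staging_location="gs://my-bucket/temp",  # hypothetical bucket
    parameters={
        "inputFile": "gs://dataflow-samples/shakespeare/kinglear.txt",
        "output": "gs://my-bucket/output/counts",  # hypothetical bucket
    },
)
print(shlex.join(cmd))
```

To launch the job, run the printed command or pass `cmd` to `subprocess.run(cmd, check=True)`. Either way, you need an authenticated Google Cloud SDK and an existing bucket.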

When you are finished with this course, you will have the skills and knowledge to design Dataflow pipelines using Apache Beam SDKs, integrate these pipelines with other Google services, and run these pipelines on the Google Cloud Platform.

Conceptualizing the Processing Model for the GCP Dataflow Service

- Version Check | 15s

- Prerequisites and Course Outline | 2m 40s

- Overview of Apache Beam | 3m 52s

- Introducing Cloud Dataflow | 4m 50s

- Executing Pipelines on Dataflow | 6m 15s

- Demo: Enabling APIs | 2m 32s

- Demo: Setting up a Service Account | 4m 56s

- Demo: Sample Word Count Application | 7m 3s

- Demo: Executing the Word Count Application on the Beam Runner | 2m 8s

- Demo: Creating Cloud Storage Buckets | 3m 9s

- Demo: Implementing a Beam Pipeline to Run on Dataflow | 3m 43s

- Demo: Running a Beam Pipeline on Cloud Dataflow | 4m 20s

- Demo: Custom Pipeline Options | 3m 59s

- Dataflow Pricing | 4m 22s