- Course

Using the Speech Recognition and Synthesis .NET APIs

This is an introductory course on how to utilize the speech recognition and synthesis APIs in the .NET framework.

- Course

Using the Speech Recognition and Synthesis .NET APIs

This is an introductory course on how to utilize the speech recognition and synthesis APIs in the .NET framework.

Get started today

Access this course and other top-rated tech content with one of our business plans.

Try this course for free

Access this course and other top-rated tech content with one of our individual plans.

This course is included in the libraries shown below:

- Core Tech

What you'll learn

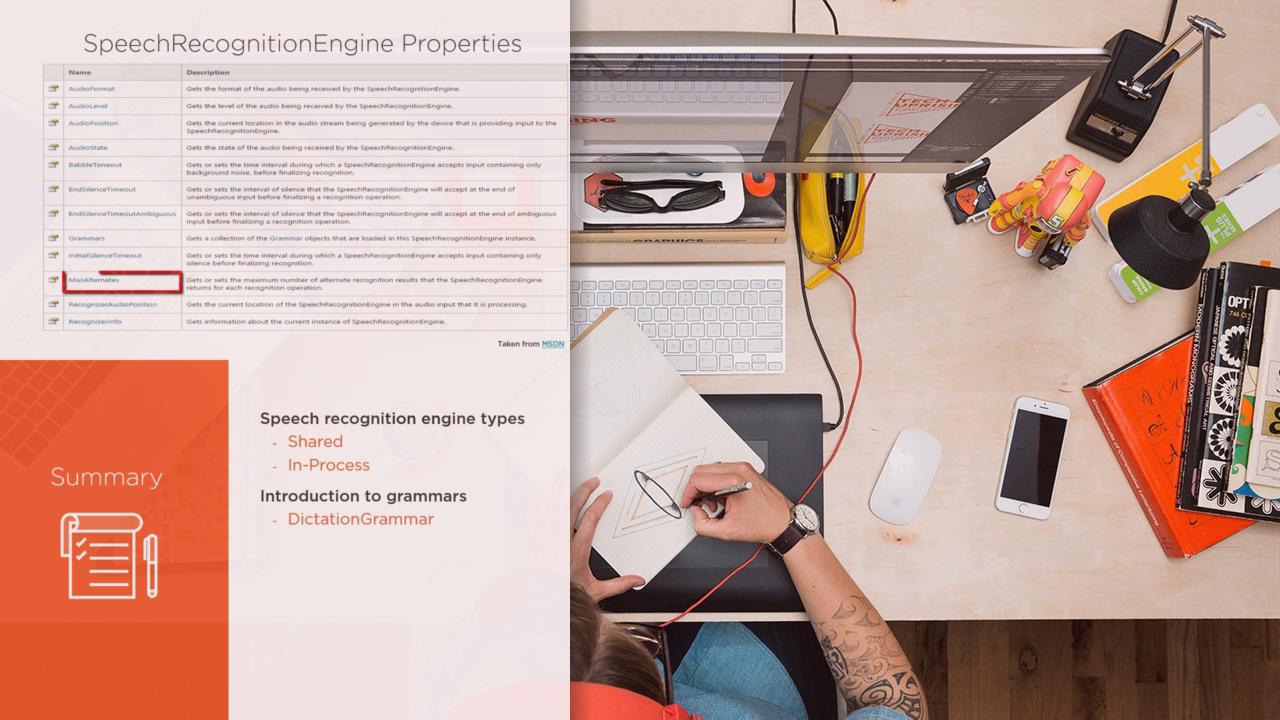

This course introduces the Speech Recognition and Synthesis APIs provided by the .NET framework, which will allow developers to bring new accessibility experiences to their .NET applications. This course will guide developers from getting started with the recognition and synthesis concepts to actually implementing them in C# using a simple WPF application. XML standards, such as Speech Recognition Grammar Specification (SRGS) and Speech Synthesis Markup Language (SSML), will be covered in-depth, as well.