- Course

Enforcing Data Contracts with Kafka Schema Registry

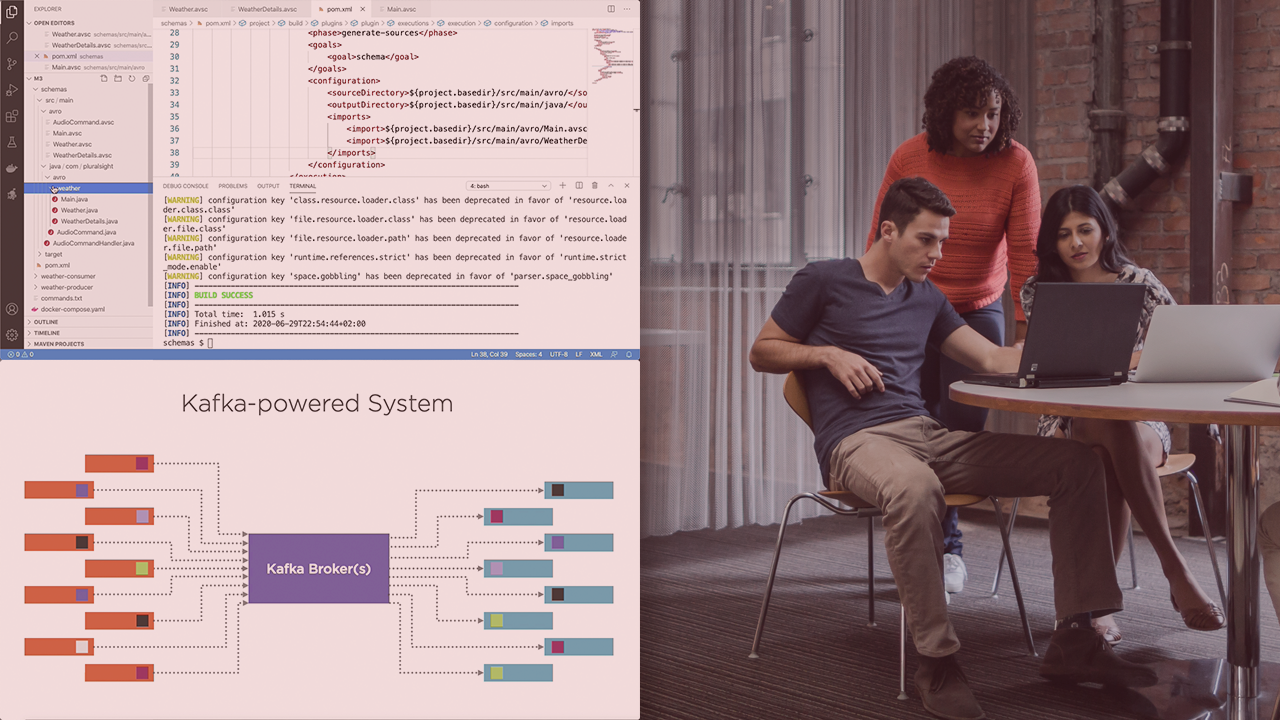

Schema Registry is a straightforward way to introduce data governance to your Kafka topics. This course will teach you how to use Schema Registry to enforce and manage data contracts in your Apache Kafka-powered system.

This course is included in the libraries shown below:

- Data

What you'll learn

In a world of data, governance can become chaotic very quickly. In this course, Enforcing Data Contracts with Kafka Schema Registry, you’ll learn to enforce and manage data contracts in your Apache Kafka-powered system. First, you’ll explore how serialization works and why Avro is such a strong option. Next, you’ll discover how to manage data contracts using Schema Registry. Finally, you’ll learn how to use other serialization formats with Apache Kafka. When you’re finished with this course, you’ll have the data governance skills and Schema Registry knowledge needed to enforce and manage data contracts in your Apache Kafka setup.