- Course

Exploring the Apache Flink API for Processing Streaming Data

Flink is a stateful, fault-tolerant, large-scale system that processes both bounded and unbounded datasets using the same underlying stream-first architecture.

This course is included in the libraries shown below:

- Data

What you'll learn

Apache Flink is built on the concept of a stream-first architecture, in which the stream is the source of truth. In this course, Exploring the Apache Flink API for Processing Streaming Data, you will perform custom transformations and windowing operations on streaming data.

First, you will explore the stateless and stateful transformations that Flink supports for data streams, such as the map, flatMap, and filter transformations.
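Of the transformations named above, map, flatMap, and filter are stateless: each output depends only on the current element. Their element-wise contracts match the `java.util.stream` methods of the same names, so the sketch below illustrates them with the JDK alone on a bounded list rather than with Flink's `DataStream` API (which needs the Flink dependencies). The class and method names are hypothetical. In Flink itself, a `FlatMapFunction` emits its outputs through a `Collector` instead of returning a stream, but the one-input, zero-or-more-outputs contract is the same.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Hypothetical plain-JDK analogy for Flink's stateless transformations.
// Uses java.util.stream on a bounded list; this is NOT the Flink DataStream API.
class StatelessTransformsSketch {

    // flatMap: each input line yields zero or more words (an empty line yields none).
    static List<String> splitWords(List<String> lines) {
        return lines.stream()
                .flatMap(line -> Arrays.stream(line.split("\\s+"))
                        .filter(token -> !token.isEmpty())) // drop "" produced by empty or indented lines
                .collect(Collectors.toList());
    }

    // map: exactly one output element per input element.
    static List<String> toUpper(List<String> words) {
        return words.stream()
                .map(w -> w.toUpperCase(Locale.ROOT))
                .collect(Collectors.toList());
    }

    // filter: each input element is either kept unchanged or dropped.
    static List<String> keepLong(List<String> words, int minLength) {
        return words.stream()
                .filter(w -> w.length() >= minLength)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("flink processes streams", "", "  state and timers");
        List<String> words = splitWords(lines);  // [flink, processes, streams, state, and, timers]
        List<String> upper = toUpper(words);     // [FLINK, PROCESSES, STREAMS, STATE, AND, TIMERS]
        System.out.println(keepLong(upper, 6));  // prints [PROCESSES, STREAMS, TIMERS]
    }
}
```

Because none of these steps remembers anything between elements, Flink can apply them to an unbounded stream one record at a time with no state to checkpoint.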

Next, you will learn to use the process function and the keyed process function, which allow you to perform fine-grained operations on input streams, access operator state, and use timer services.
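The keyed process function pattern combines per-key state with timers. The sketch below simulates it in plain Java, loosely modeled on the count-with-timeout example in Flink's ProcessFunction documentation: each key keeps a count and a last-modified timestamp, every element registers an event-time timer `timeoutMillis` later, and when the watermark passes a timer, the key's count is emitted only if no newer element has arrived for that key. The class, its methods, and the in-memory timer queue are hypothetical stand-ins for Flink's `ValueState`, `TimerService`, and `onTimer`; real Flink state is scoped and checkpointed by the runtime, which this sketch does not model.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical plain-Java simulation of keyed state + event-time timers.
// NOT Flink's KeyedProcessFunction API; no checkpointing or distribution.
class KeyedTimeoutSketch {

    // Per-key state, playing the role of a ValueState scoped to the current key.
    static final class CountState {
        long count;
        long lastModified;
    }

    // A registered event-time timer: fires for `key` once the watermark reaches `time`.
    static final class Timer {
        final String key;
        final long time;
        Timer(String key, long time) { this.key = key; this.time = time; }
    }

    private final long timeoutMillis;
    private final Map<String, CountState> state = new HashMap<>();
    private final PriorityQueue<Timer> timers =
            new PriorityQueue<>((a, b) -> Long.compare(a.time, b.time));
    private final List<String> output = new ArrayList<>();

    KeyedTimeoutSketch(long timeoutMillis) { this.timeoutMillis = timeoutMillis; }

    // Like processElement: update this key's state and register a timer.
    void processElement(String key, long timestamp) {
        CountState s = state.computeIfAbsent(key, k -> new CountState());
        s.count++;
        s.lastModified = timestamp;
        timers.add(new Timer(key, timestamp + timeoutMillis));
    }

    // Like a watermark advancing: fire every timer whose time is <= the watermark.
    void advanceWatermark(long watermark) {
        while (!timers.isEmpty() && timers.peek().time <= watermark) {
            onTimer(timers.poll());
        }
    }

    // Like onTimer: emit only if this key saw no newer element since the timer was set.
    private void onTimer(Timer t) {
        CountState s = state.get(t.key);
        if (s != null && t.time == s.lastModified + timeoutMillis) {
            output.add(t.key + "=" + s.count);
        }
    }

    List<String> output() { return output; }

    public static void main(String[] args) {
        KeyedTimeoutSketch fn = new KeyedTimeoutSketch(1000);
        fn.processElement("a", 0);
        fn.processElement("b", 100);
        fn.processElement("a", 500);   // makes key "a"'s timer at 1000 stale
        fn.advanceWatermark(1200);     // fires a@1000 (stale, skipped) and b@1100
        fn.advanceWatermark(2000);     // fires a@1500
        System.out.println(fn.output()); // prints [b=1, a=2]
    }
}
```

Comparing the timer's time against the key's last-modified timestamp is what lets stale timers fire harmlessly instead of having to be cancelled.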

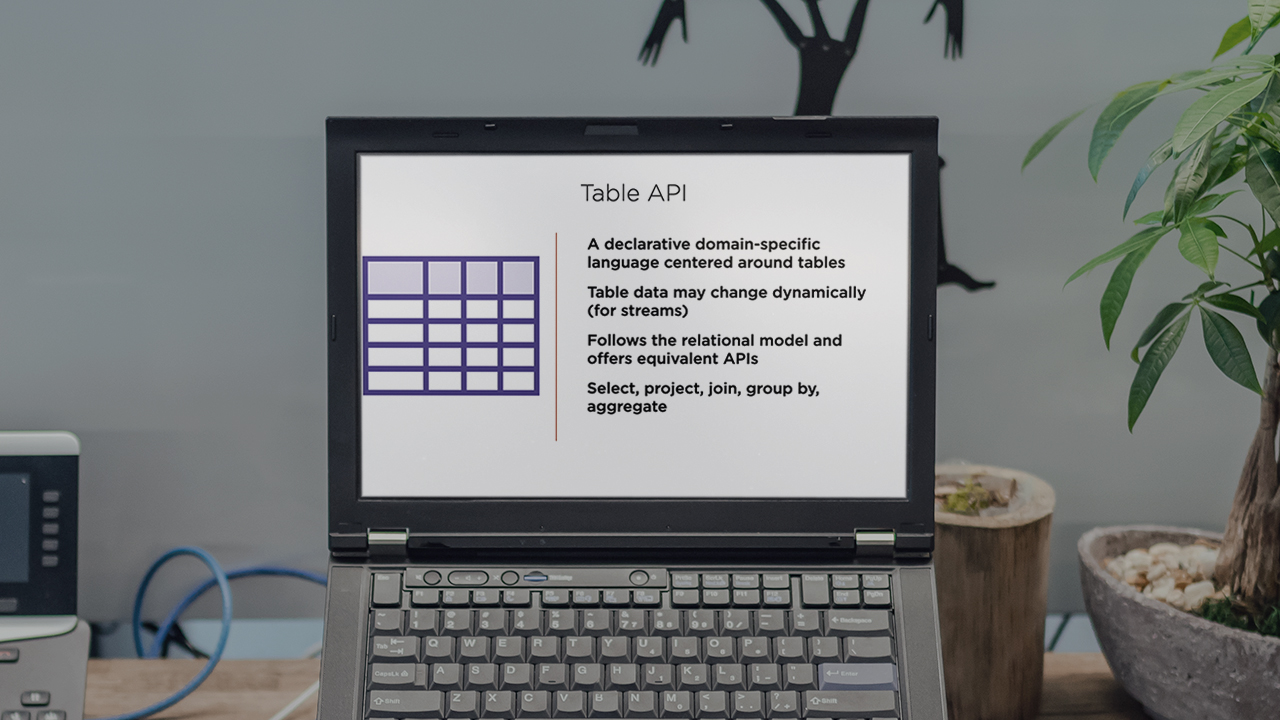

Finally, you will round off your knowledge of the Flink APIs by performing transformations with the Table API and with SQL queries.

When you are finished with this course, you will have the skills and knowledge to design Flink pipelines, access state and timers in Flink, perform windowing and join operations, and run SQL queries on input streams.

Exploring the Apache Flink API for Processing Streaming Data

- Version Check | 15s
- Prerequisites and Course Outline | 2m 53s
- Flink APIs for Stream Processing | 3m 48s
- Data Stream Connectors | 2m 27s
- Demo: Environment and Maven Project Setup | 5m 56s
- Demo: Configuring Dependencies in IntelliJ | 3m 8s
- Demo: Map and Filter Transformations | 4m 38s
- Demo: Streaming File Sink | 2m 31s
- Demo: Running Flink Applications on the Local Flink Cluster | 5m 10s
- Demo: Flatmap Transformations | 3m 45s
- Demo: Keyed Streams and Transforms on Keyed Streams | 5m 59s
- Demo: Using POJOs in Data Streams | 3m 34s
- Demo: Connected Streams | 5m 9s
- Async IO | 5m 18s