- Course

Foundations of PyTorch

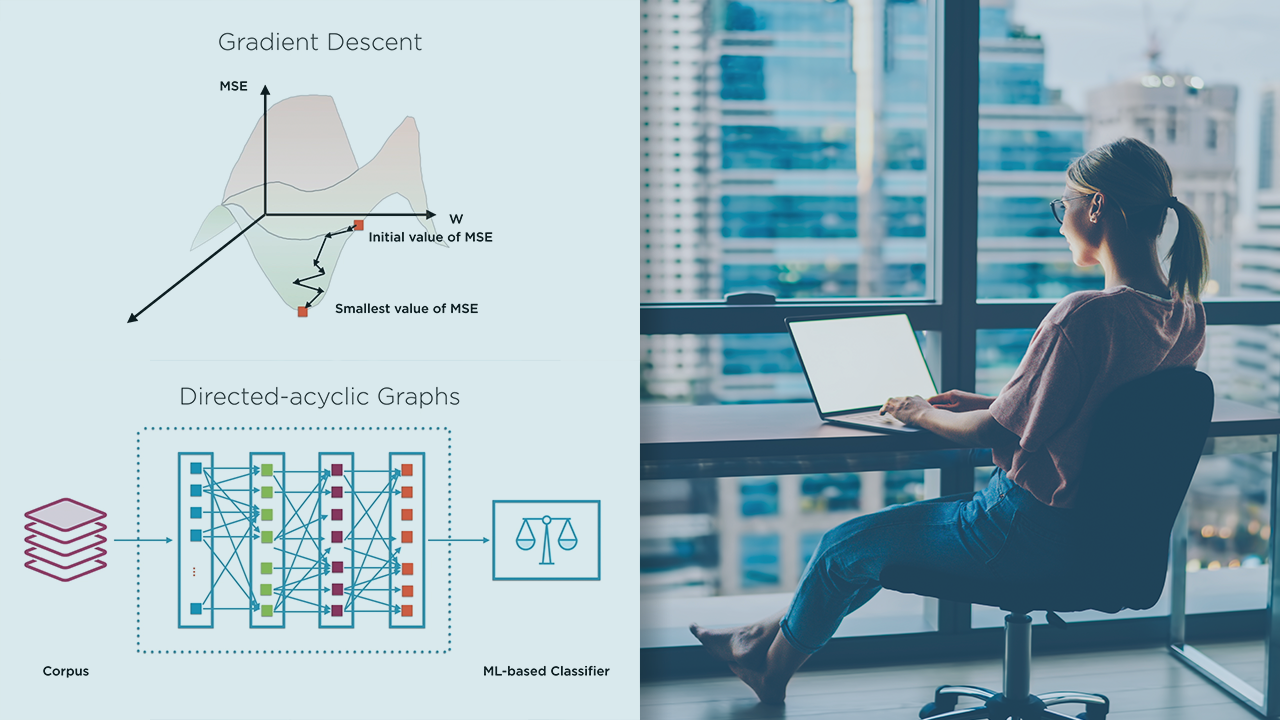

This course covers many aspects of building deep learning models in PyTorch, including neurons and neural networks, how PyTorch uses differential calculus to train such models, and how it creates dynamic computation graphs.

This course is included in the Data library.

What you'll learn

PyTorch is fast emerging as a popular choice for building deep learning models owing to its flexibility, ease of use, and built-in support for optimized hardware such as GPUs. Using PyTorch, you can build complex deep learning models while still using Python-native tools for debugging and visualization. In this course, Foundations of PyTorch, you will gain the ability to leverage PyTorch's support for dynamic computation graphs and contrast it with other popular frameworks such as TensorFlow. First, you will learn the internals of neurons and neural networks, and see how activation functions, affine transformations, and layers come together inside a deep learning model. Next, you will discover how such a model is trained, that is, how the best values of the model parameters are estimated. You will then see how gradient descent optimization is implemented to carry out this estimation efficiently. You will understand the different types of differentiation that could be used in this process, and how PyTorch uses Autograd to implement reverse-mode automatic differentiation. You will work with PyTorch constructs such as Tensors, Variables, and Gradients. Finally, you will explore how to build dynamic computation graphs in PyTorch, and round out the course by contrasting this with the approach used in TensorFlow. TensorFlow is another leading deep learning framework. It previously offered only static computation graphs but has since added support for dynamic computation graphs. When you’re finished with this course, you will have the skills and knowledge to move on to building deep learning models in PyTorch and harness the power of dynamic computation graphs.
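The description touches on several connected mechanisms:

- a neuron as an affine transformation followed by an activation function,
- gradient descent,
- reverse-mode automatic differentiation,
- a computation graph that is built dynamically as code runs.

The following is a minimal pure-Python sketch of those ideas, for illustration only. The `Value` class and the helpers `neuron`, `squared_error` and `train` are hypothetical. They are not how PyTorch's Autograd is implemented. The sketch assumes a single neuron with a sigmoid activation, a squared-error loss and a made-up four-point dataset.

```python
import math


class Value:
    """Hypothetical scalar graph node for illustration (not PyTorch's API).

    Each arithmetic operation returns a new node that remembers its inputs,
    so the graph is recorded on the fly while ordinary Python code runs.
    """

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes self.grad back to the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def backward_fn():  # d(a + b)/da = d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad
        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def backward_fn():  # d(a * b)/da = b, d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward_fn = backward_fn
        return out

    def sigmoid(self):
        s = 1.0 / (1.0 + math.exp(-self.data))
        out = Value(s, (self,))
        def backward_fn():  # sigmoid'(z) = s * (1 - s)
            self.grad += s * (1.0 - s) * out.grad
        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Reverse mode: order the graph topologically, then apply the chain
        # rule from this output node back toward the inputs.
        order, seen = [], set()
        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def neuron(w, b, x):
    # One neuron: the affine transformation w*x + b, then a sigmoid activation.
    return (w * x + b).sigmoid()


def squared_error(w, b, data):
    # A fresh graph is built on every call: a dynamic computation graph.
    loss = Value(0.0)
    for x, target in data:
        diff = neuron(w, b, x) + (-target)
        loss = loss + diff * diff
    return loss


def train(data, steps=200, lr=0.5):
    w, b = Value(0.0), Value(0.0)
    for _ in range(steps):
        loss = squared_error(w, b, data)
        w.grad, b.grad = 0.0, 0.0  # clear gradients from the previous step
        loss.backward()
        # Gradient descent: move each parameter against its gradient.
        w.data -= lr * w.grad
        b.data -= lr * b.grad
    return w, b, loss.data  # loss from the last forward pass


# Toy data (assumed for illustration): negative inputs -> 0, positive -> 1.
data = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]
w, b, final_loss = train(data)
print(f"w={w.data:.3f} b={b.data:.3f} loss={final_loss:.4f}")
```

In PyTorch itself, these roles are filled by real library features:

- tensors created with `requires_grad=True`,
- a call to `loss.backward()`,
- an optimizer such as `torch.optim.SGD`.

The sketch only mirrors the core idea: the graph is recorded while the forward pass runs and is then traversed in reverse to compute gradients.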

Foundations of PyTorch

- Version Check | 16s
- Module Overview | 59s
- Prerequisites and Course Outline | 2m
- Representation Learning Using Neural Networks | 6m 45s
- Neuron as a Mathematical Function | 5m 56s
- Activation Functions | 5m 14s
- Introducing PyTorch | 4m 38s
- TensorFlow and PyTorch | 4m 46s
- Demo: PyTorch Install and Setup | 5m 3s
- Summary | 1m 7s