- Course

Getting Started with Delta Lake on Databricks

This course will teach you how to create, ingest data into, and work with Delta Lake, an open-source storage layer that brings reliability to data stored in data lakes. Delta Lake offers ACID transactions and unified batch and stream processing.

What you'll learn

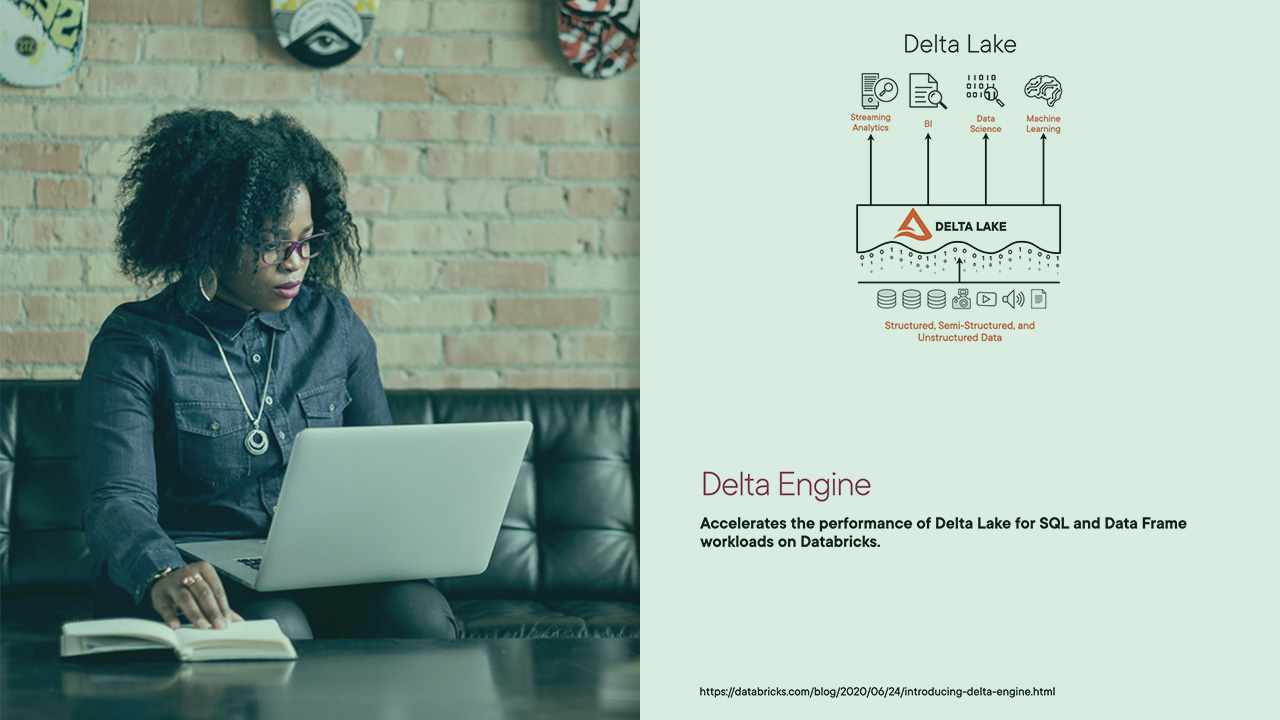

The Databricks Data Lakehouse architecture is an innovative paradigm that combines the flexibility and low-cost storage of data lakes with the features and capabilities of a data warehouse. It achieves this by adding a metadata, indexing, and caching layer on top of data lake storage. That open-source storage layer, Delta Lake, lies at the heart of the Databricks lakehouse architecture.

In this course, Getting Started with Delta Lake on Databricks, you will learn exactly how Delta Lake supports transactions on cloud storage. First, you will learn the basic elements of Delta Lake, namely Delta files, Delta tables, the DeltaLog, and Delta optimizations.
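The DeltaLog is what lets Delta Lake offer transactions on plain cloud storage: each commit is a numbered JSON file under the table's `_delta_log` directory listing actions such as adding or removing data files, and readers replay those commits to find the table's current state. The sketch below is a simplified, in-memory toy model of that idea, not Delta Lake's implementation or API. The `commit` and `snapshot` functions are hypothetical, and real actions carry many more fields (sizes, statistics, timestamps). Rejecting a version that already exists loosely mirrors how concurrent writers race to create the next commit file.

```python
import json
from typing import Dict, List, Optional, Set

# Toy stand-in for a table's _delta_log directory:
# commit version -> newline-delimited JSON actions (hypothetical, simplified).
log: Dict[int, str] = {}


def commit(version: int, actions: List[dict]) -> None:
    """Record one commit atomically; reject it if another writer already claimed this version."""
    if version in log:
        raise RuntimeError(f"conflict: version {version} already exists")
    log[version] = "\n".join(json.dumps(action) for action in actions)


def snapshot(as_of: Optional[int] = None) -> Set[str]:
    """Replay commits in version order to compute the set of live data files."""
    live: Set[str] = set()
    for version in sorted(log):
        if as_of is not None and version > as_of:
            break
        for line in log[version].splitlines():
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


# Version 0 writes two small files.
commit(0, [{"add": {"path": "part-000.parquet"}}, {"add": {"path": "part-001.parquet"}}])
# Version 1 compacts them into one file: both removals and the addition land in one commit.
commit(1, [{"remove": {"path": "part-000.parquet"}},
           {"remove": {"path": "part-001.parquet"}},
           {"add": {"path": "part-002.parquet"}}])

print(sorted(snapshot()))         # ['part-002.parquet']
print(sorted(snapshot(as_of=0)))  # ['part-000.parquet', 'part-001.parquet']
```

Because a reader sees either all of version 1 or none of it, it never observes a half-finished compaction. Replaying only up to version 0 recovers the table as it was before the rewrite.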

Next, you will discover how to improve the performance of queries on Delta tables using several optimizations. Here you will explore Delta caching, data skipping, and file layout optimizations such as partitioning, bin-packing, and Z-order clustering.
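Data skipping and Z-ordering work together. Delta Lake records per-file column statistics such as minimum and maximum values, so a query with a range predicate can skip files whose ranges cannot match. Z-ordering rewrites data so that rows with nearby values in several columns land in the same files, which keeps those ranges tight. The toy sketch below shows both ideas with made-up statistics. `file_stats`, `files_to_scan`, and `z_value` are hypothetical names, and the bit-interleaving Morton code is the textbook Z-order curve, which may differ from the exact clustering Databricks applies.

```python
from typing import Dict, List

# Hypothetical per-file statistics for a column "x": min and max value in each data file.
file_stats: Dict[str, Dict[str, int]] = {
    "f1.parquet": {"min": 0, "max": 99},
    "f2.parquet": {"min": 100, "max": 199},
    "f3.parquet": {"min": 200, "max": 299},
}


def files_to_scan(stats: Dict[str, Dict[str, int]], lo: int, hi: int) -> List[str]:
    """Keep only files whose [min, max] range can overlap the predicate lo <= x <= hi."""
    return [path for path, s in stats.items() if s["max"] >= lo and s["min"] <= hi]


def z_value(x: int, y: int, bits: int = 16) -> int:
    """Interleave the bits of x and y into a Morton (Z-order) code."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)      # bits of x go to even positions
        z |= ((y >> i) & 1) << (2 * i + 1)  # bits of y go to odd positions
    return z


# A query for 150 <= x <= 250 never opens f1.parquet.
print(files_to_scan(file_stats, 150, 250))  # ['f2.parquet', 'f3.parquet']

# Sorting rows by their Z-value clusters points that are close in both x and y.
rows = [(3, 3), (0, 0), (2, 1), (1, 2)]
print(sorted(rows, key=lambda r: z_value(*r)))  # [(0, 0), (2, 1), (1, 2), (3, 3)]
```

Sorting on a single column would give tight ranges for that column only. Interleaving bits trades a little locality in each column for useful locality in all of them, which is why Z-ordering helps filters on any of the clustered columns.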

Finally, you will explore how to ingest data from external sources into Delta tables using batch and streaming ingestion. You will use the COPY INTO command for batch ingestion and Databricks Auto Loader for streaming ingestion.
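COPY INTO and Auto Loader both rely on the same bookkeeping idea: remember which source files have already been ingested and load only new ones, so re-running a load does not duplicate data. The toy sketch below models that idea with a local directory and an in-memory set. `ingest_new_files` and `write_file` are hypothetical helpers, not Databricks APIs, and a real system commits the record of loaded files in the same transaction as the data rather than in a separate step.

```python
import os
import tempfile


def ingest_new_files(source_dir: str, loaded: set, table: list) -> list:
    """Append rows from files not yet in `loaded`, then record those files as loaded."""
    new_files = sorted(name for name in os.listdir(source_dir) if name not in loaded)
    for name in new_files:
        with open(os.path.join(source_dir, name)) as fh:
            # Treat each non-empty line as one row (toy stand-in for CSV/JSON parsing).
            table.extend(line.strip() for line in fh if line.strip())
        loaded.add(name)  # a real system commits this together with the data
    return new_files


def write_file(directory: str, name: str, text: str) -> None:
    """Drop a new file into the source directory (simulates an upstream producer)."""
    with open(os.path.join(directory, name), "w") as fh:
        fh.write(text)


loaded: set = set()  # hypothetical record of already-ingested files
table: list = []     # hypothetical target table, one string per row

with tempfile.TemporaryDirectory() as src:
    write_file(src, "a.csv", "1\n2\n")
    first = ingest_new_files(src, loaded, table)   # ['a.csv']
    second = ingest_new_files(src, loaded, table)  # [] because a.csv was already loaded
    write_file(src, "b.csv", "3\n")
    third = ingest_new_files(src, loaded, table)   # ['b.csv']

print(table)  # ['1', '2', '3']
```

Calling the function on demand resembles a batch COPY INTO run; calling it repeatedly as new files arrive resembles the incremental, stream-style processing that Auto Loader provides.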

When you are finished with this course, you will have the skills and ability to create Delta Lake tables, ingest data into them, and run optimized queries to extract insights.