- Course

Architecting Big Data Solutions Using Google Bigtable

Google Bigtable is a sophisticated NoSQL offering on the Google Cloud Platform with extremely low latencies. By the end of this course, you'll understand why Bigtable is a much more powerful offering than HBase and how it scales linearly with your data.

This course is included in the libraries shown below:

- Cloud

- Data

What you'll learn

Bigtable is Google’s proprietary storage service that offers extremely fast read and write speeds. It uses a sophisticated internal architecture that learns access patterns and moves your data around to mitigate hotspotting.

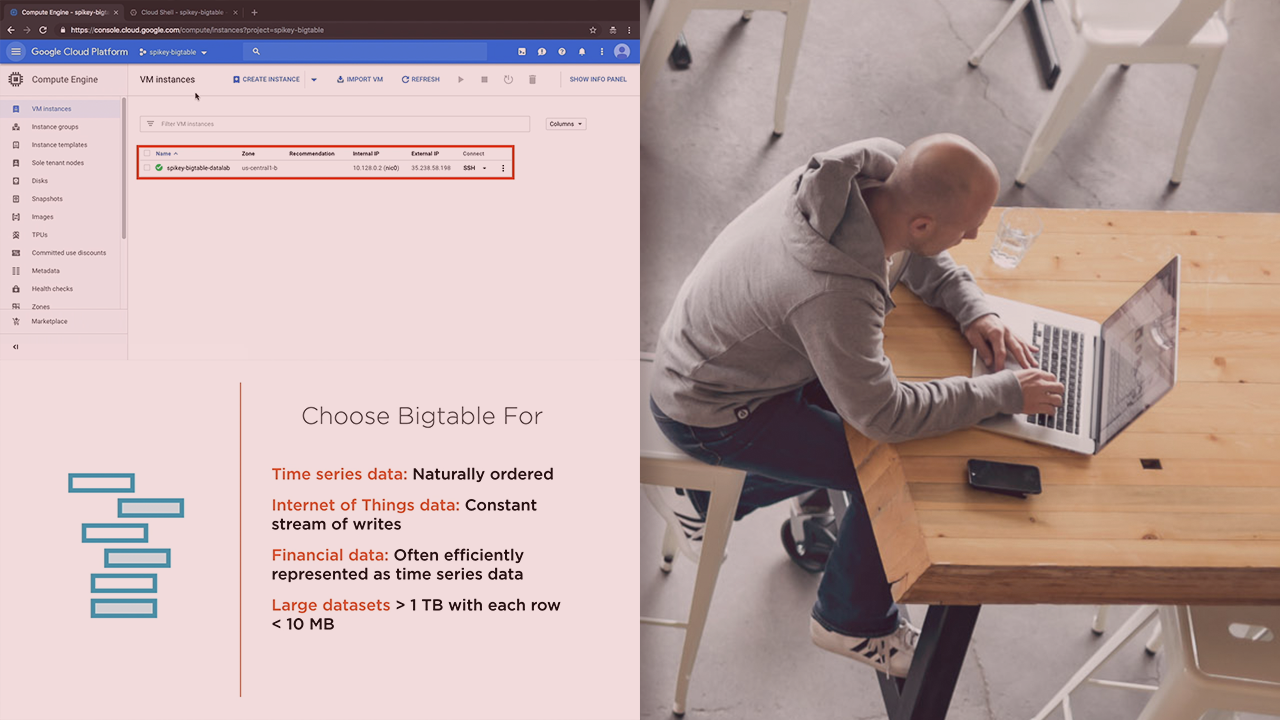

In this course, Architecting Big Data Solutions Using Google Bigtable, you’ll learn both the conceptual and practical aspects of working with Bigtable. You’ll learn how best to design your schema to enable fast read and write speeds, and discover how data in Bigtable can be accessed using the command line as well as client libraries.
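As a rough illustration of why schema design matters: Bigtable keeps rows sorted lexicographically by row key, so a key that starts with a timestamp sends every new write to the same end of the key space and creates a hot spot. The sketch below assumes a hypothetical sensor-readings table keyed by device ID and event time, and compares a timestamp-first key with a field-promoted key (device ID first, then a reversed timestamp so each device's newest reading sorts first). The key formats, `MAX_TS_MS`, and the helper names are illustrative assumptions, not taken from the course.

```python
# Hypothetical row-key builders for a sensor-readings table (illustrative only).
# Bigtable sorts rows lexicographically by key, so key layout controls where writes land.

MAX_TS_MS = 10**13 - 1  # assumed upper bound on millisecond timestamps (13 digits)


def timestamp_first_key(device_id: str, ts_ms: int) -> bytes:
    """Anti-pattern: a monotonically increasing prefix sends all new writes to one key range."""
    return f"{ts_ms:013d}#{device_id}".encode()


def field_promoted_key(device_id: str, ts_ms: int) -> bytes:
    """Device ID first spreads writes across devices; the reversed timestamp
    makes the newest reading for each device sort first within its range."""
    reversed_ts = MAX_TS_MS - ts_ms  # stays within 13 digits for 0 <= ts_ms <= MAX_TS_MS
    return f"{device_id}#{reversed_ts:013d}".encode()


if __name__ == "__main__":
    events = [
        ("sensor-b", 1_700_000_000_000),
        ("sensor-a", 1_700_000_000_500),
        ("sensor-b", 1_700_000_001_000),
    ]
    # Timestamp-first: rows sort purely by time, interleaving devices.
    print(sorted(timestamp_first_key(d, t) for d, t in events))
    # Field-promoted: rows group by device, newest first inside each group.
    print(sorted(field_promoted_key(d, t) for d, t in events))
```

Zero-padding both numeric fields to a fixed width is what keeps lexicographic byte order consistent with numeric order.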

First, you’ll study the internal architecture of Bigtable and how data is stored within it using the 4-dimensional data model. You’ll also discover how Bigtable clusters, nodes, and instances work, and how Bigtable works behind the scenes with Colossus, Google’s proprietary storage system.
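The four dimensions usually cited for Bigtable's data model are row key, column family, column qualifier, and timestamp: a cell value is addressed by all four, and one cell can hold several timestamped versions. The following in-memory Python sketch is a toy model under that assumption, not the Bigtable client API. The class `ToyBigtable`, its methods, and the "keep the N newest versions" rule are hypothetical stand-ins for illustration.

```python
from collections import defaultdict


class ToyBigtable:
    """Toy in-memory model: value = rows[row_key][family][qualifier][timestamp]."""

    def __init__(self, max_versions: int = 3):
        # Assumed garbage-collection policy: keep only the N newest versions per cell.
        self.max_versions = max_versions
        self.rows = defaultdict(lambda: defaultdict(lambda: defaultdict(dict)))

    def set_cell(self, row_key, family, qualifier, value, timestamp):
        versions = self.rows[row_key][family][qualifier]
        versions[timestamp] = value
        # Drop the oldest versions beyond the limit, mimicking a max-versions GC rule.
        for ts in sorted(versions)[:-self.max_versions]:
            del versions[ts]

    def read_latest(self, row_key, family, qualifier):
        # Use .get so reading a missing cell does not create empty rows.
        versions = self.rows.get(row_key, {}).get(family, {}).get(qualifier, {})
        return versions[max(versions)] if versions else None

    def scan(self, start_key="", end_key=None):
        # Rows are returned in lexicographic row-key order, as in Bigtable.
        for key in sorted(self.rows):
            if key >= start_key and (end_key is None or key < end_key):
                yield key


if __name__ == "__main__":
    t = ToyBigtable(max_versions=2)
    t.set_cell("user#42", "profile", "name", "Ada", timestamp=1)
    t.set_cell("user#42", "profile", "name", "Ada L.", timestamp=2)
    t.set_cell("user#42", "profile", "name", "Ada Lovelace", timestamp=3)
    print(t.read_latest("user#42", "profile", "name"))  # Ada Lovelace
```

Because only the timestamp dimension is versioned, writing the same row, family, and qualifier again adds a version rather than overwriting in place.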

Next, you’ll access Bigtable using both the HBase shell and cbt, Google’s command line utility. Later, you'll create and manage tables while practicing exporting and importing data using sequence files.

Finally, you’ll study how to handle manual failovers when single-cluster routing is enabled.
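With single-cluster routing, an app profile sends all of its requests to one cluster, so traffic does not move on its own if that cluster becomes unavailable; a manual failover means repointing the profile at another replicated cluster in the same instance. The sketch below models only that decision in plain Python. `AppProfile` and `manual_failover` are hypothetical names, and the sketch does not call the console, `gcloud`, or any Bigtable admin API, which is where a real change would be made.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppProfile:
    """Hypothetical model of an app profile that uses single-cluster routing."""
    profile_id: str
    cluster_id: str  # the one cluster that receives all of this profile's traffic


def manual_failover(profile: AppProfile, instance_clusters: set, target_cluster: str) -> AppProfile:
    """Return a copy of the profile routed to another cluster in the same instance."""
    if target_cluster not in instance_clusters:
        raise ValueError(f"{target_cluster!r} is not a cluster in this instance")
    if target_cluster == profile.cluster_id:
        return profile  # already routed there; nothing to change
    return AppProfile(profile.profile_id, target_cluster)


if __name__ == "__main__":
    batch = AppProfile("batch", "cluster-east")
    print(manual_failover(batch, {"cluster-east", "cluster-west"}, "cluster-west"))
```

Returning a new frozen object rather than mutating the original keeps the pre-failover routing available if you need to fail back.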

At the end of this course, you’ll be comfortable working with Bigtable using both the command line and client libraries.

Architecting Big Data Solutions Using Google Bigtable

- Module Overview | 1m 36s
- Prerequisites and Course Outline | 3m 7s
- Introducing Bigtable | 7m 1s
- Bigtable vs. Other GCP Services | 6m 25s
- Storage Model | 7m 17s
- Instances, Clusters, Nodes, and Tablets | 3m 2s
- Replication | 5m 57s
- Schema Design | 6m 1s
- Understanding Performance | 3m 26s
- Pricing | 1m 18s
- Enabling Bigtable APIs | 2m 14s
- Creating a Bigtable Instance Using the Web Console | 3m 42s
- Editing a Bigtable Instance | 2m 36s
- Creating a Bigtable Instance Using the Command Line | 3m 41s