- Course

Architecting Stream Processing Solutions Using Google Cloud Pub/Sub

This course is about working with Pub/Sub, including creating and managing topics, subscriptions, and publishing applications. Both push and pull subscriptions are explored, as are advanced features such as seeking to timestamps and snapshots.

What you'll learn

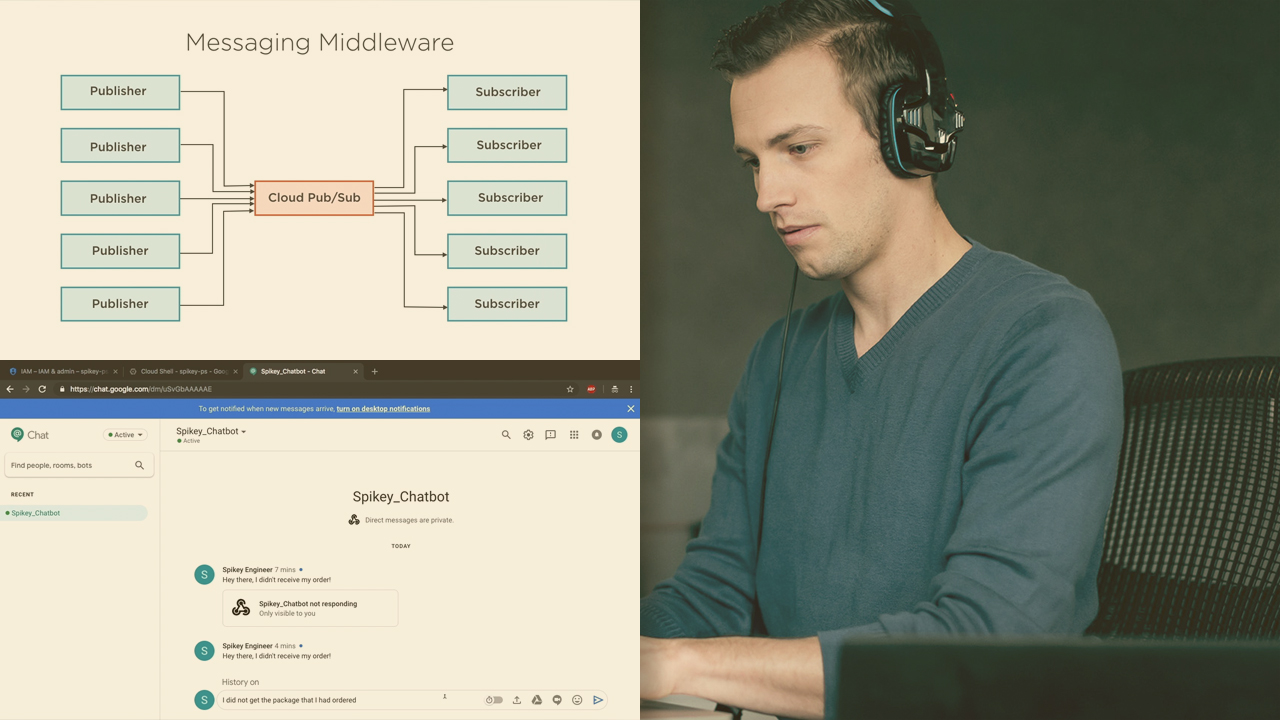

As data warehousing and analytics become more tightly integrated into companies' business models, the need for real-time analytics and data processing has grown. Stream processing has quickly gone from a nice-to-have to a must-have. In this course, Architecting Stream Processing Solutions Using Google Cloud Pub/Sub, you will gain the ability to ingest and process streaming data on Google Cloud Platform, including taking snapshots and replaying messages.

First, you will learn the basics of a publisher-subscriber architecture. Publishers are applications that send out messages, and those messages are organized into topics. Subscriptions are attached to topics, and subscribers receive messages by listening on a subscription. Each subscription acts as its own message queue: a message is held in that queue until a subscriber on that subscription acknowledges it. This is why Pub/Sub is described as a reliable messaging system.

Next, you will discover how to create topics, as well as push and pull subscriptions. As their names suggest, push and pull subscriptions differ in who controls the delivery of messages: with a push subscription, Pub/Sub sends each message to the subscriber's endpoint, while with a pull subscription, the subscriber requests messages itself.

Finally, you will explore how to use advanced features of Pub/Sub such as creating snapshots and seeking to a specific timestamp, either in the past or in the future. You will also learn the precise semantics of creating snapshots and the implications of turning on the “retain acknowledged messages” option on a subscription. When you’re finished with this course, you will have the skills and knowledge of Google Cloud Pub/Sub needed to process streaming data effectively and reliably on GCP.
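The publish/subscribe and acknowledgment semantics described above can be illustrated with a small in-memory model. This is a conceptual sketch only, not the Google Cloud Pub/Sub client library: the class and method names (`Topic`, `Subscription`, `publish`, `pull`, `ack`, `seek`) are hypothetical, and the model ignores ack deadlines, redelivery, retention windows, snapshots, and push delivery. It shows three ideas: a topic fans each message out to every attached subscription, a message stays in a subscription's backlog until it is acknowledged there, and seeking to a timestamp can only replay already-acknowledged messages when the subscription retains them.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Message:
    message_id: int
    data: str
    publish_time: float


@dataclass
class Subscription:
    name: str
    retain_acked_messages: bool = False
    # Outstanding (unacknowledged) messages, keyed by message_id.
    backlog: Dict[int, Message] = field(default_factory=dict)
    # Acknowledged messages, kept only when retain_acked_messages is True.
    acked: Dict[int, Message] = field(default_factory=dict)

    def pull(self) -> List[Message]:
        # Return outstanding messages in publish order. Pulling does not
        # remove them: a message leaves the backlog only when it is acked.
        return sorted(self.backlog.values(), key=lambda m: m.message_id)

    def ack(self, message_id: int) -> None:
        msg = self.backlog.pop(message_id, None)
        if msg is not None and self.retain_acked_messages:
            self.acked[message_id] = msg

    def seek(self, timestamp: float) -> None:
        # Toy seek-to-time: messages published before `timestamp` count as
        # acknowledged, the rest as unacknowledged. Already-acked messages
        # can only be replayed if they were retained.
        known = {**self.acked, **self.backlog}
        self.backlog = {i: m for i, m in known.items() if m.publish_time >= timestamp}
        older = {i: m for i, m in known.items() if m.publish_time < timestamp}
        self.acked = older if self.retain_acked_messages else {}


class Topic:
    def __init__(self, name: str) -> None:
        self.name = name
        self.subscriptions: Dict[str, Subscription] = {}
        self._next_id = 0

    def create_subscription(self, name: str, retain_acked_messages: bool = False) -> Subscription:
        sub = Subscription(name, retain_acked_messages)
        self.subscriptions[name] = sub
        return sub

    def publish(self, data: str, publish_time: float) -> int:
        # Fan-out: each subscription attached to the topic gets its own copy,
        # so acking in one subscription does not affect the others.
        self._next_id += 1
        msg = Message(self._next_id, data, publish_time)
        for sub in self.subscriptions.values():
            sub.backlog[msg.message_id] = msg
        return msg.message_id


if __name__ == "__main__":
    orders = Topic("orders")
    billing = orders.create_subscription("billing")
    audit = orders.create_subscription("audit", retain_acked_messages=True)

    orders.publish("order-1", publish_time=1.0)
    orders.publish("order-2", publish_time=2.0)

    # Each subscription receives and acknowledges both messages independently.
    for sub in (billing, audit):
        for m in sub.pull():
            sub.ack(m.message_id)

    # Seek both subscriptions back to the beginning.
    billing.seek(0.0)
    audit.seek(0.0)
    print([m.data for m in billing.pull()])  # [] (acked messages were not retained)
    print([m.data for m in audit.pull()])    # ['order-1', 'order-2']
```

The usage at the bottom contrasts two subscriptions on the same topic: only the one with `retain_acked_messages=True` gets its acknowledged messages back after seeking into the past, which mirrors why that option matters for replay. Seeking to a future timestamp in this model simply marks everything currently outstanding as acknowledged.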