- Course

Architecting Big Data Solutions Using Google Dataproc

Dataproc is Google’s managed Hadoop offering on the cloud. This course teaches you how separating storage from compute lets you use clusters more efficiently, purely for processing data rather than for storing it.

This course is included in the libraries shown below:

- Cloud

What you'll learn

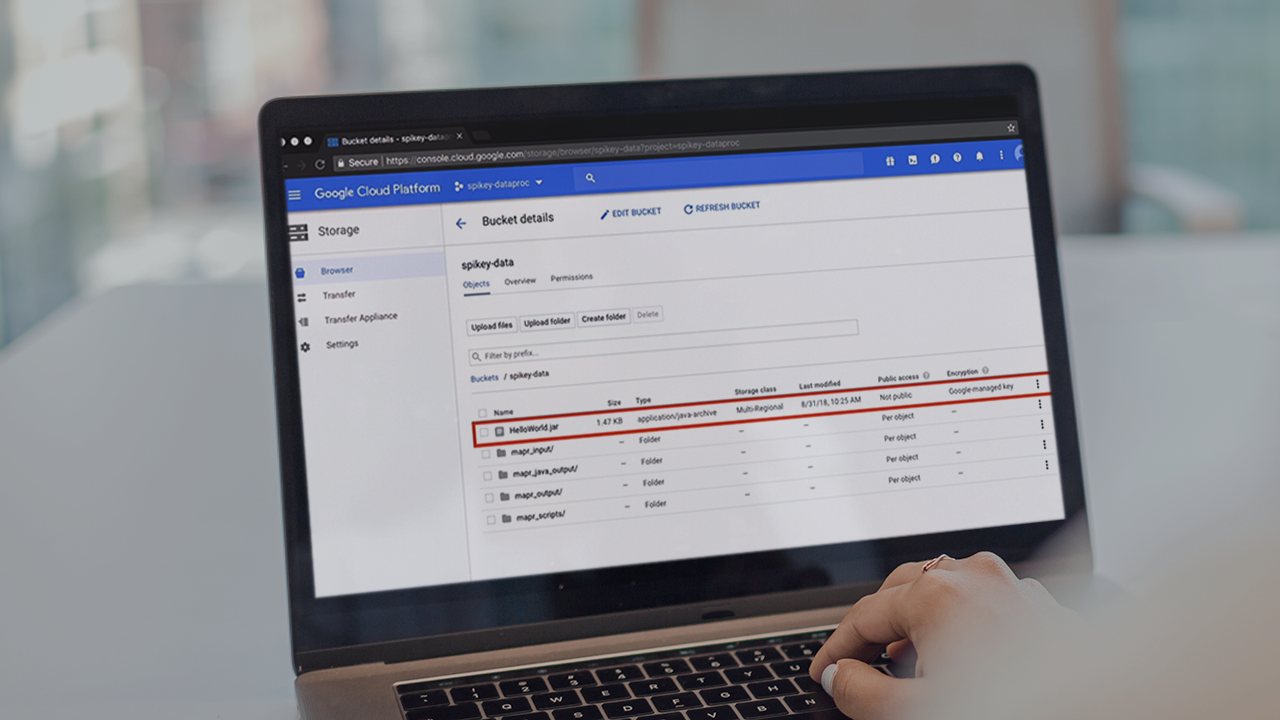

When organizations plan their move to Google Cloud Platform, Dataproc offers the same features as on-premises Hadoop, along with powerful additional paradigms such as the separation of compute and storage. Dataproc lets you lift and shift your Hadoop processing jobs to the cloud while storing your data separately in Cloud Storage buckets, which removes the need to keep your clusters running at all times. In this course, Architecting Big Data Solutions Using Google Dataproc, you’ll learn to work with managed Hadoop on Google Cloud and the best practices for migrating your on-premises jobs to Dataproc clusters. First, you’ll create a Dataproc cluster and configure firewall rules so that you can access the cluster manager UI from your local machine. Next, you’ll discover how to use the Spark distributed analytics engine on your Dataproc cluster. Then, you’ll explore how to write code that integrates your Spark jobs with BigQuery and Cloud Storage buckets using connectors. Finally, you’ll learn how to use your Dataproc cluster to perform extract, transform, and load (ETL) operations with Pig as a scripting language, and how to work with Hive tables. By the end of this course, you’ll have the knowledge needed to work with Google’s managed Hadoop offering and a sound idea of how to migrate jobs and data from your on-premises Hadoop cluster to Google Cloud.

Architecting Big Data Solutions Using Google Dataproc

- Module Overview | 1m 46s
- Prerequisites, Course Outline, and Spikey Sales Scenarios | 4m 3s
- Distributed Processing | 2m 57s
- Storage in Traditional Hadoop | 3m 3s
- Compute in Traditional Hadoop | 4m 19s
- Separating Storage and Compute with Dataproc | 6m 22s
- Hadoop vs. Dataproc | 3m 34s
- Using the Cloud Shell, Enabling the Dataproc API | 3m 48s
- Dataproc Features | 3m 56s
- Migrating to Dataproc | 5m 50s
- Dataproc Pricing | 3m