- Course

Getting Started with Google Play Services

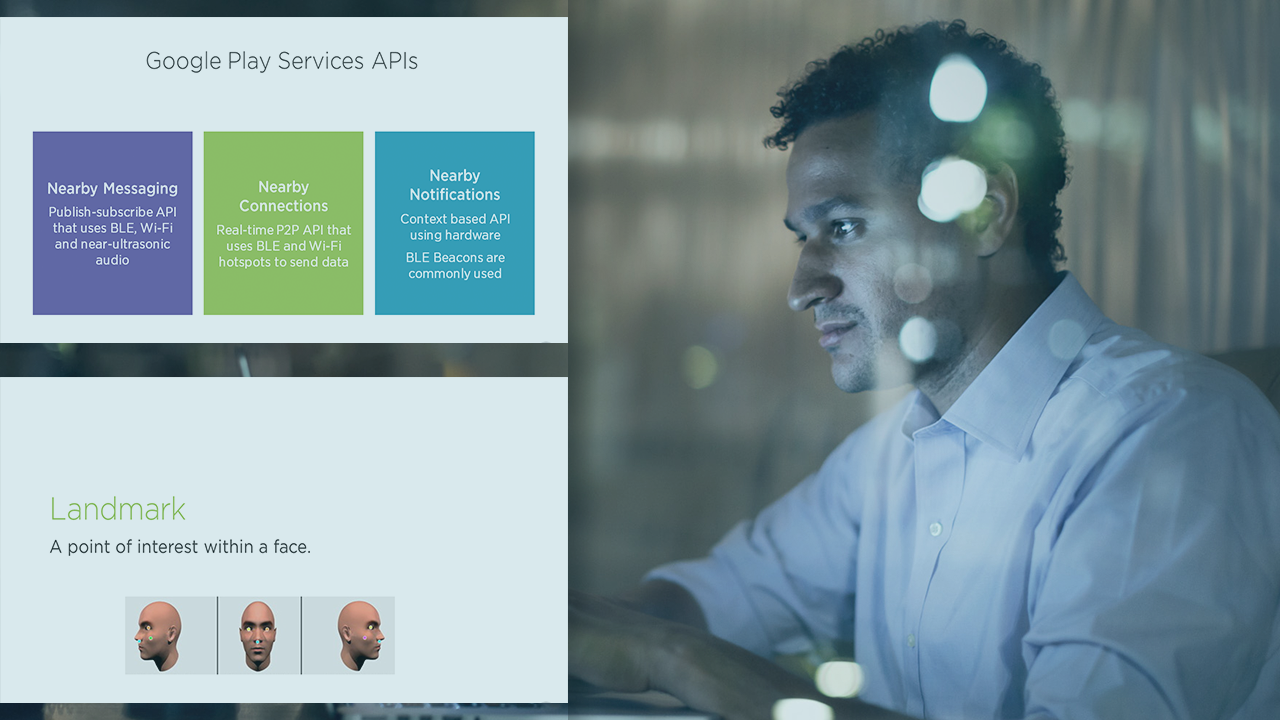

The Google Play Services API provides a broad set of tools for building great features natively into Android applications. This course introduces the basics of implementing two of these features: Nearby Messaging and Mobile Vision.

What you'll learn

In the past, developers had to rely on third-party online services to offer features such as Text Detection or Barcode Scanning in their apps. In this course, Getting Started with the Google Play Services API, you’ll be introduced to these features and learn how to integrate them into your apps. First, you'll learn how to implement Nearby Messaging natively in your apps and see the code needed to send messages between Android apps that are in close proximity to each other. Then, you'll be introduced to Mobile Vision. Finally, you'll explore three Mobile Vision features available in the Google Play Services API: Text Detection, Barcode Detection, and Face Detection. When you’re finished with this course, you’ll have the fundamental knowledge needed to offer these advanced features in new apps or in apps you’ve already built.