- Course

Handling Streaming Data with GCP Dataflow

Dataflow is a serverless, fully managed service on Google Cloud Platform for batch and stream processing.

This course is included in the libraries shown below:

- Cloud

- Data

What you'll learn

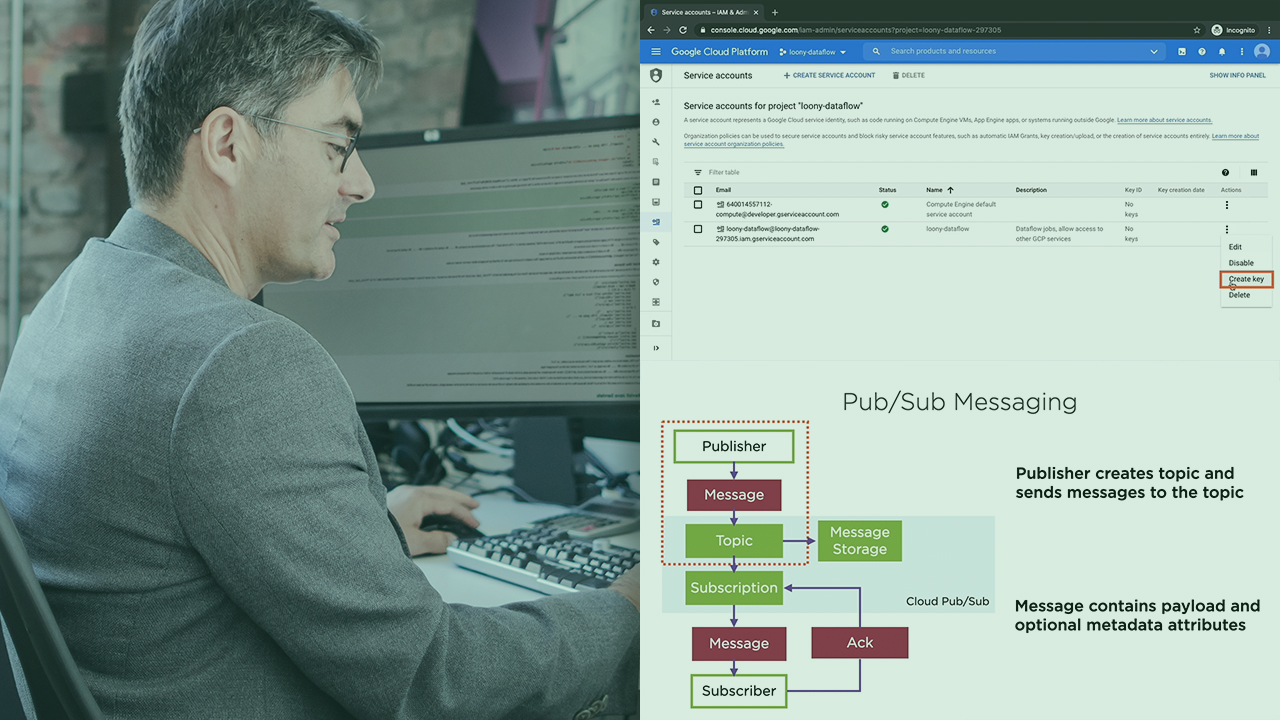

Dataflow allows developers to process and transform data using easy, intuitive APIs. Dataflow is built on the Apache Beam architecture and unifies batch and stream processing of data. In this course, Handling Streaming Data with GCP Dataflow, you will discover that GCP provides a wide range of connectors to integrate the Dataflow service with other GCP services such as the Pub/Sub messaging service and the BigQuery data warehouse.

First, you will see how you can integrate your Dataflow pipelines with other services to use as a source of streaming data or as a sink for your final results.

Next, you will stream live Twitter feeds to the Pub/Sub messaging service and implement a pipeline to read and process these Twitter messages. Finally, you will implement pipelines with a side input, as well as branching pipelines that write your final results to multiple sinks. When you are finished with this course, you will have the skills and knowledge to design complex Dataflow pipelines, integrate these pipelines with other Google services, and test and run these pipelines on the Google Cloud Platform.

Handling Streaming Data with GCP Dataflow

- Version Check | 15s
- Prerequisites and Course Outline | 3m 38s
- Demo: Enabling APIs on the GCP | 2m 38s
- Demo: Creating a Service Account | 4m 30s
- Demo: Creating an Apache Maven Project | 5m 11s
- Demo: Uploading Data to a Cloud Storage Bucket | 3m 10s
- Demo: Implementing a Dataflow Pipeline for Batch Data | 5m 8s
- Demo: Executing a Dataflow Pipeline | 4m 54s
- Demo: Viewing Final Results | 1m 28s
- Demo: Custom Pipeline Options | 5m 41s
- Demo: Implementing a Pipeline to Read from Pub/Sub | 5m 47s
- Demo: Executing a Streaming Dataflow Pipeline | 4m 49s
- Demo: Debugging Slow Pipelines | 5m 42s