- Course

Implementing Bootstrap Methods in R

This course focuses on conceptually understanding and robustly implementing various Bootstrap methods in estimation, including correctly using the non-parametric bootstrap, extending the bootstrap method to a Bayesian world, and more.

What you'll learn

Perhaps the most common type of problem in statistics involves estimating some property of a population and quantifying how confident you can be in that estimate. The field's vocabulary reflects this: a statistic is a property of a sample, and you use that statistic to estimate the parameter, which is the same property for the population as a whole.

Now, if the property you wish to estimate is a simple one (say, the mean) and the population has nice, known properties (say, it is normally distributed), then this problem is often quite easy to solve. But what if you wish to estimate a complex, arcane property of a population about which you know almost nothing? In this course, Implementing Bootstrap Methods in R, you will explore an almost magical technique known as the bootstrap method, which can be used in exactly such situations.

First, you will learn how the bootstrap method works: it relies on collecting a single sample from the population and then repeatedly resampling from that sample, exactly as if that sample were the population itself, but, crucially, doing so with replacement. You will learn why the bootstrap, a non-parametric technique that almost seems like cheating, is in fact both theoretically sound and practically robust, as well as easy to implement.
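The resampling loop described above can be sketched in a few lines. This is a minimal illustration in Python rather than R, used here only as a language-neutral sketch. The sample values are made up, the function names `bootstrap_distribution` and `percentile_interval_95` are hypothetical, and the simple percentile interval is just one of several ways to turn bootstrap replicates into a confidence interval.

```python
import random
import statistics


def bootstrap_distribution(sample, statistic, n_resamples=2000, seed=None):
    """Compute `statistic` on n_resamples bootstrap resamples of `sample`.

    Each resample has the same size as the original sample and is drawn
    WITH replacement, treating the sample as a stand-in for the population.
    """
    rng = random.Random(seed)
    n = len(sample)
    return [statistic(rng.choices(sample, k=n)) for _ in range(n_resamples)]


def percentile_interval_95(replicates):
    """Simple 95% percentile interval from bootstrap replicates."""
    cuts = statistics.quantiles(replicates, n=40)  # cut points at 2.5%, 5%, ..., 97.5%
    return cuts[0], cuts[-1]


# Illustrative, made-up sample; the median stands in for a statistic
# whose sampling distribution has no convenient closed form.
sample = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 2.5]
replicates = bootstrap_distribution(sample, statistics.median, seed=1)
low, high = percentile_interval_95(replicates)
print(f"median = {statistics.median(sample):.2f}, 95% CI approx. ({low:.2f}, {high:.2f})")
```

Because every resample reuses the original values, each bootstrap median stays within the range of the original sample. The spread of those replicates is what stands in for the unknown sampling distribution.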

Next, you will discover how different variations of the bootstrap approach mitigate specific problems that can arise when using this technique. You will see how the conventional bootstrap can be adapted to a Bayesian approach that goes one step beyond confidence intervals and yields a posterior distribution for the parameter. You will also see how the smooth bootstrap is equivalent to resampling from a kernel density estimate of the original sample, which smooths out the discreteness of that sample, including isolated outliers.
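Both variants can be sketched under stated assumptions. The Bayesian version below follows Rubin's Bayesian bootstrap, which replaces resampling with random Dirichlet(1, ..., 1) weights on the observations. The smooth version resamples with replacement and then adds Gaussian noise, which amounts to drawing from a Gaussian kernel density estimate. The bandwidth rule, the function names, and the data are illustrative assumptions, and Python stands in for R.

```python
import random
import statistics


def bayesian_bootstrap_means(sample, n_draws=2000, seed=None):
    """Bayesian bootstrap (Rubin-style) for the mean.

    Each draw gives the observations Dirichlet(1, ..., 1) weights, generated
    as normalized Exponential(1) variables, and records the weighted mean.
    The draws approximate a posterior distribution for the population mean.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        raw = [rng.expovariate(1.0) for _ in sample]
        total = sum(raw)
        draws.append(sum(w / total * x for w, x in zip(raw, sample)))
    return draws


def smooth_bootstrap_medians(sample, n_resamples=2000, bandwidth=None, seed=None):
    """Smooth bootstrap for the median.

    Resampling with replacement and adding N(0, bandwidth^2) noise to every
    value is equivalent to sampling from a Gaussian kernel density estimate.
    """
    rng = random.Random(seed)
    n = len(sample)
    if bandwidth is None:
        # Assumption: a Silverman-style rule of thumb; other bandwidths are valid.
        bandwidth = 1.06 * statistics.stdev(sample) * n ** (-0.2)
    medians = []
    for _ in range(n_resamples):
        resample = rng.choices(sample, k=n)
        jittered = [x + rng.gauss(0.0, bandwidth) for x in resample]
        medians.append(statistics.median(jittered))
    return medians


# Illustrative, made-up data.
sample = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 2.5]
posterior_means = bayesian_bootstrap_means(sample, seed=2)
smooth_medians = smooth_bootstrap_medians(sample, seed=3)
print(statistics.mean(posterior_means), statistics.stdev(posterior_means))
print(len(set(smooth_medians)), "distinct smooth-bootstrap medians")
```

A plain bootstrap median of ten values can only take a few dozen distinct values. The smooth version produces an essentially continuous set, which is the discreteness it is meant to remove.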

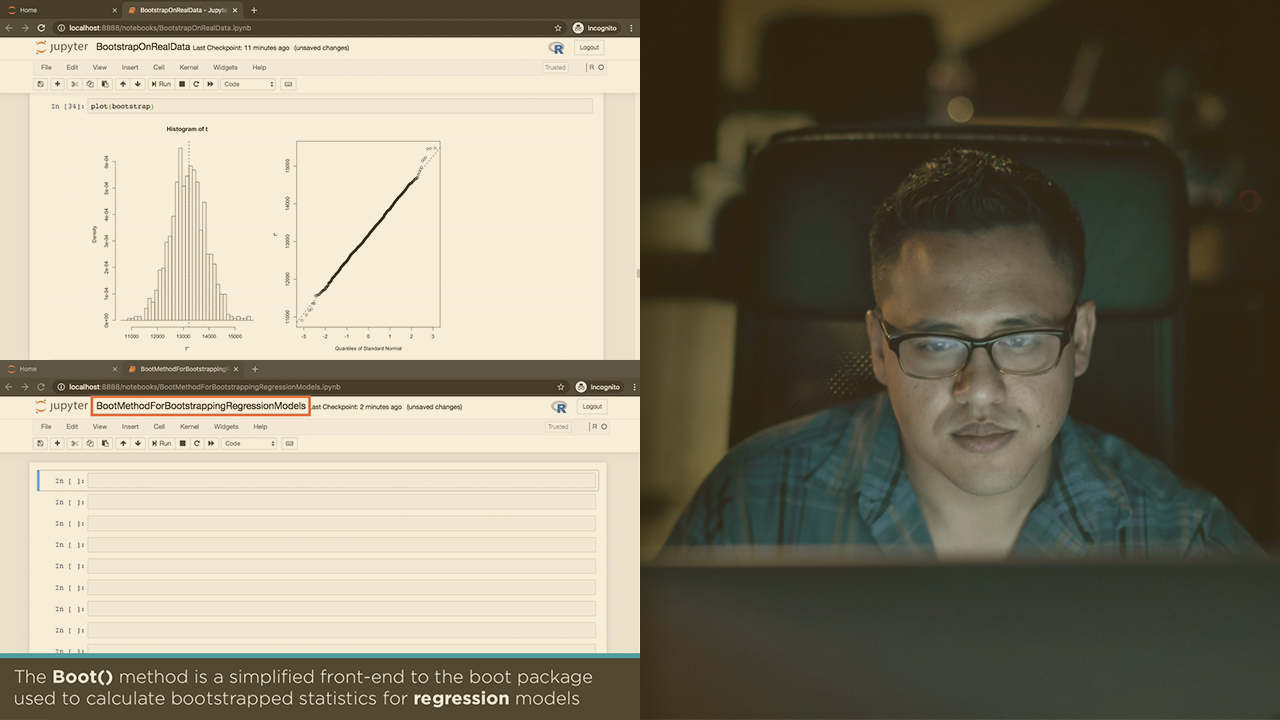

Finally, you will explore how regression problems can be solved using the bootstrap method. You will learn the specific advantages of the bootstrap, for instance in calculating confidence intervals around R-squared, which is quite difficult to do using conventional parametric methods. You will explore two variants of the bootstrap method in the context of regression, case resampling and residual resampling, and understand the different assumptions underlying these two approaches.
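The two regression variants differ in what gets resampled. Case resampling draws whole (x, y) rows with replacement. Residual resampling keeps x fixed and adds resampled residuals to the fitted values, which assumes the fitted model is correct and the errors are exchangeable. The sketch below is in Python, uses a single predictor and made-up data, and collects bootstrap R-squared values under each scheme. The helper names are hypothetical.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b, r_squared)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return a, b, 1 - ss_res / ss_tot


def case_resampling_r2(xs, ys, n_resamples=2000, seed=None):
    """Resample whole (x, y) rows with replacement and refit each time."""
    rng = random.Random(seed)
    rows = list(zip(xs, ys))
    r2s = []
    while len(r2s) < n_resamples:
        boot = rng.choices(rows, k=len(rows))
        bx = [x for x, _ in boot]
        if len(set(bx)) < 2:
            continue  # all x values identical: slope undefined, draw again
        by = [y for _, y in boot]
        r2s.append(fit_line(bx, by)[2])
    return r2s


def residual_resampling_r2(xs, ys, n_resamples=2000, seed=None):
    """Keep x fixed; add resampled residuals to the fitted values and refit."""
    rng = random.Random(seed)
    a, b, _ = fit_line(xs, ys)
    fitted = [a + b * x for x in xs]
    residuals = [y - f for y, f in zip(ys, fitted)]
    r2s = []
    for _ in range(n_resamples):
        new_ys = [f + e for f, e in zip(fitted, rng.choices(residuals, k=len(residuals)))]
        r2s.append(fit_line(xs, new_ys)[2])
    return r2s


def percentile_interval_95(replicates):
    """Simple 95% percentile interval from bootstrap replicates."""
    cuts = statistics.quantiles(replicates, n=40)
    return cuts[0], cuts[-1]


# Illustrative, made-up data with a roughly linear trend.
xs = list(range(1, 13))
ys = [2.3, 2.9, 4.1, 4.8, 5.2, 6.9, 7.1, 7.8, 9.4, 9.9, 11.2, 11.8]
r2_case = case_resampling_r2(xs, ys, seed=4)
r2_resid = residual_resampling_r2(xs, ys, seed=5)
print("R-squared:", round(fit_line(xs, ys)[2], 3))
print("case resampling 95% CI:", percentile_interval_95(r2_case))
print("residual resampling 95% CI:", percentile_interval_95(r2_resid))
```

Case resampling makes fewer assumptions and remains valid when the error variance changes with x. The cost is that it can occasionally produce a degenerate resample with no spread in x, which this sketch simply redraws.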

When you’re finished with this course, you will have the skills and knowledge to identify situations where the bootstrap method can be used to estimate population parameters along with appropriate confidence intervals, as well as to implement statistically sound bootstrap algorithms in R.

Implementing Bootstrap Methods in R

- Version Check | 15s
- Prerequisites and Course Outline | 2m 29s
- Sample Statistics and Confidence Intervals | 5m 6s
- Normally Distributed Data: Estimating Mean | 5m 38s
- Normally Distributed Data: Calculating Confidence Intervals | 3m 21s
- Data with Any Distribution: Estimating Mean and Confidence Intervals | 5m 7s
- Implications of the Central Limit Theorem | 2m 21s
- Demo: The Central Limit Theorem with Different Distributions | 7m 11s
- Demo: The Central Limit Theorem on Real Data | 4m 14s
- Drawbacks of Conventional Approaches | 2m 56s
- Introducing Bootstrapping | 6m 1s
- Bootstrapped Confidence Intervals | 7m 33s