- Course

Understanding the MapReduce Programming Model

The MapReduce programming model is the de facto standard for parallel processing of Big Data. This course introduces MapReduce, explains how data flows through a MapReduce program, and guides you through writing your first MapReduce program in Java.

This course is included in the libraries shown below:

- Data

What you'll learn

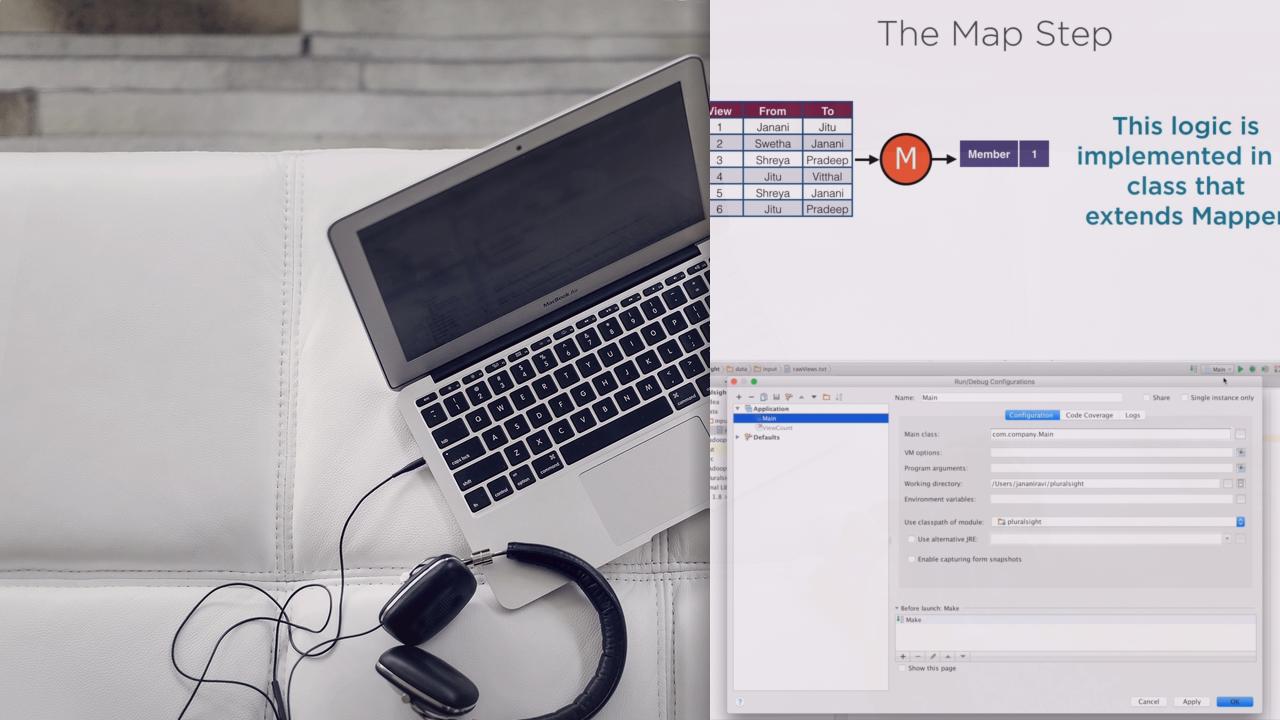

Processing millions of records requires first understanding how to break a task down into parallel processes. The MapReduce programming model, part of the Hadoop ecosystem, gives you a framework for defining your solution in terms of parallel tasks whose outputs are then combined into the final result. In this course, Understanding the MapReduce Programming Model, you'll get an introduction to the MapReduce paradigm. First, you'll learn to visualize how data flows through the map, partition, shuffle, and sort phases before it reaches the reduce phase, which produces the final result. Next, you'll be guided through your very first MapReduce program in Java. Finally, you'll learn to extend the framework's Mapper and Reducer classes to plug in your own logic, and then run this code on your local machine without a Hadoop cluster. By the end of this course, you'll be able to break big data problems into parallel tasks to tackle large-scale data munging operations.
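
To make the data flow described above concrete, here is a minimal word-count sketch in plain Java. It is not the course's code and does not use the Hadoop API: the class `WordCountSketch` and its methods `map`, `partition`, `shuffleAndSort`, `reduce`, and `run` are hypothetical names chosen for illustration, and the hash-modulo partitioning is an assumed scheme. It only imitates, in a single process, the map, partition, shuffle, sort, and reduce phases.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative only: simulates the MapReduce phases in one JVM, without Hadoop.
class WordCountSketch {

    // Map phase: emit a (word, 1) pair for every word in one input line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\W+")) {
            if (!word.isEmpty()) {
                pairs.add(Map.entry(word, 1));
            }
        }
        return pairs;
    }

    // Partition phase (assumed scheme): pick a reducer index from the key's hash.
    static int partition(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Shuffle and sort: group values by key; TreeMap keeps the keys sorted.
    static Map<String, List<Integer>> shuffleAndSort(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: combine all values for one key into a single count.
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // Driver: runs every phase in order and merges the reducers' outputs.
    static Map<String, Integer> run(List<String> lines, int numReducers) {
        List<List<Map.Entry<String, Integer>>> buckets = new ArrayList<>();
        for (int i = 0; i < numReducers; i++) {
            buckets.add(new ArrayList<>());
        }
        for (String line : lines) {
            for (Map.Entry<String, Integer> pair : map(line)) {
                buckets.get(partition(pair.getKey(), numReducers)).add(pair);
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (List<Map.Entry<String, Integer>> bucket : buckets) {
            for (Map.Entry<String, List<Integer>> group : shuffleAndSort(bucket).entrySet()) {
                result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = List.of("the quick brown fox", "the lazy dog", "The fox");
        // prints {brown=1, dog=1, fox=2, lazy=1, quick=1, the=3}
        System.out.println(run(lines, 2));
    }
}
```

Because every occurrence of a key lands in the same partition, each simulated reducer sees all the values for its keys, which is why the per-key sums come out the same regardless of the number of reducers chosen.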