- Course

Parallel Computing with CUDA

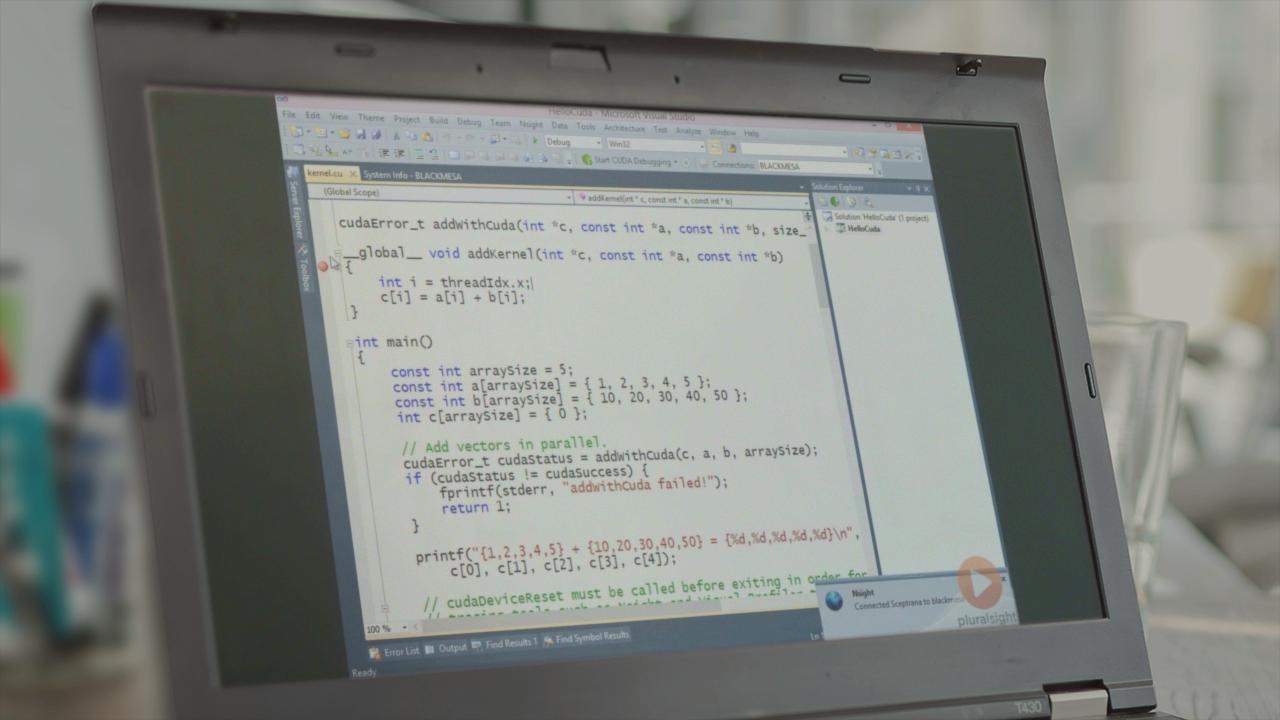

An entry-level course on CUDA, a GPU programming technology from NVIDIA.

This course is included in the libraries shown below:

- Core Tech

What you'll learn

This introductory course shows how to get started with the CUDA platform and leverage the power of modern NVIDIA GPUs. It covers the basics of CUDA C, explains the architecture of the GPU, and presents solutions to some common computational problems that are suitable for GPU acceleration.