- Course

Processing Streaming Data Using Apache Flink

Apache Flink is built on the concept of stream-first architecture where the stream is the source of truth.

This course is included in the libraries shown below:

- Data

What you'll learn

Flink is a stateful, fault-tolerant, large-scale system that works with bounded and unbounded datasets using the same underlying stream-first architecture. In this course, Processing Streaming Data Using Apache Flink, you will integrate your Flink applications with real-time Twitter feeds to analyze high-velocity streams.

First, you’ll see how you can set up a standalone Flink cluster using virtual machines on a cloud platform. Next, you will install and work with the Apache Kafka reliable messaging service.

Finally, you will perform a number of transformation operations on Twitter streams, including windowing and join operations.
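As a rough illustration of what a windowing operation does, the sketch below groups timestamped events into fixed, non-overlapping (tumbling) windows and counts occurrences per key. It is plain Python, not Flink's API; the function name `tumbling_window_counts` and the sample hashtag data are hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count keys per fixed, non-overlapping window.

    events: iterable of (timestamp_in_seconds, key) pairs.
    Returns a dict mapping window start time -> {key: count}.
    """
    windows = defaultdict(Counter)
    for timestamp, key in events:
        # Each event belongs to exactly one window, identified by its start time.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}


# Hypothetical sample data: (event time in seconds, hashtag)
events = [(1, "#flink"), (4, "#kafka"), (7, "#flink"), (12, "#flink"), (14, "#flink")]
result = tumbling_window_counts(events, window_seconds=10)
print(result)  # {0: {'#flink': 2, '#kafka': 1}, 10: {'#flink': 2}}
```

A streaming engine applies the same grouping idea incrementally to an unbounded stream, emitting each window's result once the window closes, rather than processing a finished list as this sketch does.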

When you are finished with this course, you will have the skills and knowledge to work with high-volume, high-velocity data using Flink and to integrate with Apache Kafka to process streaming data.

Processing Streaming Data Using Apache Flink

-

Version Check | 15s

-

Prerequisites and Course Outline | 2m 42s

-

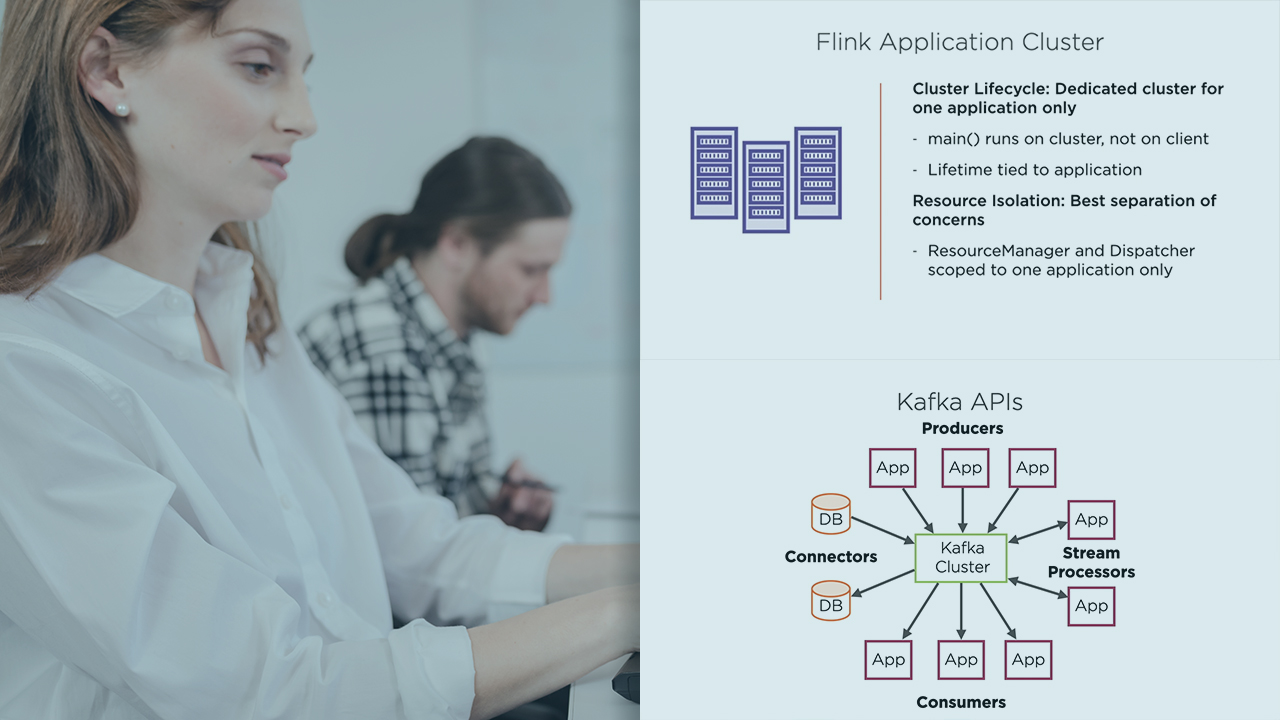

Deployment Modes in Apache Flink | 5m 52s

-

Standalone Cluster | 2m 38s

-

Demo: Provisioning VMs for the Flink Cluster | 4m 19s

-

Demo: Creating Public Private Key Pairs for Passwordless SSH | 5m 22s

-

Demo: Setting up Passwordless SSH | 4m 15s

-

Demo: Install Flink on Cluster Nodes | 3m 14s

-

Demo: Configure Cluster Settings | 4m 54s

-

Demo: Configuring Firewall Rules | 1m 46s

-

Demo: Starting the Job Manager and Task Manager Processes | 2m 20s

-

Demo: Running a Sample Application on the Flink Cluster | 2m 2s

-

Demo: Setting up a Maven Project | 3m 5s

-

Demo: Building a Jar for the Flink Application | 3m 18s

-

Demo: Running a Custom Application on the Flink Cluster | 4m 58s