- Course

Productionalizing Data Pipelines with Apache Airflow 1

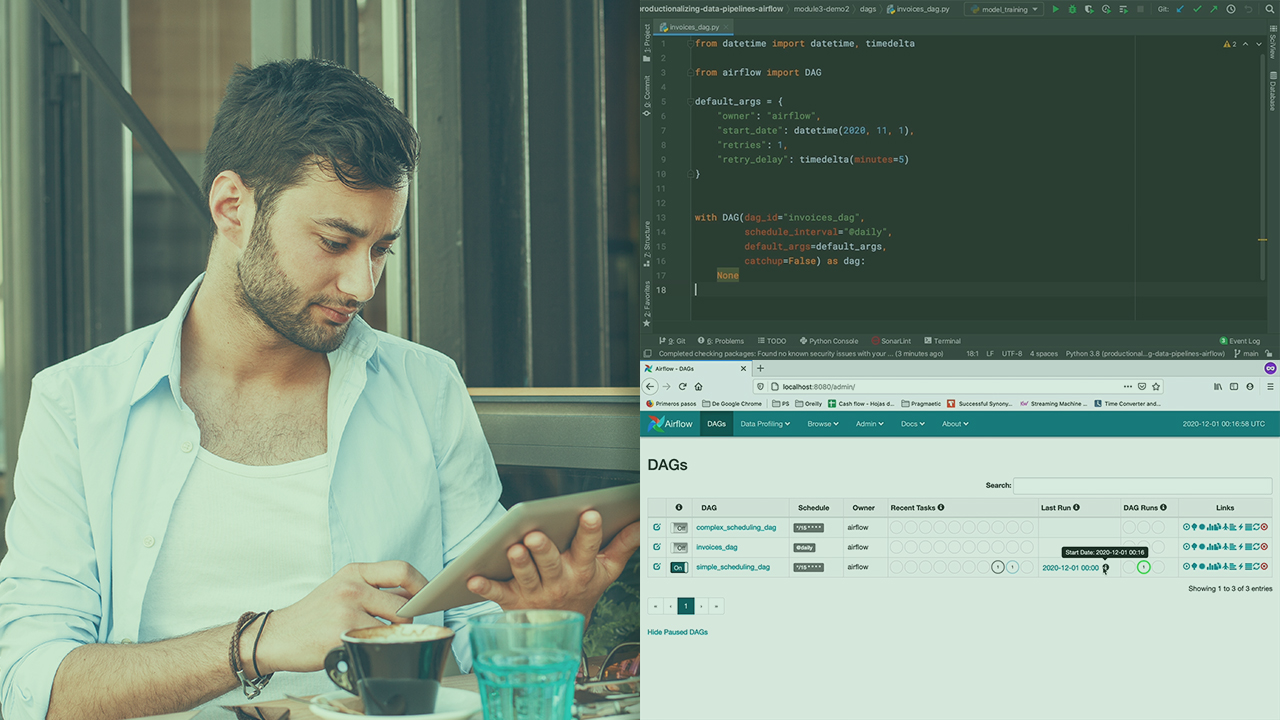

This course teaches you how to build and run production-grade data pipelines with Apache Airflow.



This course is included in the libraries shown below:

- Data

What you'll learn

Production-grade data pipelines are hard to get right. Even once they are built, every update is complex, because pipelines sit at the center of an organization's data infrastructure. In this course, Productionalizing Data Pipelines with Apache Airflow 1, you'll learn to master them using Apache Airflow. First, you'll explore what Airflow is and how it builds data pipelines. Next, you'll discover how to make your pipelines more resilient and predictable. Finally, you'll learn how to distribute tasks with the Celery and Kubernetes executors. When you're finished with this course, you'll have the Apache Airflow skills and knowledge needed to make any data pipeline production-grade.