- Course

Scaling scikit-learn Solutions

This course covers the important considerations for improving the prediction latency and throughput of scikit-learn models, specific feature representation and partial learning techniques, and implementations of incremental learning, out-of-core learning, and multicore parallelism.

This course is included in the libraries shown below:

- AI

- Data

What you'll learn

Even as the number of machine learning frameworks and libraries increases rapidly, scikit-learn remains popular. scikit-learn makes the common machine learning use cases (clustering, classification, dimensionality reduction, and regression) incredibly easy.

In this course, Scaling scikit-learn Solutions, you will gain the ability to leverage out-of-core learning and multicore parallelism in scikit-learn.

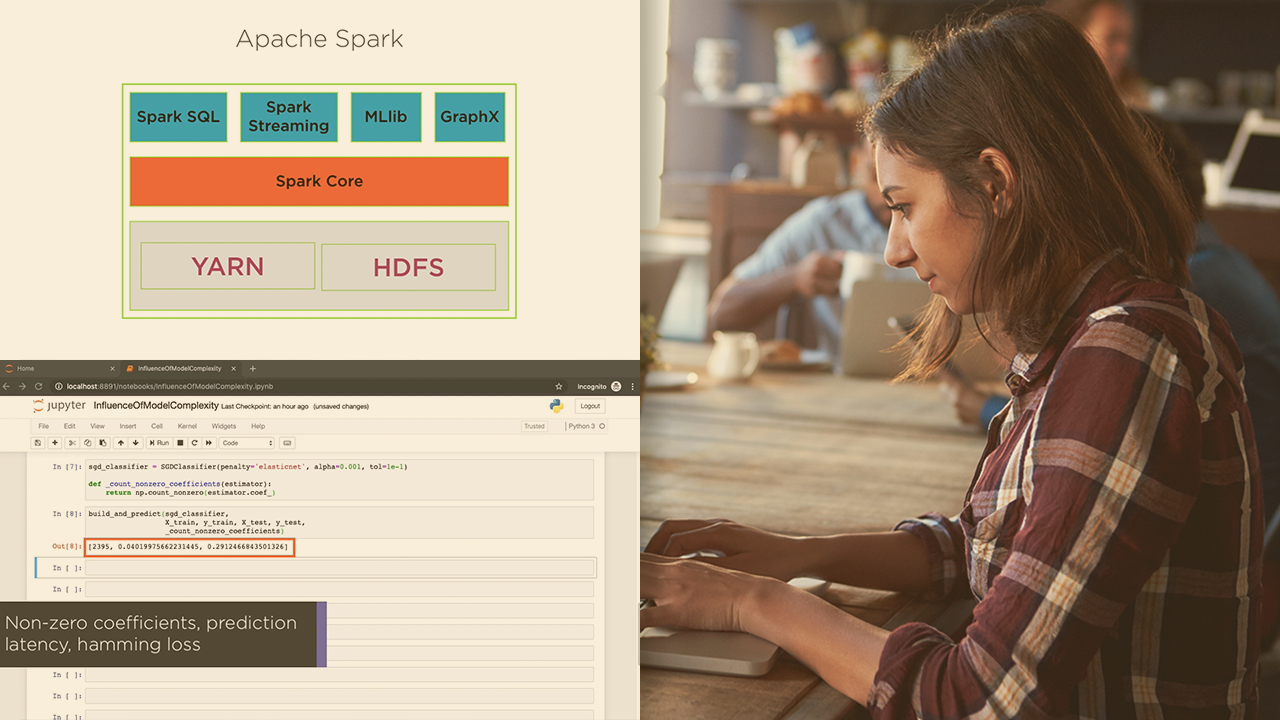

First, you will learn considerations that affect latency and throughput in prediction, including the number of features, feature complexity, and model complexity.
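As a rough illustration of how the number of features can affect prediction latency, the sketch below times `predict` on synthetic data of increasing width. It is not taken from the course: the helper name `mean_predict_latency`, the choice of `Ridge`, and the dataset sizes are all assumptions made for this example, and the absolute timings will vary by machine.

```python
import time

import numpy as np
from sklearn.linear_model import Ridge


def mean_predict_latency(n_features, n_samples=2000, repeats=5, seed=0):
    """Return the average seconds per predicted row (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_samples, n_features))
    y = X @ rng.normal(size=n_features)

    model = Ridge().fit(X, y)

    # Time only the prediction step, repeated to smooth out noise.
    start = time.perf_counter()
    for _ in range(repeats):
        model.predict(X)
    elapsed = time.perf_counter() - start

    return elapsed / (repeats * n_samples)


# Feature counts chosen arbitrarily for illustration.
latencies = {n: mean_predict_latency(n) for n in (10, 100, 1000)}
for n, seconds in latencies.items():
    print(f"{n:>5} features: {seconds * 1e6:.3f} microseconds per row")
```

Throughput is the reciprocal view of the same measurement: rows predicted per second rather than seconds per row.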

Next, you will discover how smart choices in feature representation and in how you model sparse data can improve the scalability of your models. You will then understand what incremental learning is, and how to use scikit-learn estimators that support this key enabler of out-of-core learning.
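The combination of sparse feature representation and incremental learning can be sketched as follows. This is a minimal example under stated assumptions, not the course's own demo: `stream_batches` is a hypothetical stand-in for reading chunks from disk, and the toy texts and labels are invented. `HashingVectorizer` is stateless, so it can transform each batch independently, and `SGDClassifier.partial_fit` updates the model one batch at a time.

```python
import numpy as np
from sklearn.feature_extraction.text import HashingVectorizer
from sklearn.linear_model import SGDClassifier


def stream_batches():
    """Hypothetical stand-in for reading mini-batches from disk."""
    yield ["cheap pills now", "meeting at noon"], [1, 0]
    yield ["win money fast", "lunch tomorrow?"], [1, 0]
    yield ["free prize inside", "project status update"], [1, 0]


# Stateless vectorizer: no fit step, output is a SciPy sparse matrix.
vectorizer = HashingVectorizer(n_features=2**10, alternate_sign=False)
clf = SGDClassifier(random_state=0)

# partial_fit needs the full set of classes up front, because any
# single batch may not contain every label.
classes = np.array([0, 1])

for texts, labels in stream_batches():
    X = vectorizer.transform(texts)
    clf.partial_fit(X, labels, classes=classes)

X_new = vectorizer.transform(["free money"])
prediction = clf.predict(X_new)
print(prediction)
```

Because only one batch is held in memory at a time, the same loop shape applies when the full dataset is too large to load at once.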

Finally, you will round out your knowledge by parallelizing key tasks such as cross-validation, hyperparameter tuning, and ensemble learning.
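In scikit-learn, these three tasks are commonly parallelized through the `n_jobs` parameter. The sketch below is an illustrative assumption rather than the course's code: the synthetic dataset, the parameter grid, and the choice of two workers are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, cross_val_score

# Small synthetic dataset, sized arbitrarily for a quick run.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Ensemble learning: the trees of the forest are built in parallel.
parallel_forest = RandomForestClassifier(
    n_estimators=20, n_jobs=2, random_state=0
).fit(X, y)

# Cross-validation: the folds are evaluated in parallel.
scores = cross_val_score(
    RandomForestClassifier(n_estimators=20, random_state=0),
    X, y, cv=3, n_jobs=2,
)

# Hyperparameter tuning: candidate/fold combinations run in parallel.
search = GridSearchCV(
    RandomForestClassifier(n_estimators=20, random_state=0),
    param_grid={"max_depth": [2, 4]},
    cv=3,
    n_jobs=2,
).fit(X, y)

print(scores.mean(), search.best_params_)
```

Setting `n_jobs=-1` asks for all available cores. Parallelism is requested at only one level per call here, which avoids oversubscribing the CPU with nested workers.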

When you’re finished with this course, you will have the skills and knowledge to identify key techniques that help make your model scalable and to implement them appropriately for your use case.

Scaling scikit-learn Solutions

- Version Check | 16s
- Module Overview | 1m 15s
- Prerequisites and Course Outline | 1m 30s
- Dimensions of Scaling | 2m 2s
- Measuring Performance in Scaling | 6m 2s
- Influence of Number of Features | 5m 6s
- Influence of Feature Extraction Techniques | 4m 37s
- Influence of Feature Representation | 2m 50s
- Demo: Helper Functions to Generate Datasets and Train Models | 4m 43s
- Demo: Measuring Training Latencies for Different Models | 4m 5s
- Module Summary | 1m 27s