
Understanding Algorithms for Reinforcement Learning

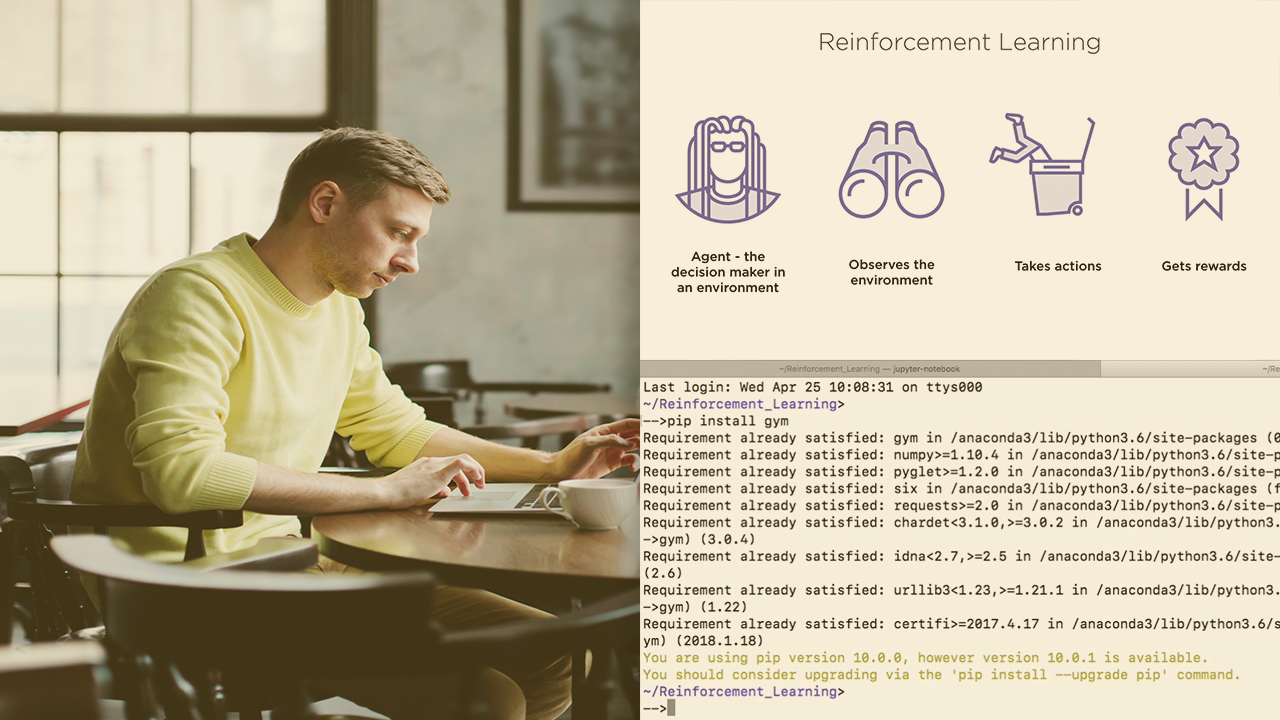

Reinforcement learning is a type of machine learning that allows decision makers to operate in an unknown environment. In a world of self-driving cars and exploration robots, RL is an important field of study for any student of machine learning.

This course is included in the libraries shown below:

- Data

What you'll learn

Traditional machine learning algorithms are used for prediction and classification. Reinforcement learning, by contrast, is about training agents to make decisions that maximize cumulative reward. In this course, Understanding Algorithms for Reinforcement Learning, you'll learn the basic principles of reinforcement learning algorithms, the RL taxonomy, and specific policy search techniques such as Q-learning and SARSA. First, you'll discover the objective of reinforcement learning: to find an optimal policy that allows agents to make the right decisions to maximize long-term reward. You'll study how to model the environment as a Markov decision process so that RL algorithms are computationally tractable. Next, you'll explore dynamic programming, an important technique that caches intermediate results to simplify the computation of complex problems. You'll understand and implement temporal difference (TD) learning techniques such as Q-learning and SARSA, which help converge to an optimal policy for your RL algorithm. Finally, you'll build reinforcement learning platforms that support the study, prototyping, and development of policies, and you'll work with both Q-learning and SARSA on OpenAI Gym. By the end of this course, you should have a solid understanding of reinforcement learning techniques, including Q-learning and SARSA, and be able to implement basic RL algorithms.
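The description names Q-learning and SARSA as the two temporal difference techniques covered. The following is a minimal sketch of both in tabular form. It is not the course's code, and it does not use OpenAI Gym. It assumes a hypothetical six-cell corridor environment in which the agent starts in cell 0 and earns a reward of +1 on reaching the last cell. Every name below (`step`, `epsilon_greedy`, `train`, `greedy_policy`, `N_STATES`) is illustrative, as are the hyperparameters (`alpha`, `gamma`, `epsilon`). The two methods differ only in the bootstrap term of the update. Q-learning uses the best next action, giving the target $r + \gamma \max_{a'} Q(s', a')$. SARSA uses the action actually chosen next, giving the target $r + \gamma Q(s', a')$.

```python
import random

# Hypothetical toy environment (an assumption, not from the course):
# a 1-D corridor of N_STATES cells; the episode ends in the last cell.
N_STATES = 6
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Return (next_state, reward, done) for the corridor."""
    if action == 0:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def epsilon_greedy(q, state, epsilon, rng):
    """Random action with probability epsilon, else a greedy one (ties broken randomly)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state])
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def train(method, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular TD control; method is "q_learning" or "sarsa"."""
    if method not in ("q_learning", "sarsa"):
        raise ValueError("method must be 'q_learning' or 'sarsa'")
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        action = epsilon_greedy(q, state, epsilon, rng)
        done = False
        while not done:
            next_state, reward, done = step(state, action)
            # The behaviour policy picks the next action before the update (as in SARSA).
            next_action = epsilon_greedy(q, next_state, epsilon, rng)
            if done:
                target = reward  # no bootstrapping from a terminal state
            elif method == "q_learning":
                # Off-policy: bootstrap from the best action in next_state.
                target = reward + gamma * max(q[next_state])
            else:
                # On-policy (SARSA): bootstrap from the action actually taken next.
                target = reward + gamma * q[next_state][next_action]
            q[state][action] += alpha * (target - q[state][action])
            state, action = next_state, next_action
    return q


def greedy_policy(q):
    """Greedy action for each non-terminal cell."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    for method in ("q_learning", "sarsa"):
        print(method, greedy_policy(train(method)))
```

Keeping the environment as a plain function makes the update rule easy to read in isolation. To run against a real platform such as OpenAI Gym, you would replace `step` with that library's own environment interface and check its documentation for the exact return values.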

Understanding Algorithms for Reinforcement Learning

- Version Check | 16s
- Module Overview | 1m 49s
- Prerequisites and Course Overview | 2m 22s
- Supervised and Unsupervised Machine Learning Techniques | 5m 11s
- Introducing Reinforcement Learning | 6m 27s
- Reinforcement Learning vs. Supervised and Unsupervised Learning | 2m 29s
- Modeling the Environment as a Markov Decision Process | 6m 37s
- Reinforcement Learning Applications | 3m 12s
- Understanding Policy Search | 7m 21s
- Policy Search Algorithms | 4m 8s