- Course

Building Data Pipelines with Luigi 3 and Python

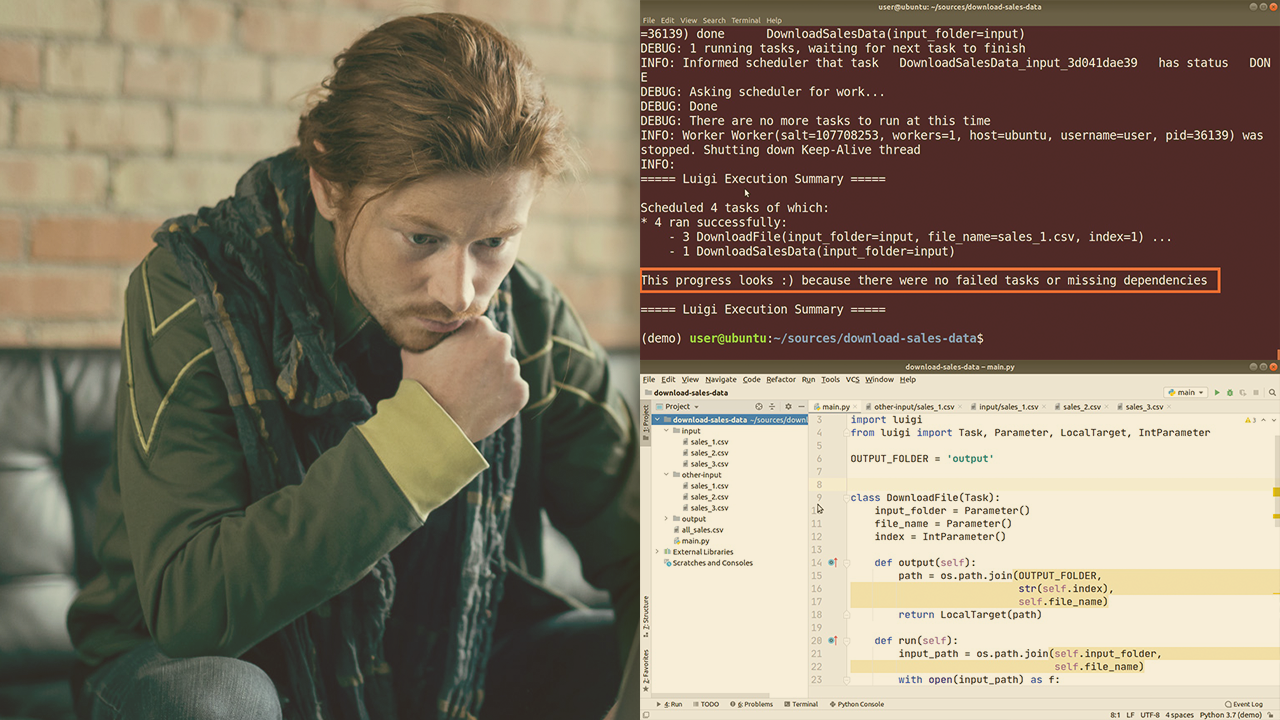

Other developers implement data pipelines by stitching together hacky scripts that, over time, turn into liabilities and maintenance nightmares. Take this course to implement sane, smart data pipelines with Luigi in Python.

This course is included in the libraries shown below:

- Data

What you'll learn

Data arrives from various sources and needs further processing. It's tempting to reinvent the wheel and write your own library for building batch-processing pipelines, but that approach tends to produce pipelines that are difficult to maintain. In this course, Building Data Pipelines with Luigi and Python, you'll learn how to build data pipelines with Luigi and Python. First, you'll explore how to build your first data pipelines with Luigi. Next, you'll discover how to configure Luigi pipelines. Finally, you'll learn how to run Luigi pipelines. When you're finished with this course, you'll have the Luigi skills and knowledge needed to build data pipelines that are easy to maintain.