- Course

Advanced Integration Services

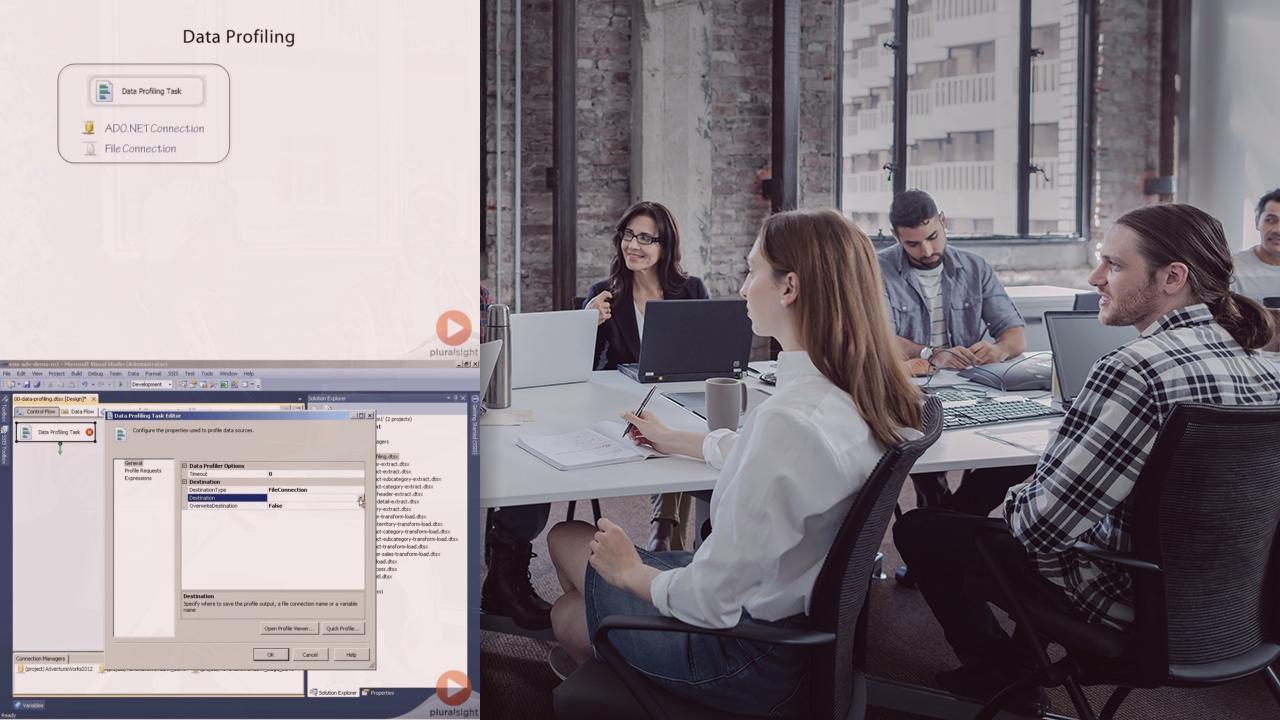

This course teaches how to work with Integration Services packages in the context of a data warehouse development project to perform extract, transform, and load operations.

- Course

Advanced Integration Services

This course teaches how to work with Integration Services packages in the context of a data warehouse development project to perform extract, transform, and load operations.

Get started today

Access this course and other top-rated tech content with one of our business plans.

Try this course for free

Access this course and other top-rated tech content with one of our individual plans.

This course is included in the libraries shown below:

- Data

What you'll learn

This course explains how to apply Integration Services features to build packages that support the extract, transform, and load operations of a data warehouse. It covers design patterns for staging data and for loading data into fact and dimension tables. In addition, this course describes how to enhance ETL packages with data cleansing techniques and offers insight into the buffer architecture of the data flow engine to hep package developers get the best performance from packages. This course was written for SQL Server 2012 Integration Services, but most principles apply to SQL Server 2005 and later.