- Course

Integration Services Fundamentals

This course is for business intelligence developers and database professionals responsible for moving, manipulating, and integrating data. It assumes no prior experience with Integration Services.

This course is included in the libraries shown below:

- Core Tech

What you'll learn

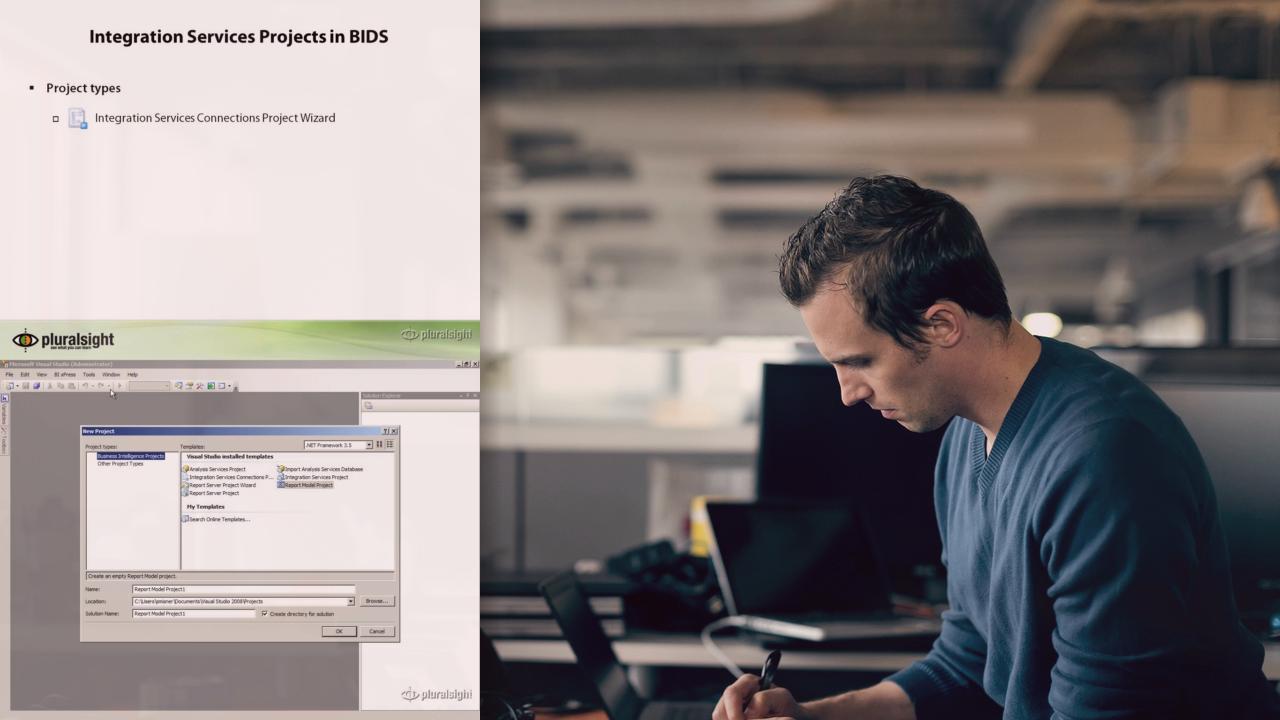

This course explains how to develop Integration Services packages, with an emphasis on processes that support data warehousing. It begins by describing the overall process of package development, then describes the types of tasks that Integration Services provides and shows how these tasks can be performed sequentially or in parallel in the control flow by using precedence constraints. The course then reviews the data flow components used for extract, transform, and load (ETL) processes. It also covers the Integration Services expression language and scripting, and demonstrates how to debug packages, configure logging, manage transactions, and manage package restarts. Finally, it describes how to automate the execution of packages. The features and demonstrations in this course focus on the SQL Server 2008 R2 release, although most topics also apply to earlier versions of Integration Services.