- Course

Web Crawling and Scraping Using Rcrawler

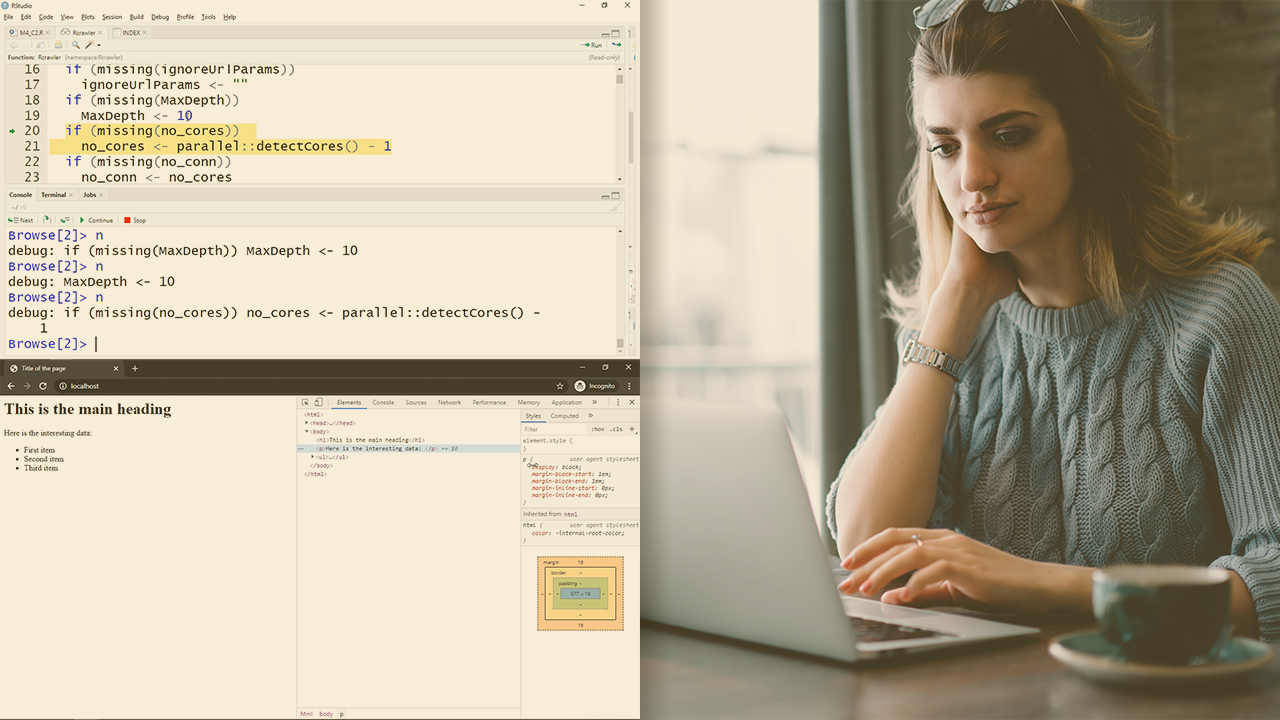

Data is often available on web pages, but retrieving it takes extra effort and caution. This course covers Rcrawler, a web crawler and scraper package that you can use in your R projects.

This course is included in the libraries shown below:

- Data

What you'll learn

How can you get the data you need from a website into your R projects, and how can you automate that with the Rcrawler package? In this course, Web Crawling and Scraping Using Rcrawler, you will cover the Rcrawler package in three steps. First, you will go over basic concepts, the structure of a web page, and examples to get the big picture. Next, you will look at the implications of crawling a website and how to avoid the risks involved. Finally, you will explore how to extract the data you need from a web page, how to collect the pages you need from a large website, and how to troubleshoot Rcrawler. When you're finished with this course, you'll have the Rcrawler skills and knowledge needed to automate retrieving data from web pages.