- Course

Applied Classification with XGBoost 1

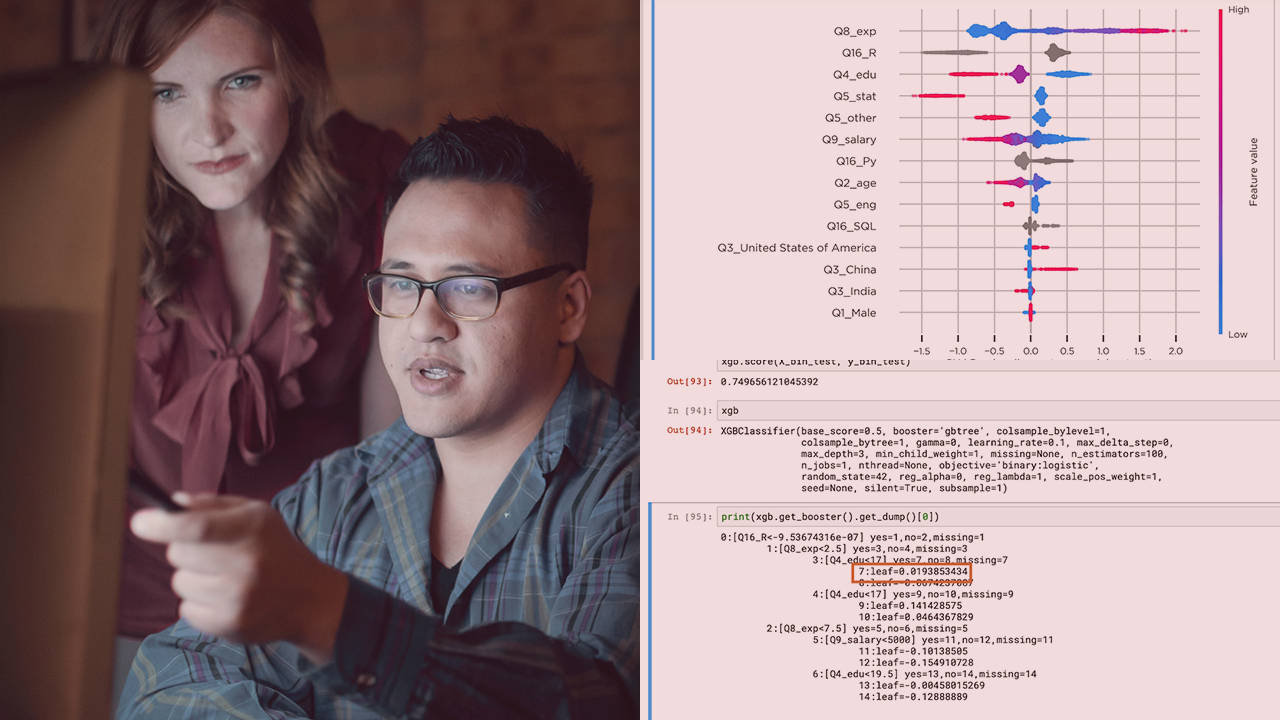

Using Jupyter notebook demos, you'll experience preliminary exploratory data analysis. You will create a classification model with XGBoost. Using third-party libraries, you will explore feature interactions, and explaining the models.

- Course

Applied Classification with XGBoost 1

Using Jupyter notebook demos, you'll experience preliminary exploratory data analysis. You will create a classification model with XGBoost. Using third-party libraries, you will explore feature interactions, and explaining the models.

Get started today

Access this course and other top-rated tech content with one of our business plans.

Try this course for free

Access this course and other top-rated tech content with one of our individual plans.

This course is included in the libraries shown below:

- Data

What you'll learn

Are you a data professional who needs a complete, end-to-end classification demonstration of XGBoost and the libraries surrounding it? In this course, Applied Classification with XGBoost, you'll get introduced to the popular XGBoost library, an advanced ML tool for classification and regression. First, you'll explore the underpinnings of the XGBoost algorithm, see a base-line model, and review the decision tree. Next, you'll discover how boosting works using Jupyter Notebook demos, as well as see preliminary exploratory data analysis in action. Finally, you'll learn how to create, evaluate, and explain data using third party libraries. You won't be using the Iris or Titanic data-set, you'll use real survey data! By the end of this course, you'll be able to take raw data, prepare it, model a classifier, and explore the performance of it. Using the provided notebook, you can follow along on your own machine, or take and adapt the code to your needs.