- Course

Building Your First ETL Pipeline Using Azure Databricks

In this course, you will learn about the Spark-based Azure Databricks platform, see how to set up the environment, quickly build the extract, transform, and load steps of your data pipelines, orchestrate them end-to-end, and run them automatically and reliably.

This course is included in the libraries shown below:

- Cloud

- Data

What you'll learn

With exponential growth in data volumes, a growing variety of data sources, demand for faster data processing, and dynamically changing business requirements, traditional ETL tools are struggling to keep up with the needs of modern data pipelines. While Apache Spark is very popular for big data processing and can help overcome these challenges, managing a Spark environment is no cakewalk.

In this course, Building Your First ETL Pipeline Using Azure Databricks, you will gain the ability to use the Spark-based Databricks platform running on Microsoft Azure and leverage its features to quickly build and orchestrate an end-to-end ETL pipeline. Along the way, you will learn about the collaboration options and optimizations it brings, without worrying about infrastructure management.

First, you will learn the fundamentals of Spark, the Databricks platform and its features, and how it runs on Microsoft Azure.

Next, you will discover how to set up the environment, including the workspace, clusters, and security, and build each of the extract, transform, and load phases separately to implement the dimensional model.

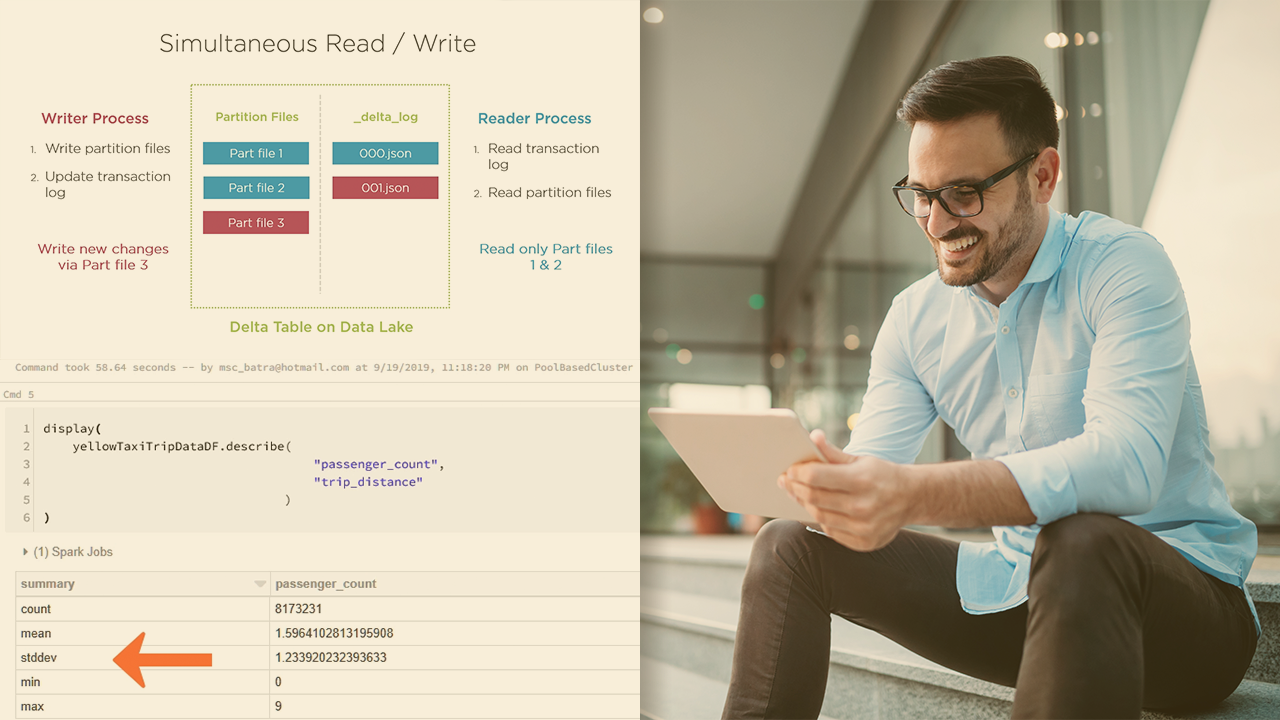

Finally, you will explore how to orchestrate the pipeline using Databricks jobs and Azure Data Factory, followed by other features, such as the Databricks APIs and Delta Lake, that help you build automated and reliable data pipelines.

When you’re finished with this course, you will have the skills and knowledge of the Azure Databricks platform needed to build and orchestrate an end-to-end ETL pipeline.