- Course

Building Features from Nominal Data

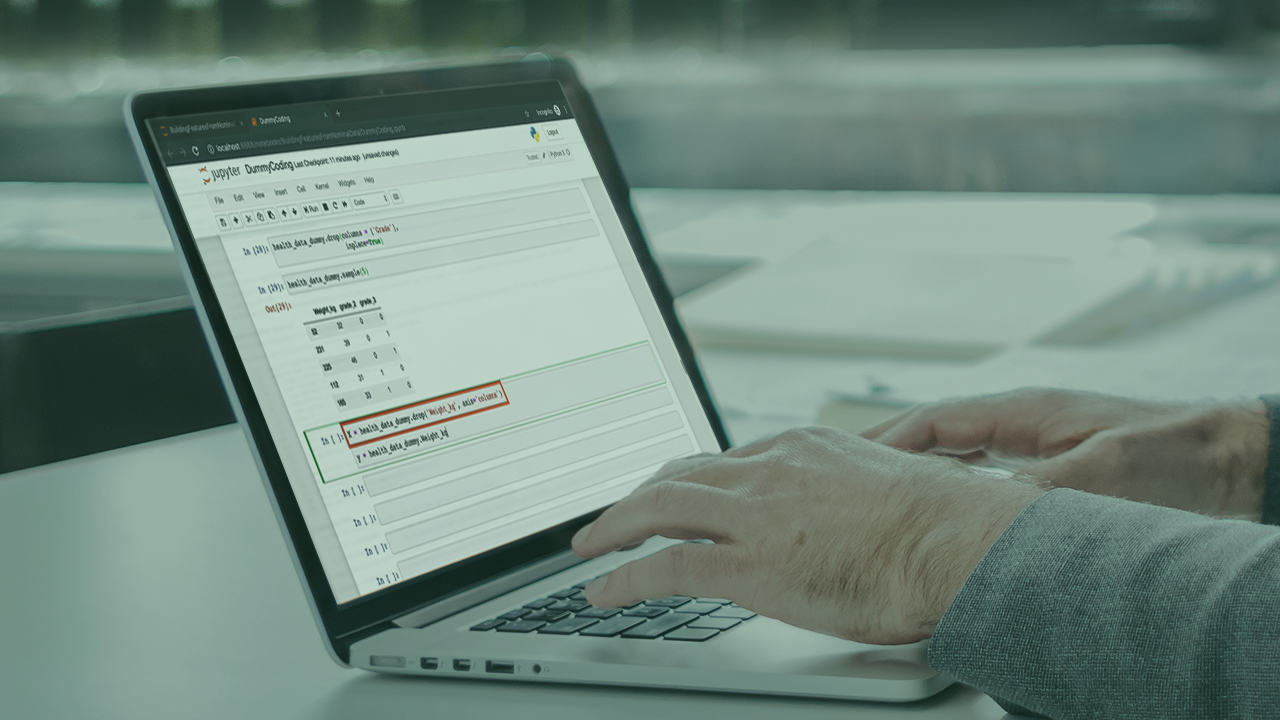

This course covers various techniques for encoding categorical data, starting with the familiar forms of one-hot and label encoding, before moving to contrast coding schemes such as simple coding, Helmert coding, and orthogonal polynomial coding.
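To make the three contrast coding schemes concrete, here is a minimal NumPy sketch that builds each coding matrix for a factor with k levels. Rows are levels and columns are the k-1 regressors that replace the factor in a regression. The function names are hypothetical. The Helmert variant shown compares each level with the mean of the levels before it; some texts and libraries instead compare each level with the mean of the levels after it. The polynomial coding assumes equally spaced, ordered levels.

```python
import numpy as np

def simple_contrast(k: int) -> np.ndarray:
    """Simple coding: compare each non-reference level with the first level.

    This is dummy (treatment) coding minus 1/k, so every column sums to zero
    and, for balanced data, the regression intercept becomes the grand mean.
    """
    return np.eye(k)[:, 1:] - 1.0 / k

def helmert_contrast(k: int) -> np.ndarray:
    """Helmert-style coding: column j compares level j+1 with the mean of levels 0..j."""
    c = np.zeros((k, k - 1))
    for j in range(k - 1):
        c[: j + 1, j] = -1.0   # all preceding levels
        c[j + 1, j] = j + 1.0  # the level being compared
    return c

def poly_contrast(k: int) -> np.ndarray:
    """Orthogonal polynomial coding for k equally spaced ordered levels."""
    x = np.arange(k, dtype=float)
    vander = np.vander(x, k, increasing=True)  # columns: 1, x, x**2, ...
    q, _ = np.linalg.qr(vander)                # orthonormalize the columns in order
    q = q[:, 1:]                               # drop the constant column
    return q * np.sign(q[-1, :])               # fix signs so the last level is positive

for name, fn in [("simple", simple_contrast), ("helmert", helmert_contrast), ("poly", poly_contrast)]:
    print(name)
    print(np.round(fn(4), 3))
```

All three matrices have columns that sum to zero; the Helmert and polynomial columns are also mutually orthogonal, which is what lets each coefficient test a separate hypothesis.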

This course is included in the libraries shown below:

- AI

- Data

What you'll learn

The quality of preprocessing numeric data is an important determinant of the results of machine learning models built using that data. In this course, Building Features from Nominal Data, you will gain the ability to encode categorical data in ways that increase the statistical power of models. First, you will learn the different types of continuous and categorical data, the differences between ratio and interval scale data, and the differences between nominal and ordinal data. Next, you will discover how to encode categorical data using one-hot and label encoding, and how to avoid the dummy variable trap in linear regression. Finally, you will explore how to implement different forms of contrast coding, such as simple, Helmert, and orthogonal polynomial coding, so that regression results closely mirror the hypotheses you wish to test. When you’re finished with this course, you will have the skills and knowledge of encoding categorical data needed to increase the statistical power of linear regressions that include such data.
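A minimal sketch of the two basic encodings and of the dummy variable trap, assuming pandas and NumPy and a made-up `color` column. Label encoding maps each category to an integer code, one-hot encoding creates one indicator column per category, and dropping one indicator column removes the perfect collinearity with the intercept that a linear regression cannot resolve.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"color": ["red", "green", "blue", "green", "red"]})

# Label encoding: each category becomes one integer code. Pandas sorts the
# string categories alphabetically, so blue=0, green=1, red=2.
df["color_label"] = df["color"].astype("category").cat.codes

# One-hot encoding: one 0/1 indicator column per category.
one_hot = pd.get_dummies(df["color"], prefix="color", dtype=int)

# Dummy variable trap: the indicator columns of each row sum to 1, so together
# with an intercept column the design matrix is rank deficient.
X_full = np.column_stack([np.ones(len(df)), one_hot.to_numpy()])
print(np.linalg.matrix_rank(X_full), "of", X_full.shape[1], "columns")

# Dropping one indicator (here the first, "blue", which becomes the reference
# level) restores full column rank.
dummies = pd.get_dummies(df["color"], prefix="color", drop_first=True, dtype=int)
X_drop = np.column_stack([np.ones(len(df)), dummies.to_numpy()])
print(np.linalg.matrix_rank(X_drop), "of", X_drop.shape[1], "columns")
```

Note that label encoding imposes an order (blue < green < red) that nominal data does not have, which is why it suits ordinal features or class labels better than nominal regressors.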

Building Features from Nominal Data

- Version Check | 16s

- Module Overview | 1m 15s

- Prerequisites and Course Outline | 1m 34s

- Continuous and Categorical Data | 4m 9s

- Numeric Data | 5m 4s

- Categorical Data | 3m 30s

- Label Encoding and One-hot Encoding | 3m 34s

- Choosing between Label Encoding and One-hot Encoding | 4m 11s

- Types of Classification Tasks | 4m 32s

- One-hot Encoding with Known and Unknown Categories | 5m 11s

- One-hot Encoding on a Pandas Data Frame Column | 2m 26s

- One-hot Encoding Using pd.get_dummies() | 1m 8s

- Label Encoding to Convert Categorical Data to Ordinal | 6m 6s

- Label Binarizer to Perform One vs. Rest Encoding of Targets | 4m 10s

- Multilabel Binarizer for Encoding Multilabel Targets | 2m 22s

- Module Summary | 1m 16s
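The lessons on unknown categories and on encoding targets can be sketched with scikit-learn, assuming a reasonably recent version. `OneHotEncoder(handle_unknown="ignore")` encodes a category that was not seen during `fit` as an all-zero row, `LabelBinarizer` produces one-vs-rest indicator columns for a multiclass target, and `MultiLabelBinarizer` turns sets of labels into a multi-hot matrix. The animal, label, and genre values are invented for illustration.

```python
import numpy as np
from sklearn.preprocessing import LabelBinarizer, MultiLabelBinarizer, OneHotEncoder

# One-hot encoding of a feature with a known set of categories; an unseen
# category ("fish") becomes an all-zero row instead of raising an error.
enc = OneHotEncoder(handle_unknown="ignore")
enc.fit(np.array([["cat"], ["dog"], ["bird"]]))
unseen = enc.transform(np.array([["dog"], ["fish"]])).toarray()
print(enc.categories_[0])  # categories are stored in sorted order
print(unseen)

# Multiclass target: one indicator column per class (one vs. rest).
lb = LabelBinarizer()
y_ovr = lb.fit_transform(["spam", "ham", "eggs", "ham"])
print(lb.classes_, y_ovr, sep="\n")

# Multilabel target: each sample may carry several labels at once.
mlb = MultiLabelBinarizer()
y_multi = mlb.fit_transform([{"action", "comedy"}, {"drama"}, {"comedy", "drama"}])
print(mlb.classes_, y_multi, sep="\n")
```

For a binary target, `LabelBinarizer` returns a single column rather than two.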