- Course

Designing Scalable Data Architectures on the Google Cloud

This course focuses on the end-to-end design of a cloud architecture, specifically from the perspective of optimizing that architecture for Big Data processing, real-time analytics, and real-time prediction using ML and AI.

This course is included in the libraries shown below:

- Cloud

What you'll learn

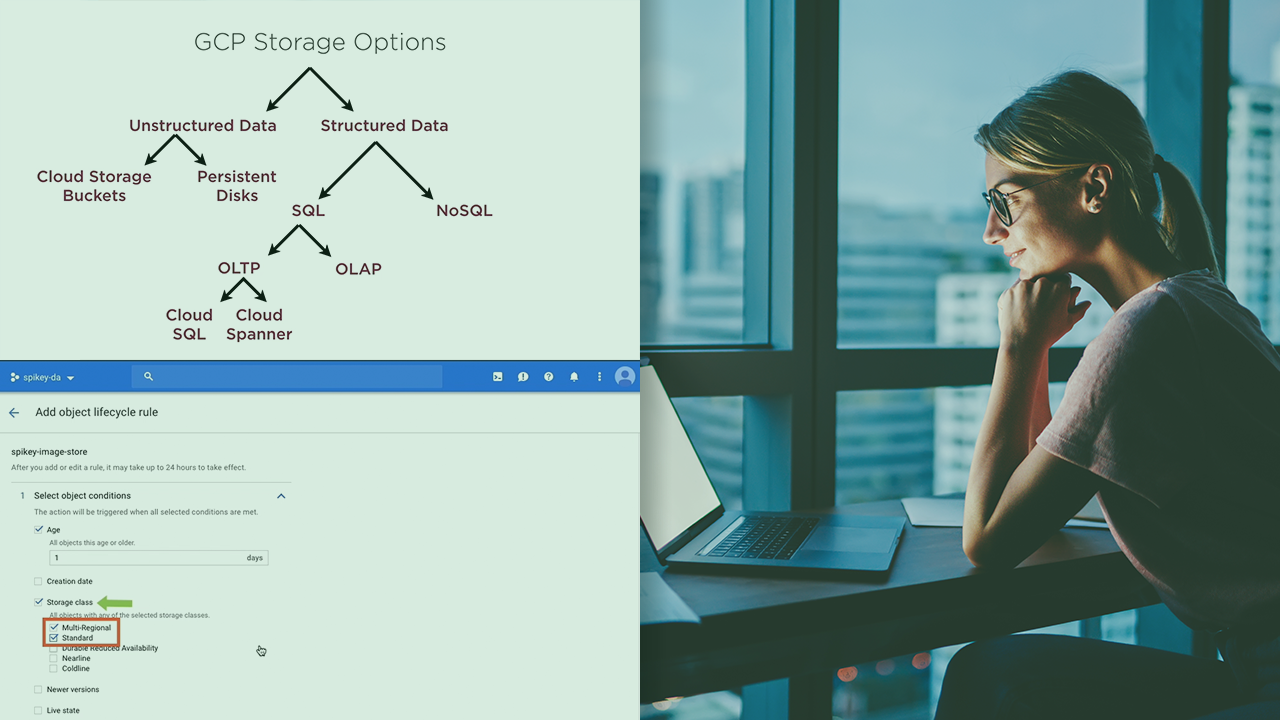

The Google Cloud Platform (GCP) offers a very large number of services covering every important aspect of public cloud computing. This array of services and choices can seem intimidating: even a practitioner who understands several important services may have trouble connecting the dots and fitting those services together in meaningful ways. In this course, Designing Scalable Data Architectures on the Google Cloud, you will gain the ability to design lambda and kappa architectures that integrate batch and stream processing, plan intelligent migration and disaster-recovery strategies, and pick the right ML workflow for your enterprise. First, you will learn why the choice of stream processing architecture is becoming central to the entire design of a cloud-based infrastructure. Next, you will discover how the Transfer Service is an invaluable tool for planning both migration and disaster-recovery strategies on GCP. Finally, you will explore how to pick the right Machine Learning technology for your specific use case. When you're finished with this course, you will have the skills and knowledge, across the entire cross-section of Big Data and Machine Learning offerings on GCP, to build cloud architectures that are optimized for scalability, real-time processing, and the appropriate use of Deep Learning and AI technologies.
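To make the lambda/kappa distinction concrete, here is a minimal, framework-free Python sketch. It is not taken from the course and uses no GCP service; the event shape `(key, value)` and the names `aggregate`, `merge_views`, `lambda_query`, and `kappa_query` are hypothetical. In a lambda architecture, a batch layer periodically recomputes a view over the full master dataset, a speed layer covers events that arrived since the last batch run, and a serving layer merges the two. In a kappa architecture, all data is treated as an append-only log processed by a single streaming path, and reprocessing means replaying the log from an earlier offset.

```python
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical event shape for illustration only: (key, value), e.g. ("sensor-1", 3).
Event = Tuple[str, int]
View = Dict[str, int]


def aggregate(events: Iterable[Event], state: Optional[View] = None) -> View:
    """Shared business logic: running sum of values per key.

    Accepts any iterable, so the same code handles a bounded list (batch)
    or a generator standing in for an unbounded stream.
    """
    totals: View = dict(state or {})
    for key, value in events:
        totals[key] = totals.get(key, 0) + value
    return totals


def merge_views(a: View, b: View) -> View:
    """Serving-layer merge: add per-key totals from two views."""
    merged: View = dict(a)
    for key, value in b.items():
        merged[key] = merged.get(key, 0) + value
    return merged


def lambda_query(master_dataset: List[Event], recent_events: List[Event]) -> View:
    # Batch layer: full recomputation over all historical data.
    batch_view = aggregate(master_dataset)
    # Speed layer: only events that arrived after the last batch run.
    speed_view = aggregate(recent_events)
    # Serving layer: answer queries from the merged views.
    return merge_views(batch_view, speed_view)


def kappa_query(log: List[Event], from_offset: int = 0) -> View:
    # Single stream-processing path over an append-only log;
    # reprocessing means replaying the log from an earlier offset.
    return aggregate(log[from_offset:])


if __name__ == "__main__":
    history = [("a", 1), ("b", 2), ("a", 3)]
    recent = [("a", 5), ("c", 1)]
    print(lambda_query(history, recent))  # {'a': 9, 'b': 2, 'c': 1}
    print(kappa_query(history + recent))  # {'a': 9, 'b': 2, 'c': 1}
```

Both queries return the same totals here only because they share one `aggregate` function. In a real lambda deployment the batch and speed layers are often separate codebases, and keeping their logic in sync is a commonly cited drawback that kappa avoids by having a single processing path. On GCP, a unified programming model such as Apache Beam running on Dataflow is one way to run the same pipeline code over both bounded and unbounded sources.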

Designing Scalable Data Architectures on the Google Cloud

- Module Overview | 1m 54s
- Prerequisites and Course Outline | 2m 32s
- Changing Architectural Considerations in the Real World | 3m 51s
- Discussion: Using and Building ML Models, Querying Data | 3m 58s
- Discussion: Training Serving Skew, a Multi Cloud World, Lambda, and Kappa Architectures | 5m 13s
- Lambda and Kappa Architectures | 5m 21s
- Setting up a GCS Bucket, PubSub Topic, and BigQuery Table | 5m 46s
- Implementing Integrated Processing for Batch and Streaming | 6m 10s
- Executing the Pipeline for Batch and Stream Processing | 5m 1s