HDInsight Deep Dive: Storm, HBase, and Hive

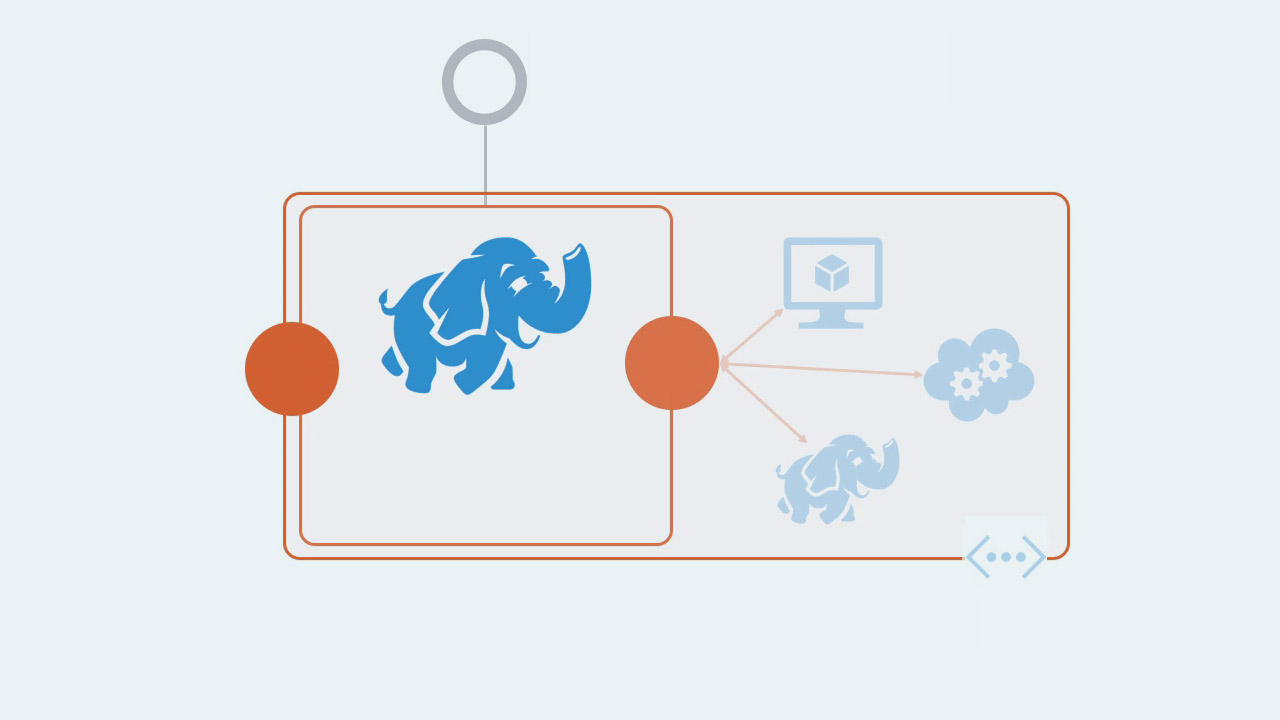

HDInsight is Microsoft's managed Big Data stack in the cloud. With Azure you can provision clusters running Storm, HBase, and Hive, which together can process thousands of events per second, store petabytes of data, and give you a SQL-like interface to query it all. In this course, we'll build out a full solution using the stack and take a deep dive into each of the technologies.

This course is included in the libraries shown below:

- Cloud

- Data

What you'll learn

Storm is a distributed compute platform that you can plug into Azure Event Hubs to power event stream processing. You can scale Storm to read tens of thousands of events per second and build a reliable workflow in which every event is guaranteed to be processed.

HBase is a NoSQL database that is easy to get started with and can store tables with billions of rows and millions of columns. It is designed for real-time data access, and its REST interface lets you read and write HBase data from a .NET Storm app.

Hive is a data warehouse that provides a SQL-like interface over Big Data, including HBase tables and other sources. With Hive you can join across multiple sources and run queries from PowerShell and .NET.

In this course, we use all three technologies running on Microsoft Azure to build a race timing solution, and we dive into performance tuning, reliability, and administration.
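To give a feel for the HBase REST interface, here is a minimal sketch in Python (the course itself uses .NET) that builds the JSON body for writing one row. It assumes the standard HBase REST "CellSet" JSON format, where row keys, column names, and values are all base64-encoded, and an HDInsight endpoint of the form `https://<cluster>.azurehdinsight.net/hbaserest/<table>/<row>`. The cluster name `mycluster`, table `timing`, row key `race1|runner042`, and column `t:sector1` are hypothetical. The sketch only builds the URL and payload. It does not send the request, which would be an HTTP PUT with `Content-Type: application/json` and the cluster credentials.

```python
import base64
import json
from typing import Dict
from urllib.parse import quote


def _b64(text: str) -> str:
    """Base64-encode a UTF-8 string, as the HBase REST JSON format expects."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def build_cell_set(row_key: str, cells: Dict[str, str]) -> str:
    """Build a JSON CellSet body for a single-row PUT.

    `cells` maps "family:qualifier" column names to string values.
    """
    body = {
        "Row": [
            {
                "key": _b64(row_key),
                "Cell": [
                    {"column": _b64(column), "$": _b64(value)}
                    for column, value in cells.items()
                ],
            }
        ]
    }
    return json.dumps(body)


def row_url(cluster: str, table: str, row_key: str) -> str:
    """Assumed HDInsight REST endpoint for one row; the row key is URL-encoded."""
    return (
        f"https://{cluster}.azurehdinsight.net/hbaserest/"
        f"{quote(table, safe='')}/{quote(row_key, safe='')}"
    )


# Usage with hypothetical names: one sector time for one runner.
payload = build_cell_set("race1|runner042", {"t:sector1": "00:12:34"})
url = row_url("mycluster", "timing", "race1|runner042")
```

Encoding everything as base64 lets row keys and values carry arbitrary bytes, which matters once row keys are designed as composite or binary values.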

HDInsight Deep Dive: Storm, HBase, and Hive

- Module Introduction | 2m 2s
- HBase Table Design | 4m 5s
- Modeling Relationships | 3m 2s
- Row Key Design | 2m 55s
- Column Families | 3m 48s
- HBase on Azure | 2m 42s
- HBase Shell Scripts | 3m 7s
- The Stargate REST API | 4m 14s
- .NET Clients for Stargate | 3m 10s
- Storing Timing Events | 4m 6s
- Storing Sector Times | 3m 47s
- Module Summary | 3m 40s