- Course

Natural Language Processing with PyTorch

This course covers the use of advanced neural network constructs and architectures, such as recurrent neural networks, word embeddings, and bidirectional RNNs, to solve complex word and language modeling problems using PyTorch.

This course is included in the libraries shown below:

- Data

What you'll learn

From chatbots to machine-generated literature, some of the hottest applications of ML and AI today involve data in textual form. In this course, Natural Language Processing with PyTorch, you will gain the ability to design and implement complex text processing models using PyTorch, which is fast emerging as a popular choice for building deep-learning models owing to its flexibility, ease of use, and built-in support for optimized hardware such as GPUs. First, you will learn how to leverage recurrent neural networks (RNNs) to capture sequential relationships within text data. Next, you will discover how to represent text using word vector embeddings, a sophisticated form of encoding that is supported out of the box in PyTorch via the torchtext library. Finally, you will explore how to build complex multi-level RNNs and bidirectional RNNs to capture both backward and forward relationships within data. You will round out the course by building sequence-to-sequence RNNs for language translation. When you are finished with this course, you will have the skills and knowledge to design and implement complex natural language processing models using sophisticated recurrent neural networks in PyTorch.
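As a rough illustration of how word embeddings, multi-level RNNs, and bidirectional RNNs fit together in PyTorch, here is a minimal sketch of a text classifier that embeds token IDs with `nn.Embedding` and runs them through a two-layer, bidirectional LSTM. The class name `BiLSTMClassifier` and all sizes are hypothetical choices for illustration, not taken from the course; torchtext is not used here, so the input is assumed to be already converted to integer token IDs.

```python
import torch
import torch.nn as nn


class BiLSTMClassifier(nn.Module):
    """Hypothetical model for illustration only (not the course's code):
    embeddings -> stacked bidirectional LSTM -> linear output layer."""

    def __init__(self, vocab_size, embed_dim, hidden_dim, num_classes, num_layers=2):
        super().__init__()
        # Maps each integer token ID to a dense, learnable word vector.
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        # Stacked ("multi-level") LSTM that reads the sequence in both directions.
        self.lstm = nn.LSTM(
            embed_dim,
            hidden_dim,
            num_layers=num_layers,
            bidirectional=True,
            batch_first=True,
        )
        # Forward and backward final states are concatenated, hence 2 * hidden_dim.
        self.fc = nn.Linear(2 * hidden_dim, num_classes)

    def forward(self, token_ids):
        # token_ids: (batch, seq_len) tensor of integer IDs
        embedded = self.embedding(token_ids)          # (batch, seq_len, embed_dim)
        _, (h_n, _) = self.lstm(embedded)             # h_n: (num_layers * 2, batch, hidden_dim)
        # For the last layer, h_n[-2] is the forward state and h_n[-1] the backward state.
        final = torch.cat([h_n[-2], h_n[-1]], dim=1)  # (batch, 2 * hidden_dim)
        return self.fc(final)                         # (batch, num_classes) logits


torch.manual_seed(0)
model = BiLSTMClassifier(vocab_size=1000, embed_dim=32, hidden_dim=64, num_classes=2)
batch = torch.randint(0, 1000, (4, 12))  # 4 sequences of 12 token IDs each
logits = model(batch)
print(logits.shape)  # torch.Size([4, 2])
```

Concatenating the last layer's forward and backward hidden states gives the classifier a summary that has read the sequence from both ends. For tasks that need one prediction per token, you would use the per-step output tensor (the first value returned by `self.lstm`) instead of `h_n`.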

Natural Language Processing with PyTorch

-

Version Check | 16s

-

Module Overview | 1m 11s

-

Prerequisites and Course Outline | 1m 38s

-

RNNs for Natural Language Processing | 3m 50s

-

Recurrent Neurons | 4m 40s

-

Back Propagation through Time | 4m 34s

-

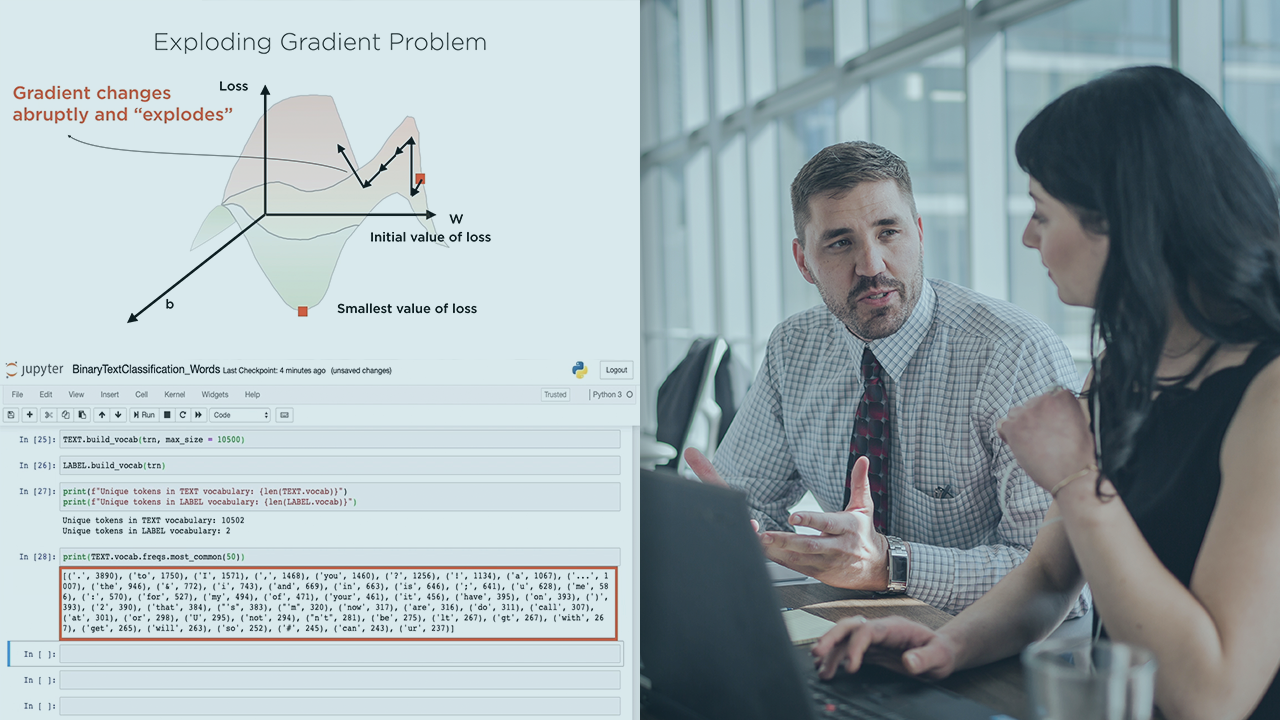

Coping with Vanishing and Exploding Gradients | 6m 18s

-

Long Memory Cells | 6m 38s

-

Module Summary | 1m 31s
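The back propagation through time and vanishing/exploding gradient topics in this module can be illustrated with a deliberately simplified toy recurrence, h_t = w * h_{t-1}, with a single unit and no nonlinearity (an assumption for clarity; real RNN cells are more complex). Unrolled over T steps, dh_T/dh_0 = w^T, so the gradient shrinks toward zero when |w| < 1 and blows up when |w| > 1. The sketch then shows gradient-norm clipping with `torch.nn.utils.clip_grad_norm_`, one common mitigation for exploding gradients. The helper `grad_through_time` is hypothetical and is not the course's code.

```python
import torch


def grad_through_time(w_value, steps):
    """Hypothetical helper: gradient of h_T with respect to h_0
    for the toy linear recurrence h_t = w * h_{t-1}."""
    w = torch.tensor(w_value)
    h0 = torch.tensor(1.0, requires_grad=True)
    h = h0
    for _ in range(steps):  # unroll the recurrence over time
        h = w * h
    h.backward()  # back propagation through the unrolled graph
    return h0.grad.item()


print(grad_through_time(0.5, 20))  # about 9.5e-07: the gradient vanishes
print(grad_through_time(1.5, 20))  # about 3325.3: the gradient explodes

# Gradient-norm clipping rescales gradients whose total norm exceeds max_norm.
param = torch.nn.Parameter(torch.tensor([3.0, 4.0]))
param.grad = torch.tensor([30.0, 40.0])  # norm 50; stands in for a real backward() result
total_norm = torch.nn.utils.clip_grad_norm_([param], max_norm=5.0)
print(total_norm.item(), param.grad)  # 50.0, then roughly tensor([3., 4.])
```

Clipping only caps the size of each update; it does nothing for vanishing gradients, which is one motivation for gated cells such as the LSTM.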