- Course

Monitor Apache Spark Clusters

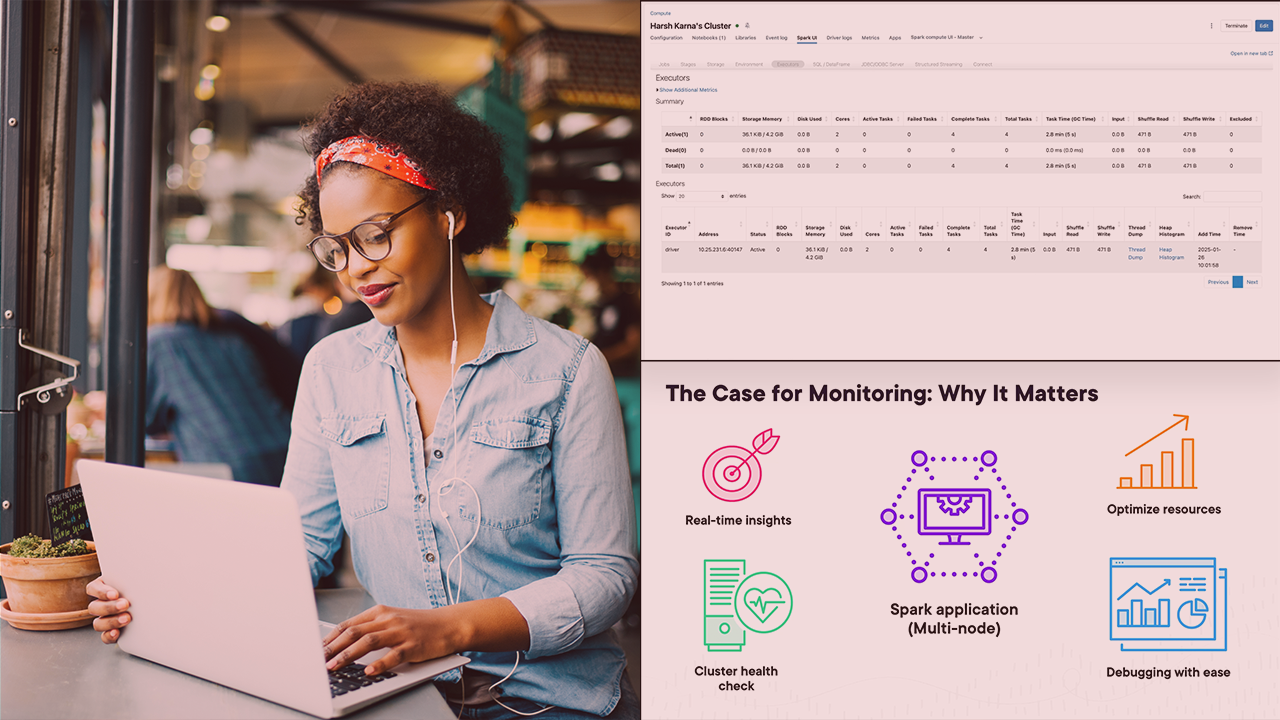

Monitoring Apache Spark clusters is vital for keeping jobs running efficiently. This course will teach you how to monitor and debug Apache Spark clusters using the Spark Web UI.

This course is included in the libraries shown below:

- Data

What you'll learn

Monitoring Apache Spark clusters is essential for identifying bottlenecks, optimizing performance, and debugging distributed jobs.

In this course, Monitor Apache Spark Clusters, you’ll learn how to use the Spark Web UI to understand jobs, stages, and tasks in Spark.

You’ll monitor PySpark and Spark SQL operations, observe shuffles and partitioning, and track execution across multi-node clusters. You’ll also learn to adjust node configurations using environment variables and see how those changes affect execution.

When you’re finished with this course, you’ll better understand how to monitor and debug Spark jobs, so you can optimize and troubleshoot your Spark applications effectively.