- Course

Work with RDDs, DataFrames, and Datasets in Apache Spark

Understanding RDDs in Apache Spark shows practitioners how data is represented in the platform. Learn the fundamental differences between RDDs and the higher-level DataFrame and Dataset APIs when working with datasets and transformations.

This course is included in the following library:

- Data

What you'll learn

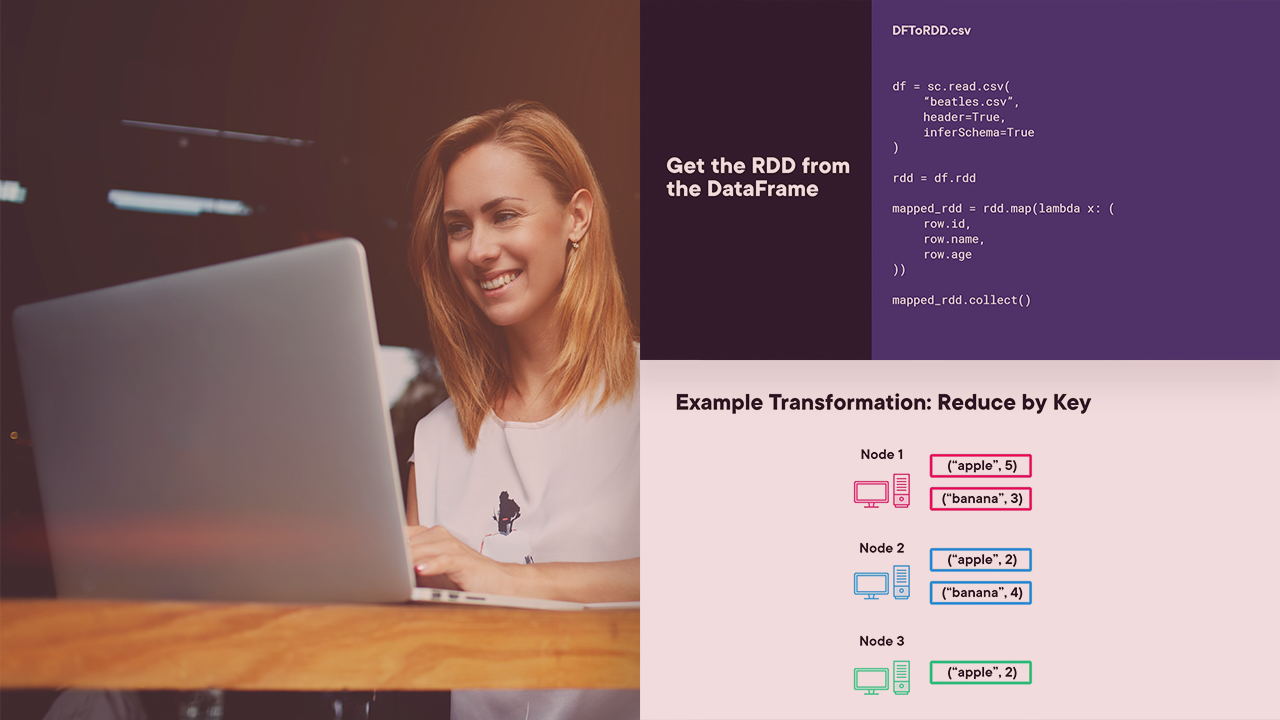

Understanding RDDs and their immutability is key to seeing why Spark uses them as building blocks for processing large amounts of data in a parallel environment.

In this course, Work with RDDs, DataFrames, and Datasets in Apache Spark, you’ll learn the difference between RDDs and DataFrames, when to use each one to represent data, and how Apache Spark processes them under the hood.

You'll see how these abstractions work, giving you a better grasp of how big data processing is done on a platform such as Apache Spark and what it means for efficiency when transforming raw data into something meaningful.

When you’re finished with this course, you’ll have a better understanding of how RDDs represent data in Apache Spark and when to choose DataFrames over RDDs for big data processing.