- Course

Employing Ensemble Methods with scikit-learn

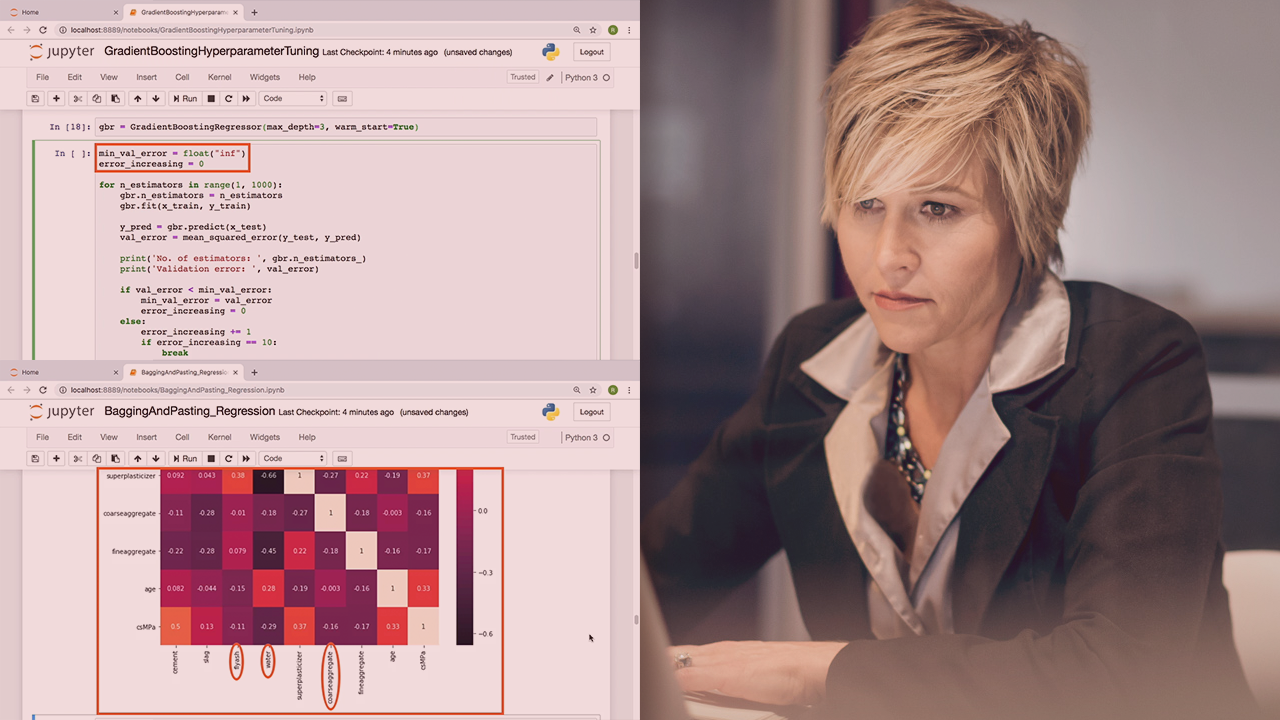

This course covers the theoretical and practical aspects of building ensemble learning solutions in scikit-learn: from random forests built using bagging and pasting, to adaptive and gradient boosting, to model stacking and hyperparameter tuning.

This course is included in the libraries shown below:

- AI

- Data

What you'll learn

Even as the number of machine learning frameworks and libraries grows daily, scikit-learn easily retains its popularity. In particular, scikit-learn offers extremely comprehensive support for ensemble learning, an important technique for mitigating overfitting. In this course, Employing Ensemble Methods with scikit-learn, you will gain the ability to construct several important types of ensemble learning models. First, you will learn why decision trees and random forests are ideal building blocks for ensemble learning, and how hard voting and soft voting can be used in an ensemble model. Next, you will discover how bagging and pasting can be used to control the manner in which the individual learners in the ensemble are trained. Finally, you will round out your knowledge by using model stacking to combine the outputs of individual learners. When you're finished with this course, you will have the skills and knowledge to design and implement sophisticated ensemble learning techniques using the support provided by the scikit-learn framework.
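To make the difference between bagging, pasting, and stacking concrete, here is a minimal sketch using scikit-learn's `BaggingClassifier` and `StackingClassifier`. It assumes a recent scikit-learn release and uses a synthetic dataset from `make_classification` in place of the course's own data; the variable names (`bagging`, `pasting`, `stacking`, `scores`) are illustrative only, not taken from the course.

```python
# A minimal sketch of bagging vs. pasting and model stacking in scikit-learn.
# Assumes a recent scikit-learn; synthetic data from make_classification
# stands in for the course's own dataset, which is not shown here.
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier, RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Bagging: each tree is trained on a random subset drawn WITH replacement.
# The base estimator is passed positionally so this works whether the
# parameter is named `estimator` (newer releases) or `base_estimator` (older ones).
bagging = BaggingClassifier(
    DecisionTreeClassifier(), n_estimators=50, max_samples=0.8,
    bootstrap=True, random_state=0,
)

# Pasting: identical setup, but subsets are drawn WITHOUT replacement.
# max_samples < 1.0 matters here; at 1.0 every tree would see the full training set.
pasting = BaggingClassifier(
    DecisionTreeClassifier(), n_estimators=50, max_samples=0.8,
    bootstrap=False, random_state=0,
)

# Stacking: a final estimator learns how to combine the base learners' outputs,
# which are generated with internal cross-validation during fit.
stacking = StackingClassifier(
    estimators=[
        ("tree", DecisionTreeClassifier(max_depth=5, random_state=0)),
        ("forest", RandomForestClassifier(n_estimators=50, random_state=0)),
    ],
    final_estimator=LogisticRegression(),
)

scores = {}
for name, model in [("bagging", bagging), ("pasting", pasting), ("stacking", stacking)]:
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # mean accuracy on held-out data
    print(f"{name}: {scores[name]:.3f}")
```

The only switch between bagging and pasting in this sketch is the `bootstrap` flag; everything else is held constant so the two can be compared directly.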

Employing Ensemble Methods with scikit-learn

- Version Check | 16s
- Module Overview | 1m 10s
- Prerequisites and Course Outline | 1m 43s
- A Quick Overview of Ensemble Learning | 6m 20s
- Averaging and Boosting, Voting and Stacking | 6m 37s
- Decision Trees in Ensemble Learning | 3m 16s
- Understanding Decision Trees | 3m 21s
- Overfitted Models and Ensemble Learning | 5m 24s
- Getting Started and Exploring the Environment | 2m 2s
- Exploring the Classification Dataset | 6m 33s
- Hard Voting | 5m 3s
- Soft Voting | 4m 12s
- Module Summary | 1m 22s
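The hard voting and soft voting topics in this module can be illustrated with scikit-learn's `VotingClassifier`. The sketch below is an assumption-laden illustration, not the course's code: it uses synthetic data from `make_classification` rather than the course's classification dataset, and the choice of base learners (logistic regression, random forest, Gaussian naive Bayes) is arbitrary. It also reproduces each voting rule by hand to show what the two modes compute.

```python
# Sketch: hard vs. soft voting with scikit-learn's VotingClassifier.
# Synthetic data and the particular base learners are illustrative assumptions.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = make_classification(n_samples=500, n_features=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

estimators = [
    ("lr", LogisticRegression(max_iter=1000)),
    ("rf", RandomForestClassifier(n_estimators=100, random_state=42)),
    ("nb", GaussianNB()),
]

# Hard voting: each model casts one vote for a class label; the majority wins.
hard = VotingClassifier(estimators=estimators, voting="hard").fit(X_train, y_train)

# Soft voting: average the predicted class probabilities and pick the largest.
# This requires every base estimator to implement predict_proba.
soft = VotingClassifier(estimators=estimators, voting="soft").fit(X_train, y_train)

print("hard voting accuracy:", hard.score(X_test, y_test))
print("soft voting accuracy:", soft.score(X_test, y_test))

# Hard voting by hand: count each sample's votes and take the most common label.
votes = np.stack([est.predict(X_test) for est in hard.estimators_], axis=1)
manual_hard = hard.classes_[np.array([np.bincount(row).argmax() for row in votes])]

# Soft voting by hand: unweighted mean of the fitted estimators' probabilities.
manual_proba = sum(est.predict_proba(X_test) for est in soft.estimators_) / len(soft.estimators_)
manual_soft = soft.classes_[manual_proba.argmax(axis=1)]
```

Soft voting lets a confident model outweigh two uncertain ones, whereas hard voting counts every model's vote equally regardless of confidence.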