- Course

Deploying Containerized Workloads Using Google Cloud Kubernetes Engine

This course introduces Google Kubernetes Engine (GKE), a robust and seamless way to run containerized workloads on Google Cloud Platform (GCP). Topics include cluster creation, volume storage abstractions, and Ingress and Service objects.

This course is included in the libraries shown below:

- Cloud

What you'll learn

Running Kubernetes clusters in the cloud involves working with a variety of technologies, including Docker, Kubernetes, and Google Compute Engine (GCE) virtual machine instances. Combining them can get quite involved.

In this course, Deploying Containerized Workloads Using Google Cloud Kubernetes Engine, you will learn how to deploy and configure clusters of VM instances running your Docker containers on the Google Cloud Platform using Google Kubernetes Engine (GKE).

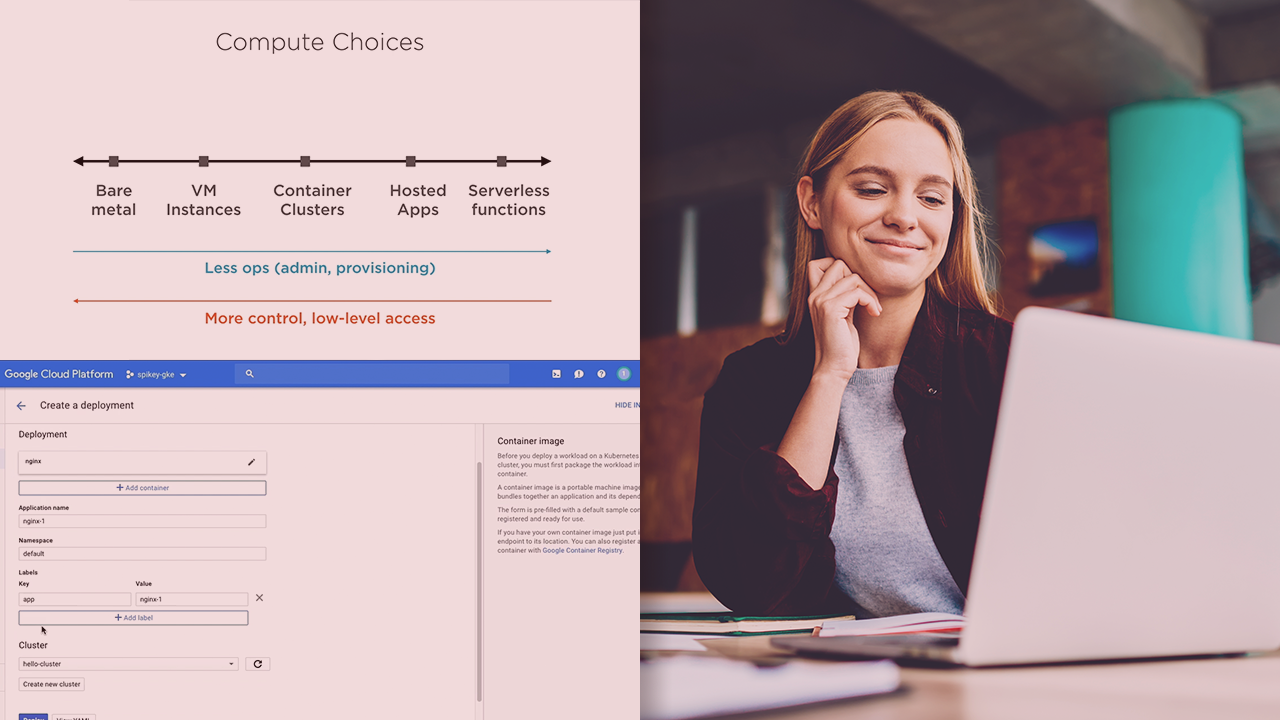

First, you will learn where GKE fits relative to other GCP compute options such as GCE VMs, App Engine, and Cloud Functions. You will understand fundamental building blocks in Kubernetes, such as pods, nodes and node pools, and how these relate to the fundamental building blocks of Docker, namely containers. Pods, ReplicaSets, and Deployments are core Kubernetes concepts, and you will understand each of these in detail.

Next, you will discover how to create and manage clusters, and how to scale the workloads running on them using the Horizontal Pod Autoscaler (HPA). You will also learn about StatefulSets and DaemonSets on GKE.
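As a rough illustration of the scaling decision the Horizontal Pod Autoscaler makes, the sketch below applies the replica formula given in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, its parameters, and the replica bounds are hypothetical and chosen only for this example. The real controller also accounts for pod readiness, missing metrics, and stabilization windows, none of which is modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified sketch of the HPA replica calculation (illustrative only)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    # Ratio of observed load to the target load per pod.
    ratio = current_metric / target_metric

    # Skip scaling when the ratio is close enough to 1.0
    # (0.1 is assumed here as the tolerance).
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)

    # Keep the result inside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

With four pods averaging 90% CPU against a 60% target, the ratio is 1.5, so this sketch asks for six pods. A ratio within the tolerance leaves the replica count unchanged.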

Finally, you will explore how to share state using volume abstractions, and how to handle user requests using Service and Ingress objects. You will see how custom Docker images are built and stored in the Google Container Registry, and learn about a new and advanced feature, Binary Authorization.

When you’re finished with this course, you will have the skills and knowledge of Google Kubernetes Engine needed to construct scalable clusters running Docker containers on GCP.

Deploying Containerized Workloads Using Google Cloud Kubernetes Engine

- Module Overview | 1m 32s
- Prerequisites and Course Outline | 3m 6s
- Introducing Containers | 5m 23s
- Introducing Kubernetes | 6m 38s
- Clusters, Nodes, Node Pools, and Node Images | 3m 52s
- Pods | 7m 4s
- Kubernetes as an Orchestrator | 3m 46s
- Replication and Deployment | 4m
- Services | 2m 15s
- Volume Abstractions | 4m 5s
- Load Balancers | 2m 35s
- Ingress | 1m 54s
- StatefulSets and DaemonSets | 1m 58s
- Horizontal Pod Autoscaler | 3m 59s
- Pricing | 2m 10s