- Course

Microsoft Azure Developer: Implementing Data Lake Storage Gen2

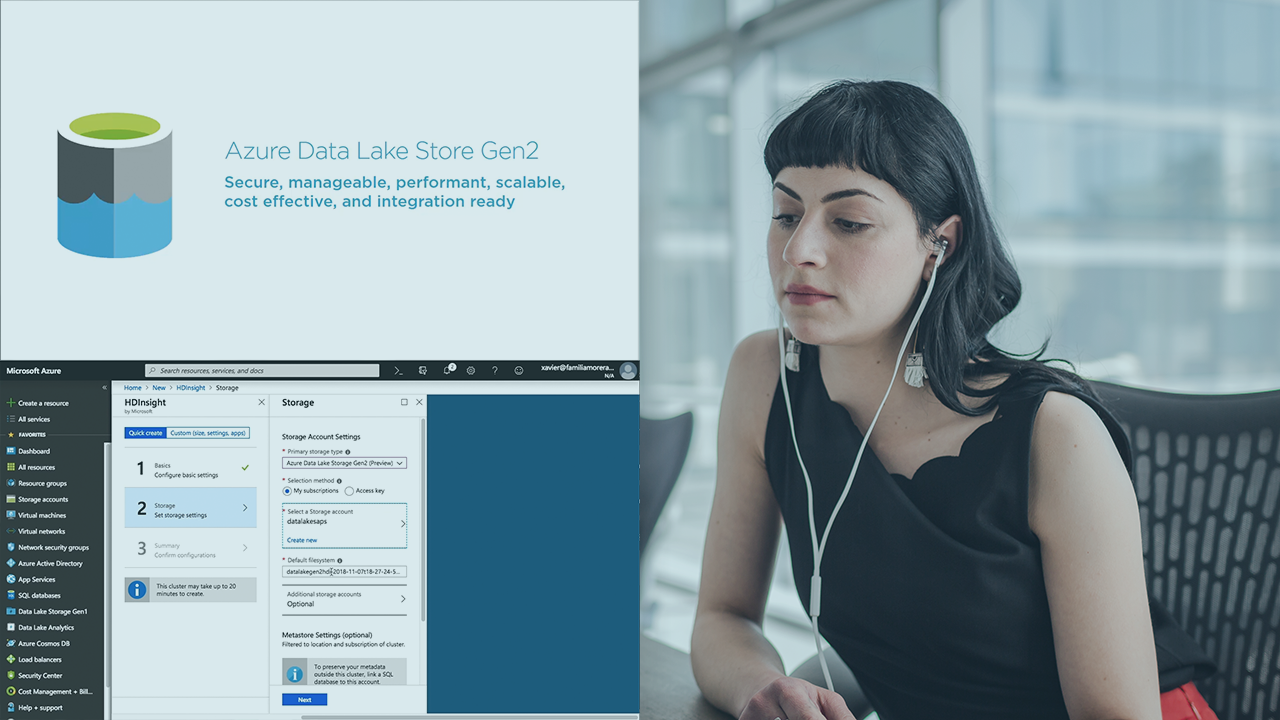

This course will teach you how to work with Microsoft Azure's cloud data repository built on top of Blob Storage: Data Lake Storage Gen2.

This course is included in the libraries shown below:

- Cloud

What you'll learn

Data lakes hold vast amounts of data, a must when working with Big Data. In this course, Microsoft Azure Developer: Implementing Data Lake Storage Gen2, you will gain foundational knowledge of a large, HDFS-compatible data repository in Microsoft Azure and the ability to work with it. First, you will learn how to ingest data. Next, you will discover how to manage and work with your Big Data. Finally, you will explore how to run jobs on a Hadoop cluster, using platforms like Spark with the ABFS driver. When you're finished with this course, you will have the skills and knowledge to work with large data repositories in Microsoft's cloud, everything needed to build solutions at scale that help you discover trends and insights.

Microsoft Azure Developer: Implementing Data Lake Storage Gen2

- Getting Started with Azure Data Lake Store Gen2 | 2m 13s
- The Road to Azure Data Lake Store Gen2 | 5m 16s
- Architecture and Features of ADLS Gen2 | 3m 15s
- Demo: Creating an Azure Data Lake Store Gen2 with Portal | 4m 46s
- Demo: Creating and Deleting an Azure Data Lake Store Gen 2 with PowerShell | 3m 4s
- Takeaway | 58s