- Course

Deploying TensorFlow Models to AWS, Azure, and the GCP

This course helps data scientists and engineers who have built a strong ML model in TensorFlow deploy it to production, either locally or on one of the three major cloud platforms: Azure, AWS, or GCP.

This course is included in the libraries shown below:

- Data

What you'll learn

Deploying and hosting a trained TensorFlow model locally or on your cloud platform of choice (Azure, AWS, or GCP) can be challenging. In this course, Deploying TensorFlow Models to AWS, Azure, and the GCP, you will learn how to take your model to production on the platform of your choice. The course starts by showing how to save the parameters of a trained model using the SavedModel interface, a universal interface for TensorFlow models. You will then learn how to scale the locally hosted model by packaging all of its dependencies in a Docker container. Next, you will be introduced to SageMaker, the fully managed ML service offered by Amazon on AWS. Finally, you will deploy your model on the Google Cloud Platform using Cloud ML Engine. By the end of the course, you will be familiar with how a production-ready TensorFlow model is set up, and with how to build and train your models end to end on your local machine and on the three major cloud platforms.

Software required: TensorFlow, Python.

Deploying TensorFlow Models to AWS, Azure, and the GCP

-

Module Overview | 1m 24s

-

Prerequisites and Course Overview | 2m 40s

-

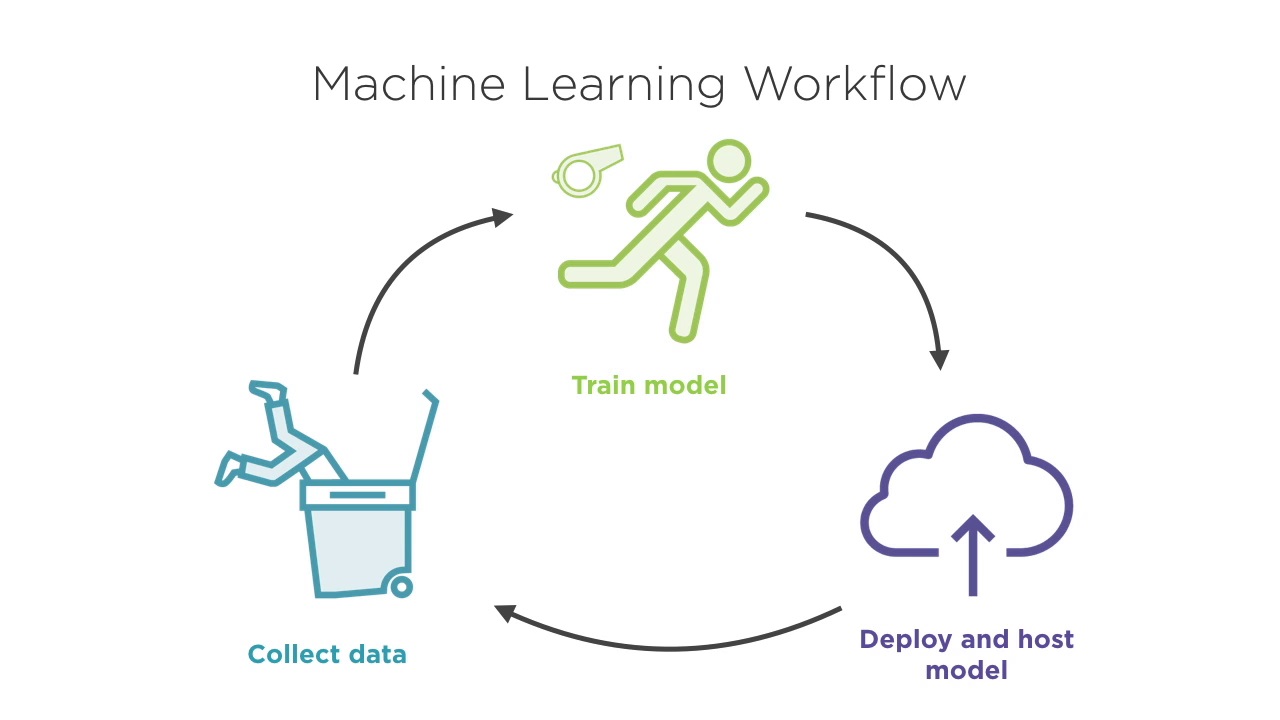

The Machine Learning Workflow: Local Serving | 2m 54s

-

Demo: Exploring the Churn Prediction Dataset | 3m 37s

-

Demo: Training and the Experiment Function | 3m 15s

-

The Saved Model | 2m 2s

-

The TensorFlow Model Server | 1m 31s

-

gRPC and Protocol Buffers | 1m 58s

-

Demo: Setting up the Azure VM | 3m 2s

-

Demo: Installing TensorFlow, gRPC, Serving APIs and the Model Server | 2m 52s

-

Demo: Deploying and Hosting the MNIST Classification Model | 3m 19s

-

Demo: Setting up the Churn Model | 2m 57s

-

Demo: Training and Saving the Model | 3m 43s

-

Demo: Making Predictions from a Saved Model | 5m 44s