- Course

Machine Learning with XGBoost Using scikit-learn in Python

XGBoost is one of the most successful supervised machine learning approaches in competitive modeling on structured datasets. This course will teach you the basics of XGBoost, including its basic syntax and functions, and how to implement the model in the real world.

This course is included in the libraries shown below:

- Data

What you'll learn

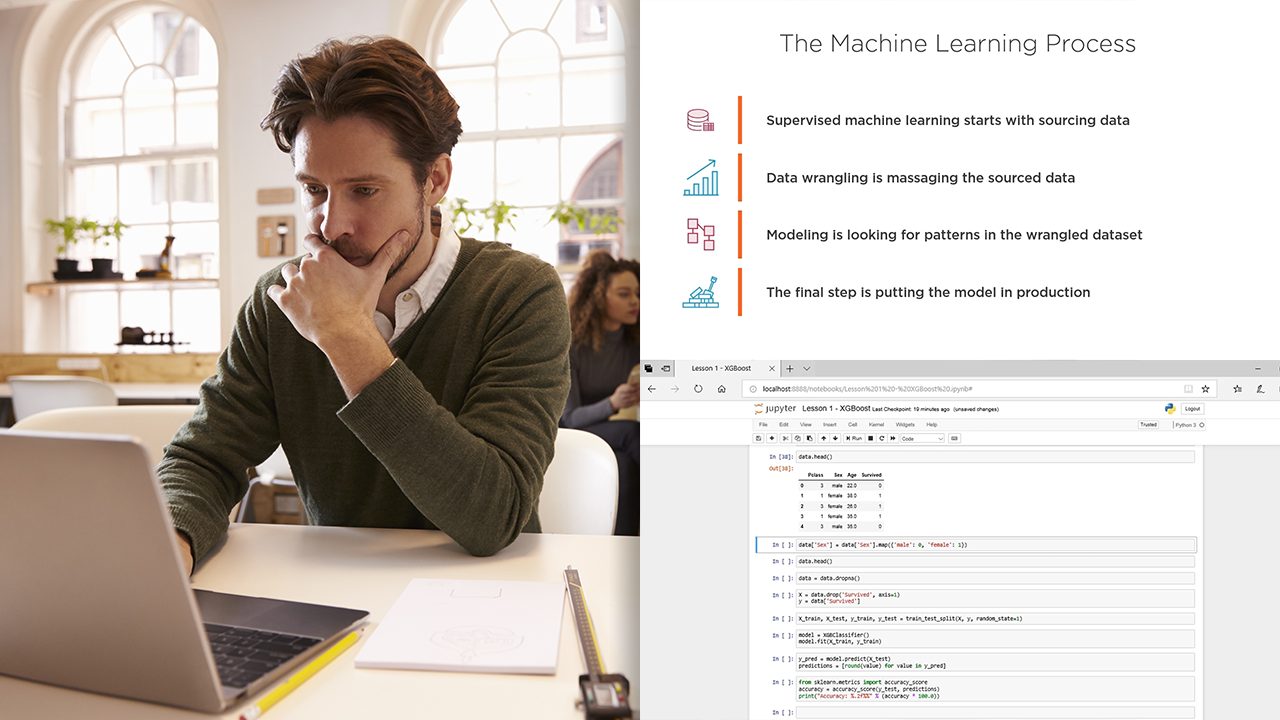

At the core of applied machine learning is supervised machine learning. In this course, Machine Learning with XGBoost Using scikit-learn in Python, you will learn how to build supervised learning models using one of the most accurate algorithms available for structured data. First, you will discover what XGBoost is and why it has revolutionized competitive modeling. Next, you will explore the importance of data wrangling and see how clean data affects XGBoost’s performance. Finally, you will learn how to build, train, and score XGBoost models for real-world performance. When you are finished with this course, you will have a foundational knowledge of XGBoost that will help you as you move toward becoming a machine learning engineer.