- Course

Implementing Vector Search with LlamaIndex

This course will teach you how to build a research assistant capable of reasoning and answering questions over document data.

This course is included in the libraries shown below:

- Core Tech

What you'll learn

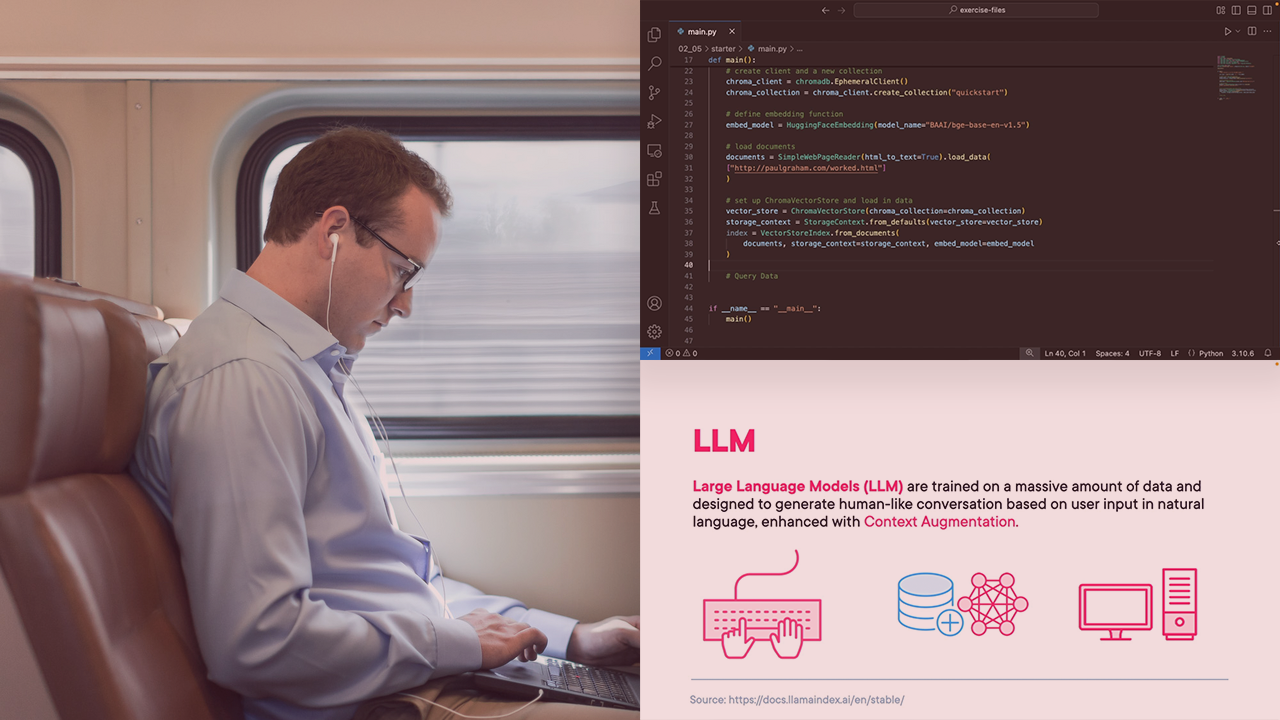

Using LLMs to perform complex tasks doesn’t need to be difficult. In this course, Implementing Vector Search with LlamaIndex, you’ll learn to build a custom LLM-powered assistant that uses the ChromaDB vector store to load and search documents and to generate context-augmented outputs.

First, you’ll explore how to set up a vector store as an index to load and query data with a quickstart example.

Next, you’ll discover how to implement a ChromaDB vector store pipeline to generate content with augmented context.

Finally, you’ll learn how to create an LLM-powered, multi-step pipeline that performs multiple tasks.

When you’re finished with this course, you’ll have the Retrieval-Augmented Generation (RAG) skills and knowledge needed to design and implement an end-to-end LLM-powered query system that combines structured retrieval, advanced ranking techniques, and generative AI capabilities for real-world applications.
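
To give a feel for the load, search, and augment flow described above, here is a minimal sketch in plain Python. It is not the LlamaIndex or ChromaDB API and is not taken from the course: `ToyVectorStore`, `embed`, and `build_prompt` are hypothetical names, and the bag-of-words "embedding" is an assumption standing in for a real embedding model.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding (assumption): lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store; a stand-in for a real vector store."""

    def __init__(self):
        self._rows = []  # list of (document text, embedding) pairs

    def add(self, documents):
        """Load step: embed each document and keep it alongside its text."""
        for doc in documents:
            self._rows.append((doc, embed(doc)))

    def query(self, question, top_k=2):
        """Search step: return the top_k documents most similar to the question."""
        q = embed(question)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [doc for doc, _ in ranked[:top_k]]


def build_prompt(question, context):
    """Augment step: prepend retrieved passages to the question for an LLM."""
    passages = "\n".join(f"- {doc}" for doc in context)
    return f"Context:\n{passages}\n\nQuestion: {question}"


store = ToyVectorStore()
store.add([
    "Chroma stores embeddings for similarity search.",
    "LlamaIndex loads documents and builds an index.",
    "Bananas are rich in potassium.",
])
question = "Which fruit is rich in potassium?"
prompt = build_prompt(question, store.query(question, top_k=1))
print(prompt)
```

In a real pipeline, the toy embedding would be replaced by a learned embedding model and the final prompt would be sent to an LLM; the three steps keep the same shape.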