- Course

Build ETL Pipelines with PySpark

Learn how to build scalable ETL pipelines using PySpark for big data processing. This course will teach you how to extract, transform, and load data efficiently using PySpark, enabling you to handle large datasets with ease.

- Course

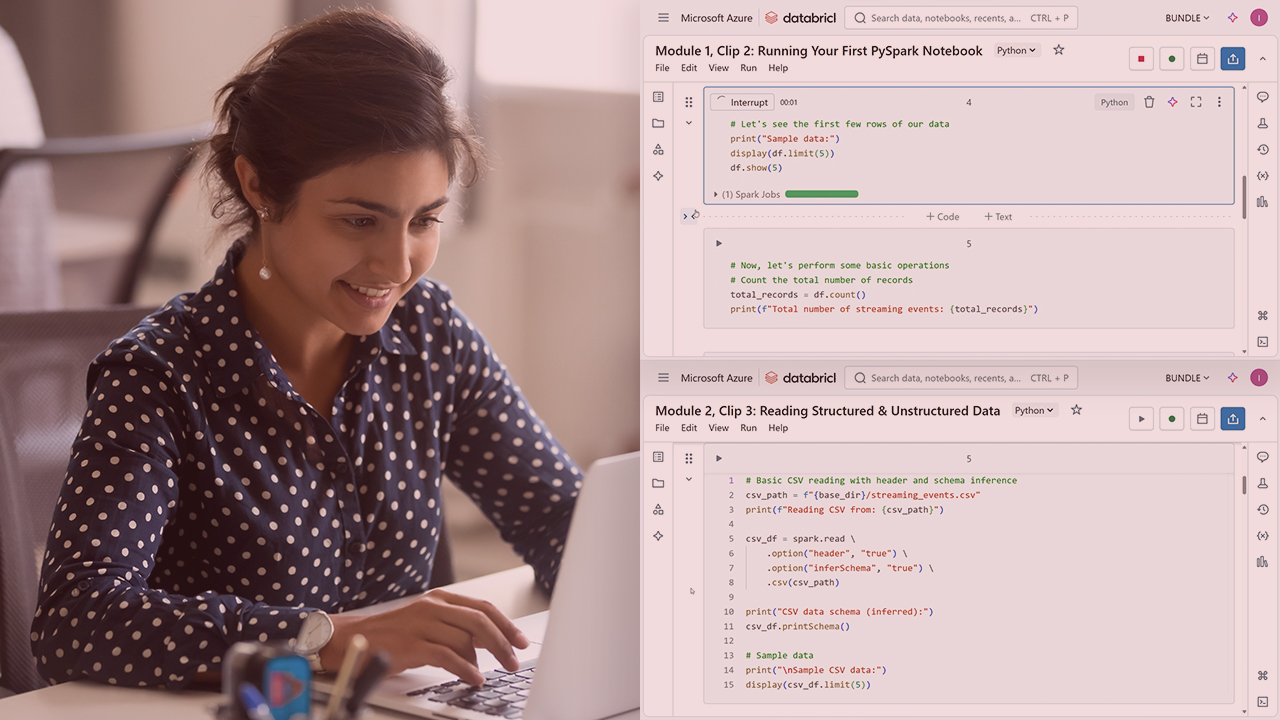

Build ETL Pipelines with PySpark

Learn how to build scalable ETL pipelines using PySpark for big data processing. This course will teach you how to extract, transform, and load data efficiently using PySpark, enabling you to handle large datasets with ease.

This course is included in the Data library.

What you'll learn

Handling large datasets with traditional ETL tools can be slow, inefficient, and difficult to scale. PySpark, the Python API for Apache Spark, provides a distributed computing framework for processing big data efficiently, but getting started can be challenging without the right guidance.

In this course, Build ETL Pipelines with PySpark, you’ll gain the ability to design and implement scalable ETL workflows using PySpark.

First, you’ll explore how to extract data from multiple sources, including structured and semi-structured formats such as CSV, JSON, and Parquet.

Next, you’ll discover how to transform and clean data using PySpark’s powerful DataFrame operations, including filtering, aggregations, and handling missing values.

Finally, you’ll learn how to efficiently load processed data into various destinations, optimizing performance with partitioning, bucketing, and incremental updates.

When you’re finished with this course, you’ll have the PySpark ETL skills and knowledge needed to build scalable, high-performance data pipelines for real-world applications.